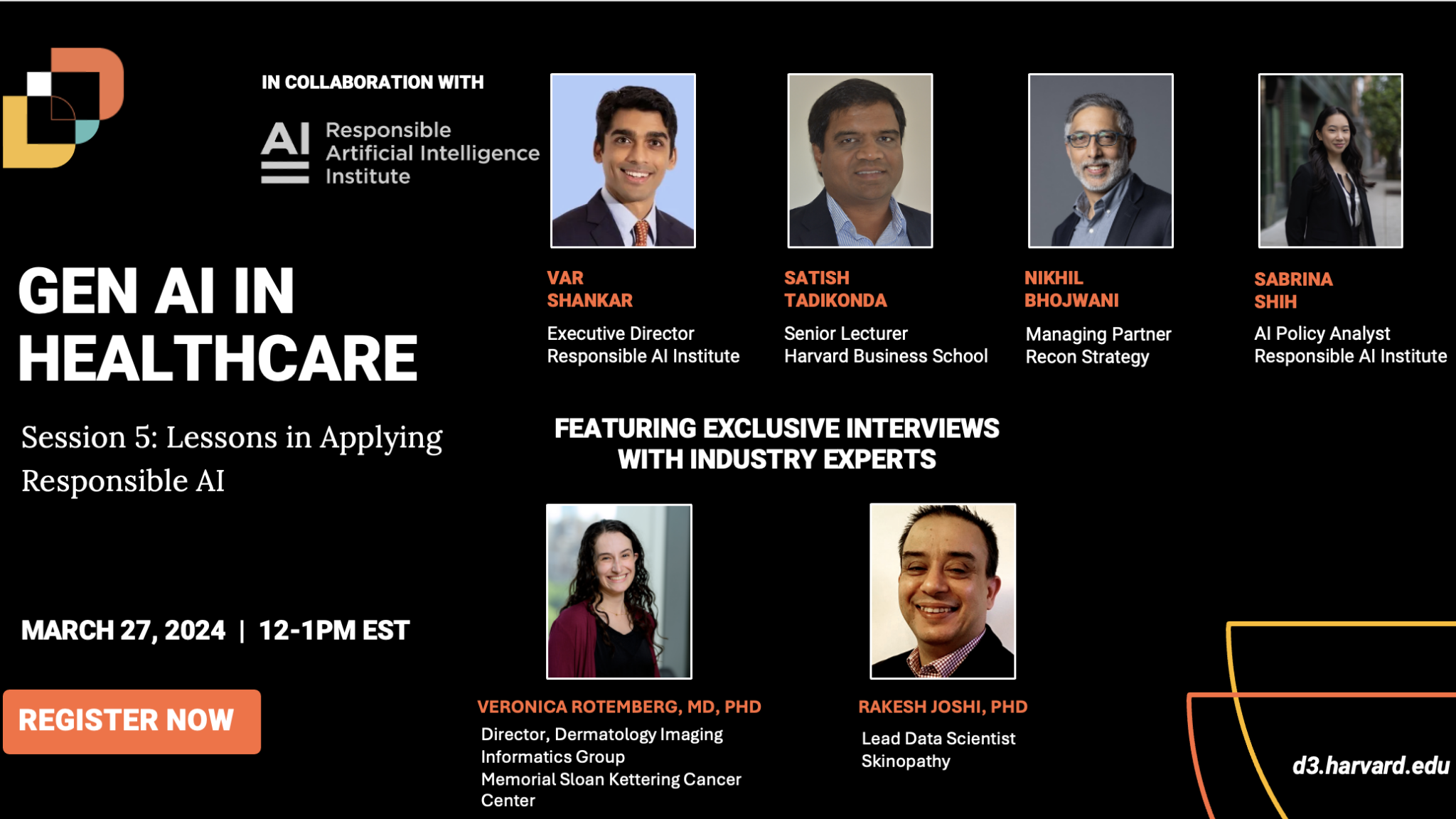

Insights from the March 27th, 2024 capstone session on Gen AI applied use cases, risks, and responsibilities

Recordings and articles from the Generative AI in Healthcare series can be found here.

In the capstone session of the Generative AI in Healthcare series, Satish Tadikonda (HBS) spoke with Responsible AI Institute experts Manoj Saxena, Var Shankar, and Sabrina Shih as they outlined current applications of generative AI in healthcare through the lens of two case studies. The conversation included exclusive interviews with Veronica Rotemberg (Memorial Sloan Kettering Cancer Center) and Rakesh Joshi (Skinopathy), who shared their perspectives on responsible AI implementation, and closed with a broader discussion on how these lessons can be applied in a variety of AI contexts.

Responsible AI as a Foundation for Positive Impact

Satish Tadikonda and Manoj Saxena kicked off the session by discussing the key questions at the core of this series – can generative AI have a positive impact in healthcare, and if so, how can such tools be responsibly deployed? Tadikonda summarized the prior sessions with a resounding yes: AI can indeed produce positive impacts in healthcare, but it is crucial to deploy it responsibly given societal concerns and the varying adoption levels across healthcare domains. Saxena affirmed that enterprises are applying AI with cautious excitement, and outlined a framework anchored in NIST guidelines to operationalize responsible AI. He gave examples of potential AI harms, such as incorrect dosages and data privacy breaches in an NHS project involving a generative AI chatbot, and advocated for a comprehensive approach to ensure AI’s positive impact. He also underscored the need for responsible AI governance from the top levels of organizations, integrating principles and frameworks early in AI design to mitigate risks effectively.

Applied Use Case – Memorial Sloan Kettering Cancer Center

Sabrina Shih then took the helm to explain the use of skin lesion analyzers in detecting cancerous lesions, outlining their integration into patient diagnosis journeys through self-examination support and aiding clinicians. Shih highlighted the benefits of such analyzers, including convenience and triaging support, while also discussing the advancements in generative AI for skin cancer detection. In an interview, Dr. Veronica Rotemberg, Director of the Dermatology Imaging Informatics Group at Memorial Sloan Kettering Cancer Center, discussed the institution’s approach to evaluating an open-source, non-commercial dermatoscopy-based algorithm for melanoma detection. She delved into the characteristics of trustworthy AI and emphasized the need for large collaborative studies and efficient validation processes to improve the accuracy and efficiency of AI algorithms. She also addressed concerns regarding biases and the challenges in evaluating AI performance in clinical scenarios, stressing the importance of multidisciplinary collaboration and thoughtful consideration of potential harms to ensure patient safety and well-being.

Applied Use Case – Skinopathy

In the second case presented, Dr. Rakesh Joshi, Lead Data Scientist at Skinopathy, discussed the company’s patient engagement platform and app called “GetSkinHelp.” The app leverages AI to provide remote skin cancer screening for patients, facilitate scheduling options, and enable triaging of cases for clinicians. Dr. Joshi emphasized three key characteristics of trustworthy AI platforms: consistency of results, accuracy against benchmark data sets, and error transparency. He explained Skinopathy’s approach to addressing these characteristics, including ensuring reproducible results across different skin regions and types, benchmarking against open-source data, and being transparent about system limitations and biases. Dr. Joshi also noted the importance of randomized clinical trials to validate the platform’s reliability across diverse demographics, and the need for ongoing recalibration and data collection to maintain accuracy, particularly in underrepresented geographic areas or demographic groups. Additionally, he highlighted the company’s careful consideration of patient privacy and the collaborative decision-making process involving stakeholders such as patients, physicians, and ethicists in determining the platform’s features and data usage. He also discussed strategies to mitigate biases in clinical decision-making, such as presenting AI results only after clinicians have made their own assessments. He asserted that the aim of the tool is to support clinical decision-making rather than replace it, and to maintain simplicity for patients while providing detailed information to clinicians.

Transferable Takeaways

The throughline of these case studies was that AI applications are duty-bound to prioritize safety, accuracy, and transparency in developing solutions for patient care. Var Shankar closed out the presentation by emphasizing the importance of drawing lessons from these examples to develop a responsible approach for organizations seeking to deploy AI in healthcare and other industries. He outlined key steps, including building on existing knowledge, understanding the benefits and pitfalls of AI, defining trustworthy characteristics, investing in testing and evaluation, and ensuring external checks are in place.

In the Q&A that followed, the speakers also delved into the role of culture in applying AI solutions, with Manoj Saxena highlighting the need for leadership in cultivating an environment that supports experimentation and lifelong learning. The conversation further explored the implications of AI regulation, particularly in the context of new global AI laws, and the need for responsibility to be a key facet throughout the AI pipeline, rather than an afterthought once the technology has been built. Saxena also explained that responsible AI can be profitable for institutions under financial pressure by separating AI investments into “mundane vs moonshot” buckets, starting with cost-saving projects that boost efficiency before investing in more transformative initiatives, thereby creating benefits for both AI-driven organizations and the audiences they seek to serve.

—

The Gen AI in Healthcare series is collaboratively produced by Harvard’s Digital, Data, Design (D^3) Institute and the Responsible AI Institute.

About Responsible AI Institute

Founded in 2016, Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products. Members including ATB Financial, Amazon Web Services, Boston Consulting Group, Yum! Brands, and many other leading companies and institutions collaborate with RAI Institute to bring responsible AI to all industry sectors.