Insights from the December 13th, 2023 session on Gen AI use cases in the biopharma sector.

In the second session of the Generative AI in Healthcare series, Satish Tadikonda (HBS) and Nikhil Bhojwani (Recon Strategy) led a discussion about current AI developments in the biopharma sector. The session featured exclusive interviews with experts from five leading organizations: Anna Marie Wagner (SVP, Head of AI, Ginkgo Bioworks), Abraham Heifets (CEO, Atomwise), Michael Nally (CEO, Generate Biomedicines), Andrew Kress (CEO, HealthVerity), and Stéphane Bancel (CEO, Moderna Therapeutics). Each shared insights on how AI is being used today and how it will shape our shared future.

Recordings of the Generative AI in Healthcare series: Session 1 and Session 2

Current Use Cases

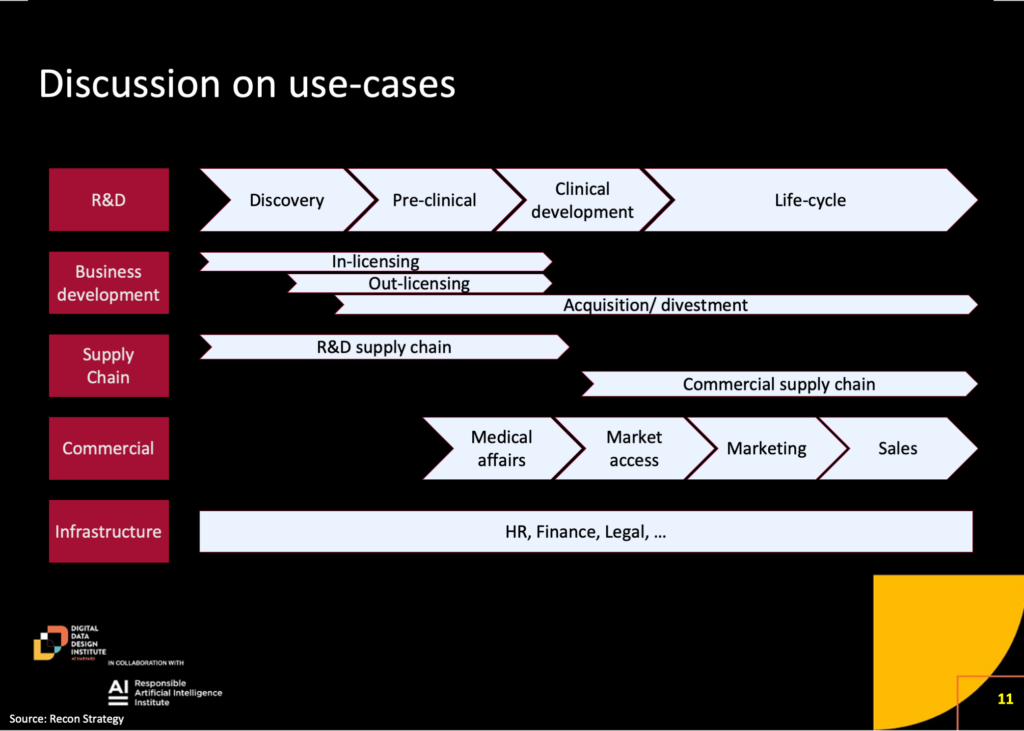

During the session, the speakers outlined a wide range of use cases for artificial intelligence (AI) across the biopharma value chain, including in Research and Development (R&D), Supply Chain, and Commercial.

AI is being used to accelerate and enhance drug discovery and to improve every step of development, from better-designed clinical trials to more efficient and less biased patient recruitment. Anna Marie Wagner emphasized the impact on drug discovery, stating that “very often the winning design, the best performing protein as measured in a lab, looks almost nothing like what the best scientists designed as a starting point, you know, might have 20% similarity to that original protein.” Both she and Mike Nally also said they view hallucinations as a feature rather than a bug: the previously unseen deviations generated by AI can sometimes lead to breakthroughs that scientists might otherwise have missed, given the complexity and computational burden of that analysis.

Beyond the lab, AI supports tasks such as generating documentation, drafting regulatory submissions, and assisting with medical writing. Stéphane Bancel described how Moderna is using its own version of ChatGPT, called mChat, to overhaul how medical and regulatory content is created.

On the commercial side, AI applications span medical affairs, market access, sales, and marketing, with examples ranging from identifying rare disease patients to automating content generation for marketing.

Our guest experts highlighted the transformative potential of AI across the biopharma value chain, noting that using large language models to streamline well-suited tasks can significantly improve efficiency and decision-making throughout the industry.

Associated Risks

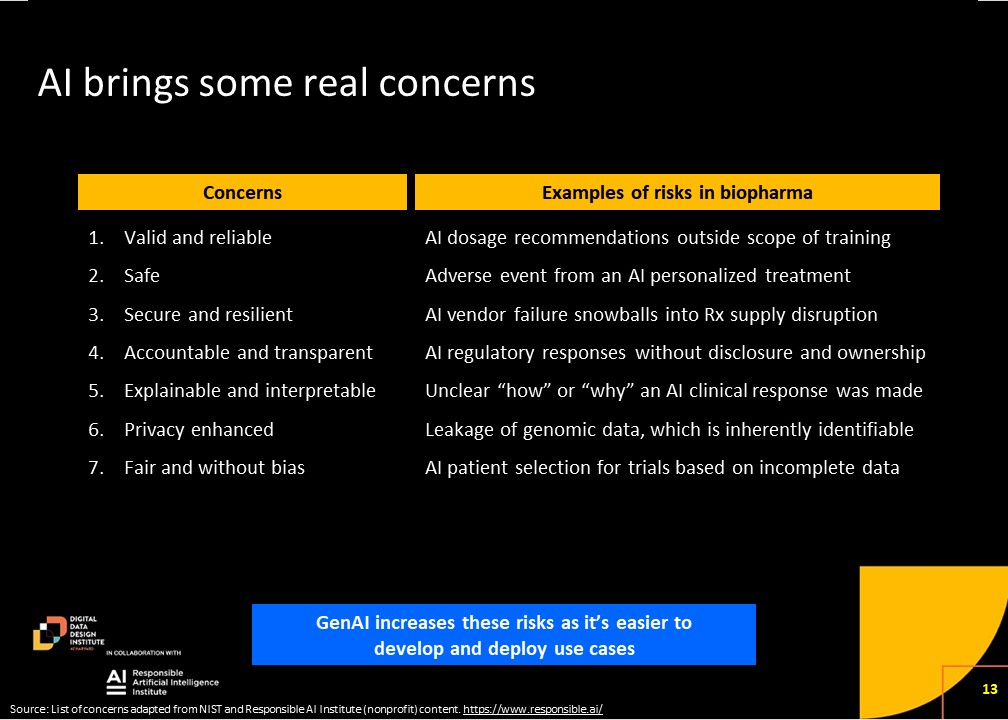

The second segment centered on the potential risks of widespread AI adoption in the biopharma industry. Our experts outlined a range of biopharma-specific risks that drive the need for responsible AI.

One notable concern is the scalability of regulatory processes, especially in clinical trials. The conversation highlighted the challenges posed by an exponential increase in the number of new molecules entering clinical trials, which could overwhelm regulatory bodies like the FDA. Andrew Kress also pointed out that “for biopharma, the risks that you would observe are the same as they would be in many other areas with one additional note, which is that because it’s such a regulated industry, there is a need for work that’s done to be both transparent and reproducible. One of the challenges that you would envision occurring here is the ability to look at a study or look at data or a conclusion and be able to go back and say, how did we get to that conclusion? And can we recreate that answer?”

Another significant concern involves responsible AI use and the broader ecosystem. The speakers discussed the need for regulations that go beyond the AI tools themselves and extend to the environment in which those tools operate. They underscored the importance of addressing not only the potential misuse of AI tools but also the broader biosecurity implications. The rapid advancement and accessibility of AI technology pose a dual challenge: excitement about innovation, but also fear of potential misuse. The call to build a comprehensive ecosystem, including biosecurity measures, points to the need for a cultural shift in how the industry understands and addresses the risks of AI.

Envisioning the Future

Finally, the speakers discussed a future in which the time required to analyze molecular data is drastically reduced, fundamentally transforming the workflows of scientists and clinicians. They described a vision for the biopharmaceutical industry in which AI acts as a catalyst for change rather than a supplementary tool. Central to that vision is biology becoming instantly programmable, marking a shift from the traditional trial-and-error approach to a more predictable paradigm.

Abe Heifets brought this home with a vivid example: running a safety panel with AI instead of waiting a couple of weeks for lab results. “You go get your coffee, you get back, see what the results are, and then you design the next one… the work of the medicinal chemist isn’t replaced by the AI… the AI has replaced the assay.”

Mike Nally shared a vision reaching far into the future. “I think the next century will be the biology century, and it’s going to be through augmented intelligence – humans plus computational power – that will ultimately help us understand the mathematical underpinnings of biology in a way that allows us to increasingly change the probabilities of success in programming biology to improve human health.”

All of our speakers saw AI as a potential solution to long-standing challenges in research productivity and medication accessibility. They shared the view that biology, augmented by artificial intelligence, could become a central information technology, shaping advances in human health for decades to come.

Stéphane Bancel summed it up succinctly: “I believe it [AI] is as big a disruption as the desktop computer.”

The Gen AI in Healthcare series is collaboratively produced by Harvard’s Digital, Data, Design (D³) Institute and the Responsible AI Institute.

About Responsible AI Institute

Founded in 2016, Responsible AI Institute (RAI Institute) is a global, member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products. Members, including ATB Financial, Amazon Web Services, Boston Consulting Group, Yum! Brands, and many other leading companies and institutions, collaborate with RAI Institute to bring responsible AI to all industry sectors. Become a member.