Applying AI to the HBS Mission Statement: An Unexpected Exploration in Inclusivity

*WARNING* The AI generated some real nightmare fuel. Proceed with caution.

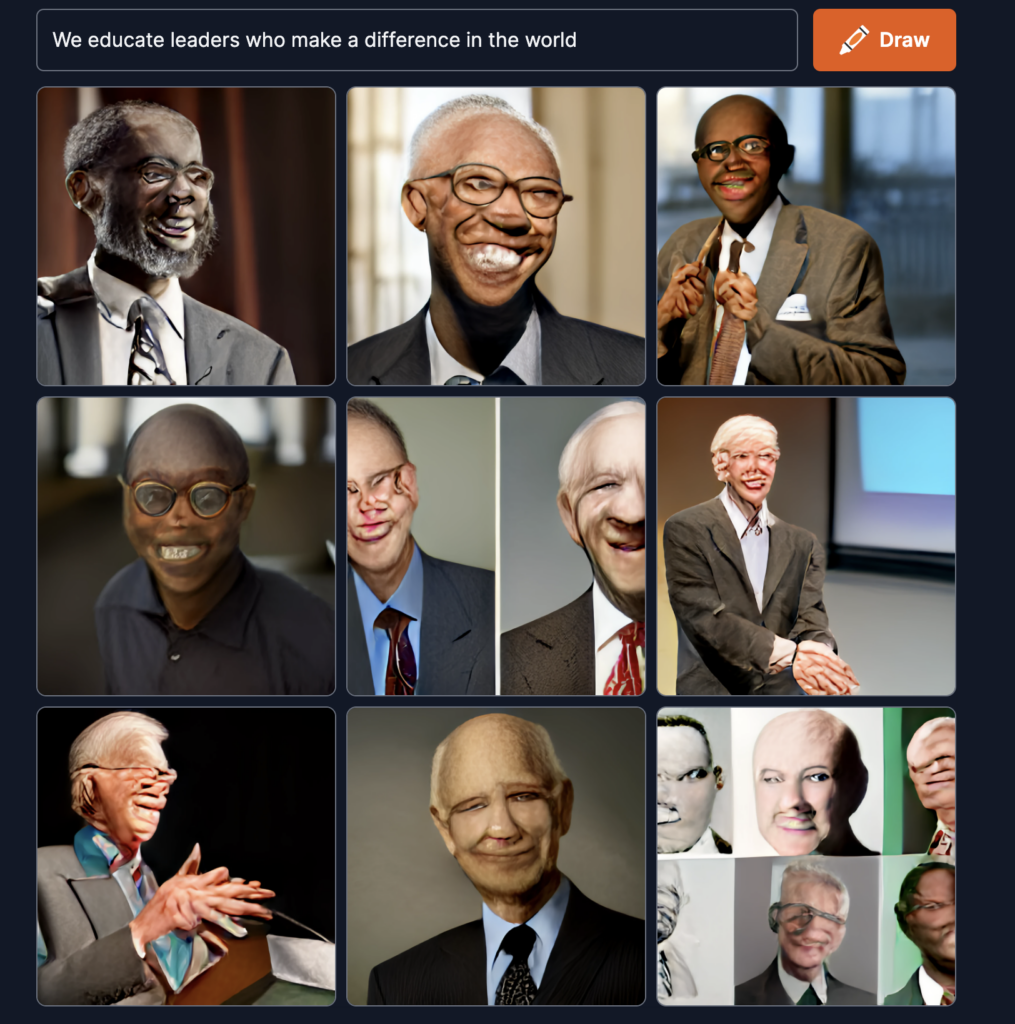

For this assignment, I decided to feed the HBS mission statement to craiyon.ai. I wanted to see how a computer assessed the following:

“We educate leaders who make a difference in the world”

With not much in mind for how this would turn out, I was truly horrified to see the following images after a few minutes of processing:

Once the initial shock of this AI’s horrifying attempt at recreating human features subsided, a sadness set in as I noticed that every image seemed to possess male features. Is the computer saying it thinks the mission statement is only applicable to men? To me, this was indicative of the inherent bias built into data by systemic and historic discrimination. For most of human history, education and leadership were out of the question for women. HBS only began admitting women in 1962 (nearly 100 years after MIT, Stanford, and Cornell, and even then it admitted only 8 women to the program).

Joy Buolamwini’s research at the MIT Media Lab uncovered deep algorithmic racial and gender bias in commercial AI systems from companies including Microsoft and IBM. The fact is that, while we are making strides socially, we are up against thousands of years of bias, and training models with that in mind is critical to developing AI that is inclusive of all.

I know this exceeds the word limit, but I think it’s an important point that warrants noting. Many in this class will go on to lead AI organizations, and I implore you all to consider how we avoid building bias into our computational systems.

Really the stuff of nightmares! I totally hate that all this AI generated were images of old men (at least there’s some melanin though!). Glasses also seem to be the norm, but there definitely should be some flags for AI creators from just the small learnings in this exercise about racial and gender bias in AI results.

Thank you so much for the post!

I think it is shocking to see that the AI is only showing men for this statement, even though the statement does not include the word “men”. There is not much that it could have picked up on – which means “leaders” is what it must have based the human component on. On top of the points mentioned in the post, I am surprised that the word “leaders” is only connected to male characters, because there are also women whose stories made history.

I was also surprised that all the characters are smiling. I wonder if the “making a difference” and “in the world” phrases made the tool look for more philanthropist-like images. Since I was curious, I input “we educate leaders” into the tool and found that the images were all of primary school classrooms. As I found in my experiment, I think the tool can’t blend two ideas into one, especially if one of them is an abstract concept. I agree on your points about bias – thanks for sharing!

Thank you for the post! It is really disappointing to see the gender bias in how the AI associates leaders with old men. It is upsetting that this comes from inherent bias in the data used to train the AI, and it should be pointed out to the creators.

This is so interesting, Katelyn. Yes, I agree with others – it’s fascinating to see 9 images of older men. I played with the Craiyon generator and when I type in “educators” it shows female school teachers and a mix of student genders, races, etc. I think the phrase that is triggering the images of older men is the phrase “leaders in the world.” I’m surprised there aren’t more references to the world in these images, such as flags behind the men, or other indications of traditional “world leadership”, such as images of people in military garb.

Incredible that in this day and age the algorithm still correlates leaders with men! Mindblowing. I think your point on fellow classmates going on to lead AI-enabled companies and how to think about inclusion and bias in the algorithm is so relevant and I’m glad you’ve raised it for all of us to have it front and center in our minds.

This is actually horrifying, and maybe doesn’t bode well that the AI attributes such monsters to HBS’s mantra! I think you raise a really interesting point about the assumed gender the AI is applying. It likely is, given it’s searching through years and years of leaders who have graduated from HBS, the vast majority of whom are men. Really interesting to consider the implications for HBS’s history of admissions.

Thank you so much for the post! If you read their FAQs, they address this bias topic, not in a great way honestly, and I think this happens in most AI/algorithms as we have seen in different cases.

This is so crazy, because if you searched for “CEO” on Google a while ago, just as with Craiyon, you would mostly get pictures of white men. Google has worked on tweaking its algorithm to address this diversity bias and make it more contemporary. To your point, we’ve seen that algorithms and AI are only as good as the data that goes into them, and these AI biases are a sad truth about our own history throughout the years.

For example, there is a huge team inside Google whose purpose is to address this issue and create “responsible AI” focused on diversity and respect. I think there is still a long way to go from here, but at least someone has started?

Your post gives me a lot to think about!

Once you get past the shock of the image, it becomes quite interesting… the suit, the age, the sex, the glasses, the power pose somewhat… I have yet to understand why the words you entered created such a horrifying picture. I cannot help but wonder (or hope?!) whether the algorithm was created intentionally to shock.