YouTube | Machines Cleaning Up Human Content

Every minute, over 400 hours of video are uploaded to YouTube. What role can AI and Machine Learning have in monitoring content to ensure it is safe and suitable for the platform and viewers?

The internet has democratized video content creation and distribution. Today, over 400 hours of video are uploaded to YouTube every minute [1]. While the speed and volume at which content is added to the platform have been key to YouTube’s success, they also pose many questions and risks: How can YouTube ensure that content is appropriate for advertisers and consumers? How can YouTube stop extremist organizations from using the site to spread hate?

One answer is artificial intelligence (AI) and machine learning. YouTube’s “anti-abuse machine learning algorithm” enables the company to combat some of the biggest threats to its business by automatically spotting content deemed inappropriate for the platform, such as child pornography, hate speech, and violence [2].

The need for a more robust review of YouTube content came into the public spotlight with an investigation by The Times in February 2017 [3]. The investigation highlighted how advertisements for global companies were being shown alongside indecent content. For example, a L’Oreal ad appeared on a video posted by hate preacher Steven Anderson [4]. In reaction, advertisers, including global consumer packaged goods (CPG) conglomerates such as Unilever, have threatened to pull their advertisements from YouTube, posing a significant threat to the platform’s key revenue stream [5].

YouTube has taken a few significant actions in response. For one, YouTube has committed to building out its content moderation workforce, “bringing the total number of people working to address violative content to 10,000 across Google by the end of 2018” [6]. This includes hiring full-time artificial intelligence and machine learning specialists as well as those with expertise in “violent extremism, counterterrorism, and human rights” [6]. In addition, YouTube is leveraging micro-labor sites such as Amazon’s Mechanical Turk to train its AI algorithms via human intelligence [7]. Workers are asked to watch a piece of content and indicate what it contains [7]. This labeling fuels the AI algorithm, teaching it to better review and identify content in the future [7].

In April 2018, YouTube published its first quarterly report on content moderation. Results have been promising: from October to December 2017, YouTube removed over 8 million indecent videos, 6.7 million of which were first flagged for review by machines rather than by humans [6]. Of the 6.7 million videos taken down, 76 percent were removed before they ever received a single view [6]. To further highlight the progress of its AI-fueled content moderation, YouTube has rolled out a reporting history dashboard that shows YouTube users the status of the videos they’ve flagged [6].
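To put those figures in proportion, here is a small back-of-the-envelope calculation. It is only a sketch: the report says "over 8 million" removals, so treating the total as exactly 8 million is an assumption, and the variable names are purely illustrative.

```python
# Figures quoted from YouTube's report for October to December 2017.
# ASSUMPTION: "over 8 million" is treated as exactly 8,000,000, so the
# machine-flagged share computed here is an approximate upper bound.
total_removed = 8_000_000
machine_flagged = 6_700_000
share_removed_before_any_view = 0.76  # applies to the machine-flagged videos

machine_share = machine_flagged / total_removed
human_flagged = total_removed - machine_flagged
removed_before_view = round(machine_flagged * share_removed_before_any_view)

print(f"Flagged first by machines: {machine_share:.0%}")
print(f"Flagged first by humans:   {human_flagged:,} videos")
print(f"Removed before any view:   {removed_before_view:,} videos")
```

Under that assumption, roughly five in six removed videos were first flagged by machines, and about 5.1 million videos came down before anyone watched them.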

YouTube has also refined the way it categorizes and sells content to advertisers via the Google Preferred offering, which comprises 5% of total video inventory [8]. Google Preferred is seen as the most premium video inventory, and YouTube highlights that all of its content is reviewed by humans before it is sold as an ad placement [8]. This suggests that human review is still considered superior to YouTube’s AI-enabled algorithms.

As YouTube looks to the future, it is important to carefully consider how the algorithms are being taught. This includes implementing practices that prevent biases in content review. As Jacob J. Hutt put it eloquently in a piece for the ACLU, machine learning’s role should not be to “aggregate our biases and mechanize them” [9]. YouTube should also be transparent with advertisers and consumers about what content it allows, as the lines are often blurry. For example, in March 2018, YouTube initially defended its decision to permit Neo-Nazi group Atomwaffen Division to publish videos on its platform, but later decided to remove them after receiving pressure from the Anti-Defamation League [7]. Further, the speed at which YouTube’s AI can review content will become increasingly important as live-streaming becomes more prevalent.

A few questions that remain to be answered include: What is YouTube’s responsibility in monitoring content vs. enabling freedom of speech? Will YouTube ever be able to entirely rely on its AI technology or will human content review always be needed? How else might YouTube be able to leverage AI to enhance its product offering – for example, through more targeted video recommendations to users, review of comments on video posts, or anything else? How are you seeing other digital media platforms, such as Facebook and Twitter, think through similar challenges?

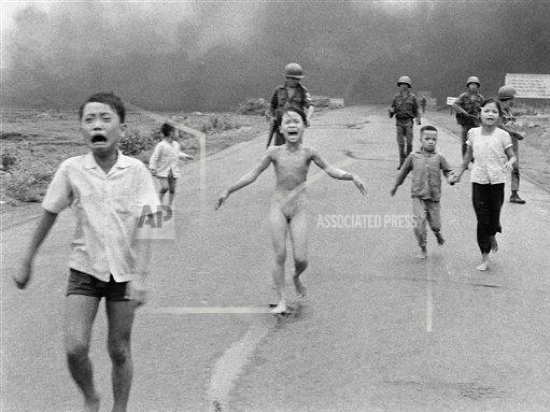

As you think through these questions, consider the iconic photograph by Nick Ut (1972), referred to as “The Napalm Girl” [10]. How might a human interpret this image as opposed to an AI algorithm? What are the implications?

(Word Count: 735).

Sources

[1] Nicas, J. (2018). YouTube Subjecting All ‘Preferred’ Content to Human Review. [online] Available at: https://www.wsj.com/articles/youtube-subjecting-all-preferred-content-to-human-review-1516143751.

[2] Meyer, D. (2018). AI Is Now YouTube’s Biggest Weapon Against the Spread of Offensive Videos. [online] Available at: http://fortune.com/2018/04/24/youtube-machine-learning-content-removal/.

[3] Mostrous, A. (2017). Big brands fund terror through online adverts. [online] Available at: https://www.thetimes.co.uk/article/big-brands-fund-terror-knnxfgb98.

[4] Vizard, S. (2017). Google under fire as brands pull advertising and ad industry demands action. [online] Available at: https://www.marketingweek.com/2017/03/17/google-ad-safety/.

[5] Marvin, G. (2018). A final call? Unilever threatens to pull ads from platforms swamped with ‘toxic’ content. [online] Available at: https://marketingland.com/final-call-unilever-threatens-pull-ads-platforms-swamped-toxic-content-234323.

[6] YouTube (2018). Official Blog. [online] Available at: https://youtube.googleblog.com/2018/04/more-information-faster-removals-more.html.

[7] Matsakis, L. (2018). A Window Into How YouTube Trains AI To Moderate Videos. [online] Available at: https://www.wired.com/story/youtube-mechanical-turk-content-moderation-ai/.

[8] Nicas, J. (2018). YouTube Subjecting All ‘Preferred’ Content to Human Review. [online] Available at: https://www.wsj.com/articles/youtube-subjecting-all-preferred-content-to-human-review-1516143751.

[9] Hutt, J. (2018). Why YouTube Shouldn’t Over-Rely on Artificial Intelligence to Police Its Platform. [online] Available at: https://www.aclu.org/blog/privacy-technology/internet-privacy/why-youtube-shouldnt-over-rely-artificial-intelligence.

[10] Ut, N. (1972). Napalm Girl Photo. [online] Available at: http://www.apimages.com/Collection/Landing/Photographer-Nick-Ut-The-Napalm-Girl-/ebfc0a860aa946ba9e77eb786d46207e.

Fascinating article! I think the most interesting part of this challenge is deciding where to draw the line with what is considered inappropriate for YouTube. Will there be a point at which the machine learning algorithm will become so advanced that it is considered the best and most unbiased judge for public content? Also, how will the machine learning algorithms change over time as cultural and social norms shift? I think these issues are especially challenging for a global product like YouTube that serves people from a wide range of geographies and cultures.

This certainly raises some interesting points. I personally am skeptical that human content review will ever be able to be removed completely given the significant levels of judgement that can come into play when considering whether content is appropriate or not – there can and will be grey areas that will require human intervention. As societal and social norms evolve, content that was once considered appropriate may shift to inappropriate, and vice versa (for example, many views that were in the past considered acceptable would absolutely be considered offensive and inappropriate today). While the statistic that machines were able to flag 6.7M out of 8M indecent videos is staggering, the fact that humans were still required to flag 1.3M videos indicates to me that there is still a role for human interaction in the foreseeable future, and humans will likely be required to update the algorithms over time as society evolves.

This certainly is a complex question – “appropriate” means something different to everyone, and the global reach of YouTube means that they will need to consider how content will be received across geographies. Given some of the concerns raised by the Google memo, I’m curious about the formal and informal structures that the management team has implemented to separate the internal culture and values of the company from the judgment of content they may not agree with. YouTube relies on the input of many diverse creators, so alienating people who want to share or consume controversial opinions could foster the development of other video hosting platforms and drive people off of YouTube. It sounds like they are investing heavily in building a strong team to tackle this challenge, but will they be able to attract and retain a diverse enough group of talent to approach this problem without bias?

This is a tricky, yet important topic. You addressed the sensitivity surrounding censorship, which is what I am also wary of. AI can indeed help people, and companies, operate more efficiently. Nevertheless, the potential for context-specific error is real, particularly because, as in this case, it can result in a company distorting the voice of even its most supportive content creators. Therefore, it is somewhat of a slippery slope. The relativity of offensiveness, for instance, makes it particularly difficult to decide what warrants being pulled. If YouTube “hushes” the wrong person, there could be significant backlash.

Having worked in the trust and security space at a cloud-computing firm, I can say the issues you’ve raised in this article are top of mind for tech companies that host content. AI/ML has helped content safety teams review video and image hashes more efficiently, but there is still a ton of sensitive content that requires human judgment and human review. One thing to consider is the role sound plays in assessing the appropriateness of the material. There is a national movement around technology companies working together to report child pornography to federal agencies, and a key part of identifying some of those materials is listening to what’s being conveyed in the videos. These hashes get stored in a shared database that AI/ML algorithms can scan and run matches against, but unfortunately, changing the video slightly (adding a filter or shortening it) can change the hash, making it difficult to catch re-uploaded content.
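To illustrate why a small change defeats exact matching, here is a minimal sketch. It assumes a plain SHA-256 digest stands in for the stored fingerprint; the comment above does not say which hashing scheme is actually used, and the function names, sample bytes, and in-memory "database" are all hypothetical.

```python
import hashlib

def fingerprint(data: bytes) -> str:
    """Return a hex digest for the raw bytes (SHA-256 is an assumption here)."""
    return hashlib.sha256(data).hexdigest()

# Hypothetical stand-in for a flagged video's raw bytes.
original = b"\x00\x01\x02 example video bytes"

# Hypothetical shared database of fingerprints for known flagged content.
known_hashes = {fingerprint(original)}

def is_known(data: bytes) -> bool:
    """Exact-match lookup: True only if the bytes are identical to a known file."""
    return fingerprint(data) in known_hashes

reupload_exact = bytes(original)   # byte-for-byte copy
reupload_trimmed = original[:-1]   # "shortened" by a single byte

print(is_known(reupload_exact))    # True
print(is_known(reupload_trimmed))  # False
```

Dropping a single byte is enough to produce a completely different digest, which is the re-upload problem described above.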

Until AI progresses to the point where machines can understand the nuances of language, humans must remain involved. We have witnessed how online content can be oppressive, offensive, and exploitative for political gain. Unless companies such as Facebook, Twitter, and YouTube control for hate speech, they risk losing their audience.

To answer your question – I believe YouTube has a clear responsibility to monitor content first and protect free speech second. As the company’s user base and content volume grow, this must become a company imperative. How costly will this be? Will it be worth it?

Really interesting piece. Of course, there should be no space for hate speech online and there are certainly risks associated with ignoring language that incites violence online. For instance, John Oliver has a good segment about the failure of Facebook to adequately remove hate speech, which he believes has contributed to violence in Myanmar. That being said, I worry deeply about some of the risks associated with training machines to remove content deemed inappropriate, in particular when organizations have specific political views. There have been several instances of sites like YouTube removing Conservative content from their site, claiming it was hate speech when it was obviously not…which makes me very concerned about the freedom we should all have to express both popular and unpopular views. This raises the question of who should be the arbiter of what is considered “Hate Speech”…and frankly, I’d rather it not be YouTube.

You raised real questions here. As much as we want to protect our younger ones from bad media, I am much more interested in the question you asked about “monitoring content and free speech”. This makes me take this question one level deeper: “Is YouTube playing God here?” Given that the world is such a diverse place and what is deemed inappropriate differs by race, ethnicity, geography, etc., how is YouTube deciding what is appropriate and what is not? Lastly, I am also concerned about the various biases that are being fed into the machine.

Ultimately, I do believe that this is a step in the right direction; I just hope YouTube is making the right data entry choices.

This is an interesting article. I think YouTube is doing a great job using AI technology to filter out inappropriate content. The photo that you posted at the very end poses an interesting question – is YouTube also eliminating free speech? I think AI and humans may interpret this photo very differently. But as we learned in class, I think it’s better to commit a type II error (“better to reject a good candidate than accept a bad one”) to ensure that YouTube is a healthy/safe place for people to browse videos and to reduce the risk of legal ramifications.

Interestingly enough this article reminded me of Watson’s ‘Toronto’ faux pas on Jeopardy. In the spirit of freedom of expression, I’d be concerned that YouTube’s AI would extrapolate its understanding of language to a point that it interprets mere discussion of a controversial topic as a showcase of support for indecent content. As an avid consumer of YouTube videos, I’ve already seen instances in which content creators have had their videos de-monetized simply by sharing their opinions on pop-culture. For this reason, I believe that there should always be a human element to AI so as not to inhibit creators when expressing their views.

Thanks for sharing this interesting information! I think it’s really cool that YouTube is using ML for this important issue. It can be very difficult and expensive for humans to review every video that’s posted on the site, so leveraging ML and AI to make the review process more efficient seems to make sense. Several of our classmates have voiced a concern about censorship, but I personally think making a Type 2 error is more costly than making a Type 1 error. Type 1 error is when you incorrectly reject a video which shouldn’t have been rejected, whereas Type 2 error is allowing a video to be on the site which shouldn’t be allowed. Because of the polarized political environment and the availability of firearms in the US, I think allowing a video that condones hate speech or violence could have real world consequences (e.g., the Pizzagate conspiracy).
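To make the two error types concrete, here is a tiny sketch that counts them using the definitions in the comment above. The five moderation decisions are made up purely for illustration and are not real YouTube data.

```python
# Each pair is (should_be_removed, was_removed).
# ASSUMPTION: these five decisions are invented examples, not real data.
decisions = [
    (True, True),    # bad video, removed: correct
    (False, True),   # acceptable video, removed: Type 1 error
    (True, False),   # bad video, left up: Type 2 error
    (False, False),  # acceptable video, left up: correct
    (False, False),  # acceptable video, left up: correct
]

# Type 1: a video that should have stayed up was rejected.
type_1_errors = sum(1 for bad, removed in decisions if not bad and removed)

# Type 2: a video that should have been rejected was allowed on the site.
type_2_errors = sum(1 for bad, removed in decisions if bad and not removed)

print(f"Type 1 errors (wrongly removed): {type_1_errors}")
print(f"Type 2 errors (wrongly allowed): {type_2_errors}")
```

A stricter filter generally lowers the second count at the expense of the first, which is the trade-off being debated in this thread.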