Woebot: The Robo-Therapist Supplying Comfort, Consolation, and Care at Scale

By leveraging mobile technology and NLP, Woebot, the chatbot therapist, promises to help more people cope with common mental health problems than healthcare providers can.

By the end of this blog post, your greatest fears or hopes may be realized.

Woebot Health, a technology company founded in 2017 by veteran clinical psychologist Dr. Alison Darcy, offers users on-demand mental health support from a chatbot named Woebot. Care is delivered as a text-based conversation between Woebot and a user in an iOS or Android mobile app. Woebot’s communication with users is rooted in the principles of Cognitive Behavioral Therapy (CBT), Interpersonal Psychotherapy, and Dialectical Behavior Therapy. The conversations are powered by AI’s Natural Language Processing (NLP) capabilities. Woebot reports that 1.5 million people had downloaded it as of September 2023 (4).

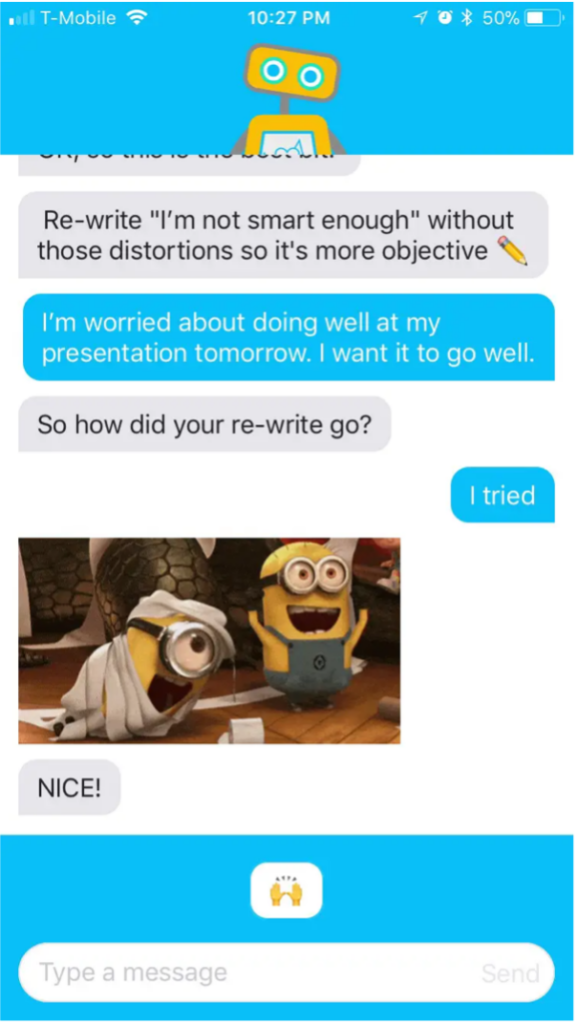

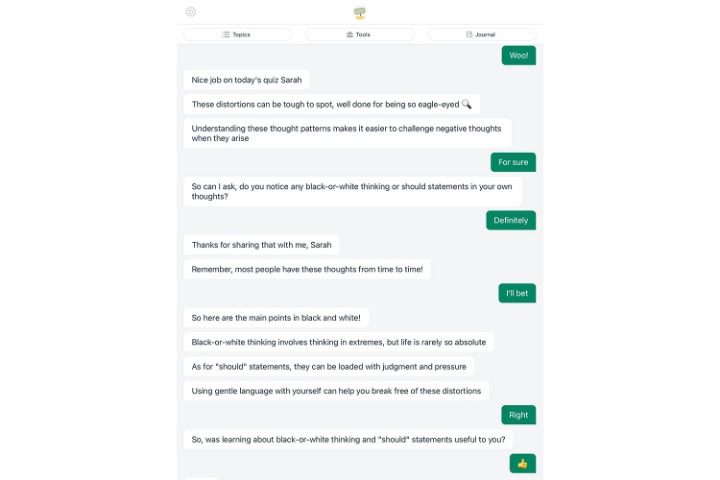

Screenshots and video in this post reflect real conversations between Woebot and its users. Users can chat with Woebot 24/7 and expect instant responses. Woebot can be configured to “nag” users into discussing how they feel at different points during the day, and users can choose a theme of support (sleep hygiene, anxiety, etc.). The app also features a graph for users to track their daily moods. Woebot’s content is targeted to three populations: adults, adolescents, and new mothers.

Woebot’s use of AI is limited, but evolving and expanding rapidly. Currently, every message Woebot sends comes from a bank of statements and questions pre-approved by conversational designers and mental health experts. AI’s role is scoped to learning users’ patterns, problems, and habits of speech. But Woebot has big plans to change that. In July 2023, Woebot announced that it would begin researching the safety and effectiveness of integrating Large Language Models (LLMs) into its technology (4, 5). Deploying LLMs (a type of generative AI) might mean that Woebot starts to craft its own messages. (Think of this foray as ChatGPT, but for managing your deepest fears and worst nightmares.) The upside is high and the downside is catastrophic, but the shift is probably inevitable.
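To make the “pre-approved bank” design concrete, here is a minimal Python sketch of how such a system could work. It is an illustration under stated assumptions, not Woebot’s actual implementation, which this post does not describe in detail: a toy keyword matcher stands in for Woebot’s NLP, and the names `APPROVED_RESPONSES`, `TOPIC_KEYWORDS`, `classify`, and `respond` are all hypothetical. The point it shows is that the AI only *chooses* among messages humans have already vetted; it never writes new ones.

```python
import re

# HYPOTHETICAL bank of pre-approved messages, keyed by topic.
# In the design described above, clinicians and conversational designers author these.
APPROVED_RESPONSES: dict[str, list[str]] = {
    "sleep": ["Sounds like sleep has been rough. Want to try a wind-down routine together?"],
    "anxiety": ["That sounds stressful. Can we look at the thought behind that worry?"],
    "fallback": ["Thanks for sharing that. Can you tell me a bit more?"],
}

# HYPOTHETICAL keyword lists: a toy stand-in (an assumption) for a trained NLP classifier.
TOPIC_KEYWORDS: dict[str, set[str]] = {
    "sleep": {"sleep", "insomnia", "tired", "awake"},
    "anxiety": {"anxious", "worried", "panic", "nervous"},
}


def classify(message: str) -> str:
    """Return the topic whose keywords overlap most with the message, else 'fallback'."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best_topic, best_score = "fallback", 0
    for topic, keywords in TOPIC_KEYWORDS.items():
        score = len(words & keywords)
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic


def respond(message: str) -> str:
    """Select (never generate) a reply: every possible output is a pre-approved string."""
    return APPROVED_RESPONSES[classify(message)][0]


print(respond("I can't sleep and I'm always tired"))
# prints: Sounds like sleep has been rough. Want to try a wind-down routine together?
```

Swapping the keyword matcher for a better classifier would change *which* approved message is chosen, but not *what* the bot is able to say. Letting an LLM write the reply instead would remove that guarantee, which is why the LLM research described above carries both the high upside and the catastrophic downside.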

Woebot’s success hinges on two premises: (1) contemporary demand for high-quality mental health care dwarfs the supply of human clinicians, and (2) the relationship Woebot can form with humans is comparable in depth to the one a person would form with a human therapist. The first point is industry-agnostic: one of the most common value propositions of software is that it scales the delivery of services. Analysis of the second point can lead one to think Woebot is a nightmare dressed like a daydream. A 2021 study conducted by Woebot, with a sample of 36,070 users over a two-week period, concluded that users felt empathetically connected to Woebot (6). (Supposedly, most users formed this connection within three to five days.) Most users interacted with Woebot every day, and their self-reported depressive symptoms diminished. These findings are echoed by thousands of positive reviews on the App Store and Google Play Store, where Woebot averages 4.7 and 4.1 stars (out of 5), respectively; people describe it as “funny” and full of “much insight” (7). Reviewers often praise Woebot’s mastery of reframing, a technique common in CBT. (For example, reframing might help a user characterize a particular outcome, like earning a poor grade, as a failure rather than calling himself or herself an abject failure.) Where Woebot falls short is in managing people through acute crises, such as suicidal thoughts. (Its NLP can detect alarming language, but it isn’t trained to be a crisis intervener.) Setting aside the questionable reliability of self-reported feelings and company-sponsored findings, the fact that people feel more connected to and comforted by a two-dimensional robot than by the three-dimensional human beings around them is profoundly sad.

[Figure: examples of Woebot’s descriptions of how its adult and maternal modes build trust (panel: MATERNAL)]

The good news is that sadness is Woebot’s gas and electric. (In case that joke didn’t land, it is a play on bread and butter.) Depression and anxiety don’t appear to be diminishing in frequency anytime soon, and if Woebot’s LLM experiments go well, it could start to replace human therapists. Obviating the need for human therapists will be a big challenge, though. To scale high-quality care, Woebot will need to hire many highly educated specialists in psychology, medicine, and data science. These experts are in chronic short supply, so shrinks can expect their couches to be kept warm for a while.

To use Woebot, a person must have a smartphone and either be enrolled in a research study Woebot is conducting or belong to one of Woebot’s partner organizations, which are mostly healthcare delivery or employee benefits companies, including Virtua Health, Curai Health, and PayrollPlans. This is a departure from the company’s previous business model, which allowed unaffiliated individuals to start a free trial before opting into a paid subscription plan. (Yours truly considered accepting a job as a software engineer with Woebot two or three years ago, when the app was more accessible.) It is unclear why leadership changed course.

This analysis would not be complete without a review of incipient government regulation. The intersection of healthcare and AI, like the intersection of AI and every other field, is nascent and dynamic. The companies that build the runway, though, are likely to shape how they are policed, and Woebot is no exception. Woebot boasts that many members of its regulatory compliance team previously worked at the Food and Drug Administration (8). (The infamous Sackler family used the same playbook at Purdue Pharma to keep lethal opioids on the market for as long as possible. Woebot isn’t selling heroin, but its strategy might help exclude competitors for a while. Because chatbots are proliferating across all verticals, Woebot might not be able to maintain its moat without regulation.) The organization obtains IRB approval for the studies it runs, and it promises not to share or sell data to advertisers (8). (Nothing stops it from doing so in the future, though.) It remains to be codified whether sacrosanct doctor-patient confidentiality will apply to conversations between humans and Woebot. The executive branch of the US federal government is laying out comprehensive guidelines and oversight for how the government itself will use AI; how that policy will influence private companies’ decision-making will be a highly consequential nail-biter.

That’s all! Now woe away.

Sources:

(2) https://www.verywellmind.com/i-tried-woebot-ai-therapy-app-review-7569025

(3) https://www.patreon.com/posts/72281734

(4) https://woebothealth.com/why-science-in-the-loop-is-the-next-breakthrough-for-mental-health/

(5) https://woebothealth.com/why-generative-ai-is-not-yet-ready-for-mental-healthcare/

(6) https://woebothealth.com/img/2021/05/Woebot-Health-Research-Bibliography_May-2021-1-1.pdf

(7) https://play.google.com/store/apps/details?id=com.woebot

(8) https://woebothealth.com/safety/

Thanks for the awesome post, Aliza! Your point about research showing, from a sample of 36,070 users over a two-week period, that users felt empathetically connected to Woebot within 3 to 5 days is interesting. Typically, (ethical) designers attempt to sustain some gap between AI and a real human to prevent users from feeling tricked; this type of tricking would even qualify as a dark pattern. This situation is interesting, though: it’s baked into the value proposition to feel as close to a human as possible, to the point of feeling emotionally attached. I wonder how management is handling this; I foresee an onslaught of lawsuits from users getting too attached to the AI.

This is super cool Aliza! But reading some of these chats is also a bit terrifying and reminds me of the Gen Z affliction of “therapy speak.” Do we think Woebot can actually improve mental health or nudge people into healthier patterns of thinking, or is this potentially empty and unlikely to resolve underlying issues? Or worse, could dependence on an AI bot make things worse in the long term?

This is a great post, Aliza!! Thanks for sharing. I’m definitely interested in understanding what doctor-patient confidentiality will look like from the regulatory side. Another thing I’m curious about: do you expect a product like Woebot to replace or be better than tools like 7 Cups (free online therapy, not necessarily with a licensed therapist but with an everyday human peer)?

Awesome post Aliza! This is both fascinating and giving me existential dread at the same time. It makes sense that, for the moment at least, they’re limiting responses to a pre-approved question bank until they can study whether their AI models can be deployed safely. I’m curious: how do you think they should go about running those future tests? I’m not sure whether the phased way clinical trials are conducted for drug development offers any precedent, but as you said, the downside could be really bad if they get it wrong.

That said, I love that it responded with a Minions meme. Guaranteed to improve someone’s mood.

I really enjoyed your post; you did a great job looking at all angles of the service! This makes me super uncomfortable and I’m not sure why. I’m kind of reminded of something we learned in MSO about the uncanny valley, where there’s a huge dip in people’s acceptance of AI as it gets close to “human-like,” and I wonder if that’s it.

Thanks for the amazing post, Aliza! The potential impact on traditional therapy models and the ethical considerations surrounding AI-driven mental health solutions are fascinating. I found the ‘nag’ element you mention particularly interesting. Seeking therapy with a human therapist generally involves a lot of self-work on one’s part, motivated by a personal connection with the therapist and by feeling understood. I guess I’m just curious to what extent Woebot can make a person feel that way and motivate them to work on themselves, especially in tough times. Have you found any data on this?

Great job Aliza! Obviously, using LLMs for anything nowadays is super trendy, as are counterarguments about safety and bias. I love that they’ve limited their user pool, since I imagine the downside of opening this app to the general public is just much too great (I can already see the stories that would get posted if people were able to game the software into producing scary responses). I actually think there’s a very valuable use case here for diagnosis, as opposed to treatment. It makes a ton of sense that an AI conversation buddy could make a reasonable inference about what types of issues are troubling someone before handing them off to a trained therapist. The treatment portion makes me uneasy, since I genuinely believe it requires human empathy. While Woebot may be able to simulate it, if the illusion cracks at any point, the patient might end up in an even worse state.

Interesting post Aliza! Thanks for introducing the platform. In one of my CS classes, our group is working on developing a similar chatbot for mental health. Unlike general-purpose chatbots like ChatGPT, it might require some algorithm tuning to communicate about mental health problems. I think it has the potential to integrate with enterprises’ employee systems so managers can better understand employees’ mental states. Beyond that, I think the UI could be designed to be more immersive and healing to provide comfort for users.

Thanks for the great post Aliza! It is really interesting to learn how effective the chatbot actually is. I am very curious if such features could be applied to the primary care setting, doing triage to assist PCPs in their workflow. Will def look more into this!