The Ethics of AI: Robotic Cars, Licensed to Kill

Google’s self-driving cars will make an immense difference in reducing road-related deaths. But in the case of an unavoidable accident, how do we train AI to make a judgment call on who should die?

Artificial intelligence is the driving force behind the next wave of computing innovation. It’s powering the Big Tech race to build the best smart assistant: Apple’s Siri, Amazon’s Alexa, IBM’s Watson, Google’s Assistant, Microsoft’s Cortana, and Facebook’s M, to name a few. It’s pivotal to the United States’ defense strategy; the Pentagon has pledged $18 billion to fund development of autonomous weapons over the next three years [1]. And it’s spurring competition in the automobile industry, as AI will (literally) drive autonomous vehicles.

AI has huge potential benefits for society. But AI needs to be trained by humans, and that comes with immense risk. Take Microsoft’s experiment with a chatbot it called Tay, an AI that spoke like a millennial and would learn from its interactions with humans on Twitter. “The more you talk the smarter Tay gets” [2]. Within 24 hours humans manipulated Tay into becoming a racist, homophobic, offensive chatbot.

Exhibit 1 – How Tay’s Tweets evolved through interaction with humans [3]

More urgently, the challenge of teaching AI sound judgment extends to autonomous vehicles, specifically to the ethical decisions that we’ll have to program into the robots’ algorithms. Given its leadership in the self-driving car space, Google will play a major role in shaping how AIs drive. Google’s business model is to design a car that can transport people safely at the push of a button. This would create undeniable value: more than 1.2 million people die worldwide each year in vehicular accidents, and in the US 94% of accidents are caused by human error [4]. However, the operating model through which Google executes on this presents difficult moral dilemmas.

Google will have to take a stance on how the car should make decisions regarding loss of human life. The big question is: how should we program the cars to behave when faced with an unavoidable accident? If a person or people have to die, how do we train the autonomous vehicle to decide whom to kill? As stated in the MIT Technology Review: “Should it minimize the loss of life, even if it means sacrificing the occupants, or should it protect the occupants at all costs? Should it choose between these extremes at random?…Who would buy a car programmed to sacrifice the owner?” [5]

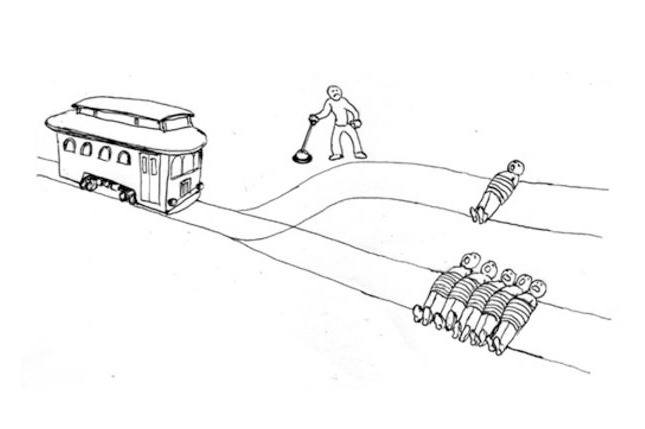

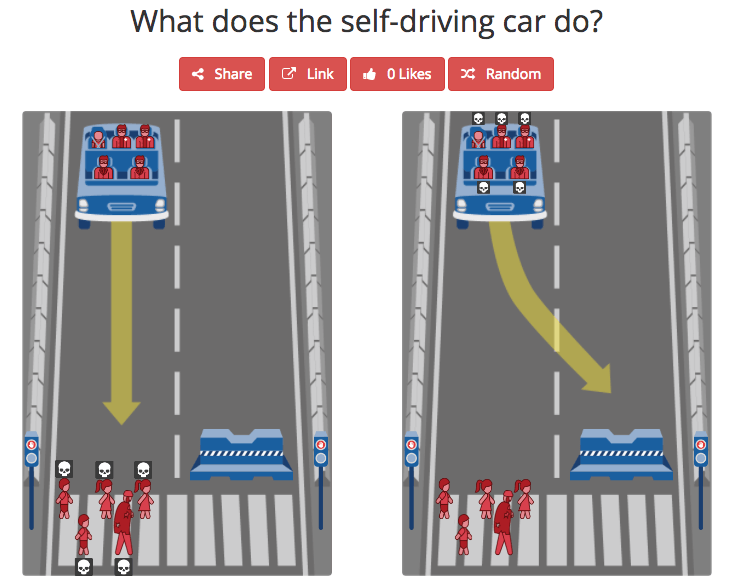

Consider the “Trolley Problem,” a thought exercise in ethics, in Exhibit 2. Do you favor intervention or nonintervention? Consider MIT’s Moral Machine Project, a website that presents moral dilemmas (example in Exhibit 3) involving driverless cars and forces you to pick your perceived lesser of two evils. In browsing through the scenarios on http://moralmachine.mit.edu/, I personally find that there’s no clear answer. It’s hugely uncomfortable to decide which parties should be killed.

Exhibit 2: The Trolley Problem: A runaway trolley is speeding down a track towards 5 people. If you pull the lever you can divert the trolley to another track where it would only kill 1 person. Do you pull the lever? [6]

Exhibit 3: MIT Moral Machine: Do nothing and kill the pedestrians who are violating the crosswalk signal — 1 grandma and 4 children? Or swerve and kill the car’s passengers — 4 adult kidnappers and the child they’re holding hostage? [7]

In a study on how people view these dilemmas, a group of computer science and psychology researchers discovered a consistent paradox: most people want to live in a world of utilitarian autonomous vehicles — vehicles that will minimize casualties, even if that means sacrificing their passengers for the greater good. However, respondents themselves would prefer to sit in an autonomous vehicle that protects their own life as a passenger at all costs [8]. It’s clear that there’s no objective algorithm to decide who should die. So it’s on us humans, the programmers at Google and other automakers, to design the system ourselves.

In addition to algorithmic design, we’ll have to redefine vehicular laws. Currently our adjudication relies on the “reasonable person” standard of driver negligence. But when an AI sits behind the steering wheel, does the “reasonable person” standard still apply? Are the programmers who designed the accident algorithm now liable?

There’s no question about the value of Google’s business model. The real question is how Google will operationalize it. Last month Microsoft, Google, Amazon, IBM and Facebook announced the Partnership on Artificial Intelligence to Benefit People and Society (PAIBPS) to support research and standard-setting [9]. But these companies are all competing in the same race to be the leader in AI. Can we trust that they’ll take the time to carefully think through the ethical dilemmas rather than accelerate to win the race? To allow autonomous vehicles to save lives, Silicon Valley will have to wrestle with the ethical dilemma of how cars take lives.


_________________

Citations:

[1] http://www.nytimes.com/2016/10/26/us/pentagon-artificial-intelligence-terminator.html

[2] http://qz.com/653084/microsofts-disastrous-tay-experiment-shows-the-hidden-dangers-of-ai/

[3] http://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

[4] https://www.google.com/selfdrivingcar/

[5] https://www.technologyreview.com/s/542626/why-self-driving-cars-must-be-programmed-to-kill/

[6] http://nymag.com/selectall/2016/08/trolley-problem-meme-tumblr-philosophy.html

[7] http://moralmachine.mit.edu/

[8] http://www.popularmechanics.com/cars/a21492/the-self-driving-dilemma/; http://science.sciencemag.org/content/352/6293/1573

[9] https://www.ft.com/content/dd320ca6-8a84-11e6-8cb7-e7ada1d123b1

Cover image: http://www.techradar.com/news/car-tech/google-self-driving-car-everything-you-need-to-know-1321548

This is really thought-provoking. I remember taking the Moral Machine test a while back and being surprised to learn some of the biases that I have in terms of who to save and who not to save. The paradox that you mention about having different standards for yourself and others applies in other places where individual good and societal good are in conflict. For instance, when splitting 8 pieces of pizza among 4 people, I’d rather be able to eat three slices but live in a society where we split the pizza evenly. Social norms of fairness (my fellow pizza eaters would judge me) and laws are guard-rails to optimize societal good, and I think autonomous cars are an area where government regulation would be beneficial.

I would rather have top-down rules around car decision-making than car companies competing on passenger vs. driver vs. pedestrian safety. It looks like the government is starting to think about that – http://www.theverge.com/2016/9/19/12981448/self-driving-car-guidelines-obama-foxx-dot-nhtsa mentions that the federal government is mandating data reporting around several topics, including “how vehicles are programmed to address conflict dilemmas on the road” – wonder how much of this will be decided by the federal government and when.

I agree with Alex: the decision of what the car should do cannot be left to the car company, because then car companies could compete for customers over who protects passengers the most. However, I think this will be very difficult to implement because (1) there is no legal right or wrong in the situations described in the post, so current law cannot be applied; (2) government cannot mandate exact forms of programming cars when there is no legal basis; and (3) situations are rarely as simple as in the examples, so it will be very difficult to follow through with actual punishment if a situation like this actually takes place.

Wow! I hadn’t thought of this yet with autonomous cars. I would definitely not want to be one of the engineers writing the code to decide which lives to take and which to save. However, if we are going down this dark and complex road, why not model the whole thing and see in which scenario more lives would be saved? What I mean is: model a whole state or city, for example, with an algorithm written under a few different ethical decision criteria (e.g. save the passengers, save the youngest kids, etc.) and see in aggregate which reality would leave us better off.

At the end of the day, I hope this would evolve slowly, naturally and in a contained fashion so we can have the time as a society to make sense of it and hit the brakes (pun intended) when needed.
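The aggregate comparison suggested in this comment could be sketched roughly as follows. This is only a toy illustration under loud assumptions: the `Dilemma` type, the two policy functions, the random scenario generator and its headcount ranges are all hypothetical and do not come from the post, from Google, or from MIT's Moral Machine. Each scenario is reduced to two headcounts, and each policy simply reports how many people would be harmed.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Dilemma:
    """A hypothetical unavoidable-accident scenario, reduced to two headcounts."""
    passengers: int   # people inside the vehicle
    pedestrians: int  # people in the vehicle's path


def protect_passengers(d: Dilemma) -> int:
    """Assumed policy: never sacrifice the occupants, so the pedestrians are harmed."""
    return d.pedestrians


def minimize_casualties(d: Dilemma) -> int:
    """Assumed utilitarian policy: harm whichever group is smaller."""
    return min(d.passengers, d.pedestrians)


def generate_dilemmas(n: int, seed: int = 0) -> list:
    """Draw n made-up scenarios; the ranges below are arbitrary, not real data."""
    rng = random.Random(seed)
    return [Dilemma(rng.randint(1, 4), rng.randint(1, 5)) for _ in range(n)]


def compare(policies: dict, dilemmas: list) -> dict:
    """Total casualties per policy over the same set of scenarios."""
    return {name: sum(policy(d) for d in dilemmas)
            for name, policy in policies.items()}


if __name__ == "__main__":
    scenarios = generate_dilemmas(1000)
    print(compare({"protect_passengers": protect_passengers,
                   "minimize_casualties": minimize_casualties}, scenarios))
```

By construction, the utilitarian total can never exceed the passenger-protecting total in this model, so the sketch says nothing about which rule is right. A realistic study would need real accident data and would still leave open the ethical question of whose lives the rule trades off.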

This is a fascinating issue, one that is becoming more prevalent as our society becomes increasingly digitized. However, it’s not the first time that the tech world has faced such a moral dilemma. When drones were first introduced as unmanned aerial vehicles in modern warfare, many came forward to condemn them and called into question the ethics of using a killing robot. For many philosophers, it presented a “moral hazard”: a situation in which greater risks are taken by individuals who are able to avoid shouldering the cost associated with these risks.[1] When the cost of taking risks is reduced, especially in a state of war, does it invite more justification for going beyond what is needed? Just because we ‘can’ doesn’t necessarily mean we ‘should’, and lowering the stakes in warfare is certainly dangerous territory, particularly when civilian casualties are oftentimes unavoidable. It is an important issue to be on the lookout for, and even with a top-down approach, there is unlikely ever to be a morally just answer.

[1] New York Times. “The Moral Hazard of Drones.” July 22, 2012. [http://opinionator.blogs.nytimes.com/2012/07/22/the-moral-hazard-of-drones/]

Very interesting post. I think this is a perfect example of how algorithms are inherently biased or flawed, given that their inputs are man-made. I am curious about the stat above that says “over 1.2 million people die worldwide from vehicular accidents, and in the US 94% of these are caused by human error”. How much of the human error is likely to be reduced by self-driving cars? In other words, will the benefits outweigh the cons of having autonomous vehicles on the road? I agree with your point about redefining vehicular laws. I certainly hope the policies that will be enacted to govern the algorithmic design and incentive structures will be for the good of all people impacted. Another social issue that will arise with autonomous vehicles is what will happen to the millions of drivers who will lose their jobs. This article, published a year or so ago, discusses trucking-related jobs (over 8.7 million as of the article’s publication): https://medium.com/basic-income/self-driving-trucks-are-going-to-hit-us-like-a-human-driven-truck-b8507d9c5961#.njzy9tgu6