Misunderstanding the American Electorate

How digitization is destroying our ability to predict election outcomes

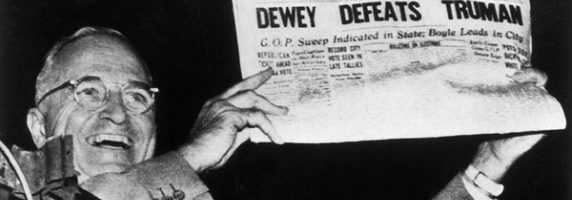

“THE POLLS ARE ALL WRONG. A STARTUP CALLED CIVIS IS OUR BEST HOPE TO FIX THEM,” screamed a Wired headline in June 2016. [1] Indeed, five months before the U.S. presidential election, cracks in the polling system had already begun to appear. Primary elections that were supposed to be landslides for Hillary Clinton were going the way of Bernie Sanders. In surprise after surprise, Donald Trump was pulling ahead of Ted Cruz. Of course, the biggest polling debacle was still a few months away.

But is Civis Analytics the answer?

Civis is a data science startup that aims to empower organizations to make better decisions using data and data analytics tools. Operationally, the company delivers on this promise by collecting and analyzing voting histories, consumer behavior, and other data on the American voting public. Mainly focused on political campaign targeting, the company has helped organizations answer questions like, what slogans best elicit Americans’ support for aiding Syrian refugees? And, how can the government identify and better target Americans in need of health insurance? [2]

The revenue opportunity for Civis is born out of the digital transformation forces that are creating a crisis for traditional polls. Mainly, the American public has become much harder to contact. In 1980, pollsters achieved a 70% response rate to (landline) phone calls, giving these firms at least a fighting chance to capture public sentiment. By 2016, the phone response rate had dropped to less than 1%. [3]

One major reason is the rapid adoption of cell phones, along with caller identification and voicemail technologies, which allow individuals to screen unknown callers. The National Health Interview Survey, a government survey conducted through at-home interviews, estimated that in 2014, 43 percent of the American public used only cell phones, and another 17 percent “mostly” used cell phones. [4]

Cell phone users are not only harder, but also more expensive, to reach. The 1991 Telephone Consumer Protection Act prohibits autodialing cell phones, a practice in which the call is passed to a live interviewer only when a consumer picks up. Thus, completing a 1,000-person cell phone poll, which may require dialing up to 20,000 random numbers, takes a great deal of paid interviewer time. [5] A landline-only polling campaign is cheaper but reaches, at best, half of the American public and generally the older, more conservative half. [6]
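To make the cost argument concrete, here is a rough back-of-the-envelope sketch. The 1,000 completed interviews and 20,000 dialed numbers come from the paragraph above; the 45 seconds of interviewer time per manual dial is purely an illustrative assumption, not a figure from any source cited here.

```python
def dials_per_complete(numbers_dialed: int, completed_interviews: int) -> float:
    """Average number of manual dials needed for one completed interview."""
    return numbers_dialed / completed_interviews


def interviewer_hours(numbers_dialed: int, seconds_per_dial: float) -> float:
    """Paid interviewer time spent dialing, in hours."""
    return numbers_dialed * seconds_per_dial / 3600


# Figures from the text: a 1,000-person poll may need up to 20,000 dials.
NUMBERS_DIALED = 20_000
COMPLETED_INTERVIEWS = 1_000

# Assumption for illustration only: each hand-dialed call costs 45 seconds.
ASSUMED_SECONDS_PER_DIAL = 45

ratio = dials_per_complete(NUMBERS_DIALED, COMPLETED_INTERVIEWS)
hours = interviewer_hours(NUMBERS_DIALED, ASSUMED_SECONDS_PER_DIAL)

print(f"Dials per completed interview: {ratio:.0f}")
print(f"Completion rate: {1 / ratio:.0%}")
print(f"Interviewer hours spent dialing (assumed): {hours:.0f}")
```

Under these assumptions, most of the paid time goes to calls that never become interviews, which is the expense an autodialer would otherwise absorb.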

Polling firms have thus turned to the internet to achieve larger sample sizes at lower cost. The fundamental problem is that there is no way to draw a randomized sample of the American population from the internet. Not everyone has an online presence, and of those who do, the ones most likely to complete online surveys are generally younger and more liberal. [7]

Thus, the good polling firms try to achieve a balance between more conservative, landline phone users and more liberal, online responders. It seems no one has achieved a good mix yet.

Enter Civis, the new great hope for campaign managers. Rather than relying solely on poll responses, the company is aggregating voting histories, employment, residence, consumer behavior, and other data on America’s 200 million voters. To answer the question of which slogans best elicit support for Syrian refugees, for example, the company still needs an initial round of telephone surveys. But, it can elicit opinions from a significantly smaller sample of individuals, identify which attributes (like geography and income) are likely predictors of their opinion, and extrapolate these findings to the rest of the voting public.
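The survey-then-extrapolate idea can be sketched in a few lines. This is a deliberately simplified illustration, not Civis's actual method: it estimates support within each attribute group from a small survey, then weights those group rates by how common each group is in the full voter file. The group labels, survey responses, and population counts below are all made up for the example.

```python
from collections import defaultdict


def support_by_group(responses):
    """Share of supporters within each attribute group.

    responses: iterable of (group, supports) pairs, where supports is a bool.
    """
    totals = defaultdict(int)
    supporters = defaultdict(int)
    for group, supports in responses:
        totals[group] += 1
        supporters[group] += int(supports)
    return {group: supporters[group] / totals[group] for group in totals}


def extrapolate(rates, population_counts):
    """Weight each group's surveyed rate by its size in the wider population.

    Groups that were never surveyed are skipped, since no rate exists for them.
    """
    covered = {g: n for g, n in population_counts.items() if g in rates}
    total = sum(covered.values())
    if total == 0:
        raise ValueError("no surveyed group appears in the population data")
    return sum(rates[g] * n for g, n in covered.items()) / total


# Hypothetical small survey: the sample over-represents rural respondents.
survey = [
    ("urban", True), ("urban", True), ("urban", True), ("urban", False),
    ("rural", True), ("rural", False), ("rural", False), ("rural", False),
]

# Hypothetical voter-file counts: the real population is 60% urban.
voter_file = {"urban": 600, "rural": 400}

rates = support_by_group(survey)
raw_share = sum(supports for _, supports in survey) / len(survey)
estimate = extrapolate(rates, voter_file)

print(f"Raw survey support: {raw_share:.2f}")
print(f"Extrapolated support: {estimate:.2f}")
```

The sketch also shows the weakness discussed later in the piece: every group rate rests on only a handful of respondents, so any bias in who answered the phone is carried straight into the extrapolated figure.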

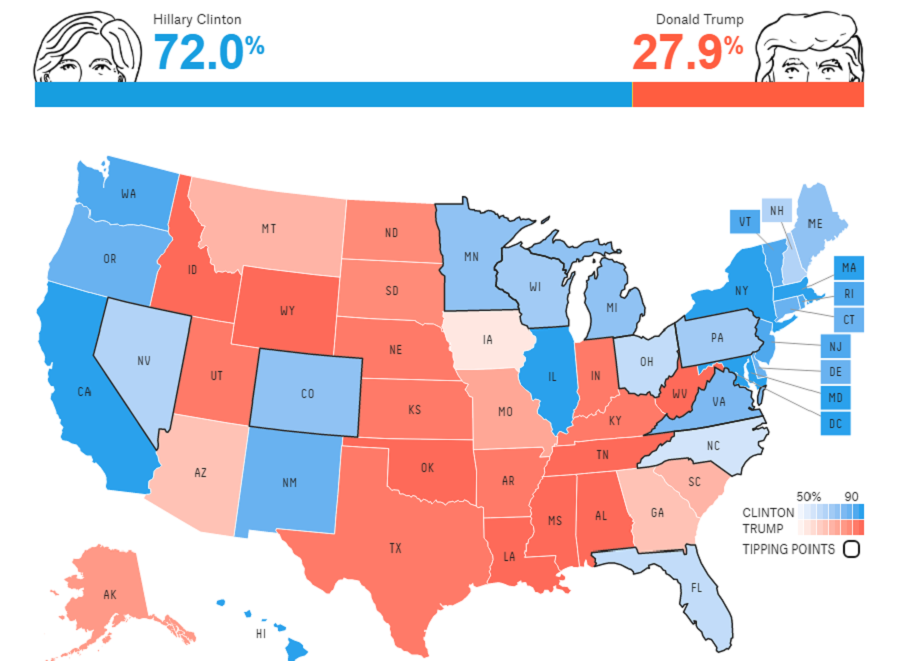

On November 9, 2016, amid the liberal hand-wringing and finger-pointing, polling firms were quickly dismissed, and Civis and other “big data” competitors were named their successors. But does innovation in the operating model – namely, using big data instead of traditional polling – help Civis “empower organizations to make better decisions”? Perhaps not. For one, Civis does not appear to have solved the fundamental problem of drawing a randomized sample, since it is still reliant on an initial set of telephone surveys. Indeed, the risk of bias may be exacerbated by the significantly smaller sample size. Furthermore, the company is relying on past behavior to predict voting outcomes – a practice that the recent election should caution against.

The answer to better polling may not lie in greater digitalization after all. Instead, companies like Civis should consider going back to basics: having more conversations with the American public to understand priorities, emotions, and nuances behind voting decisions. Conducting the extensive on-the-ground or phone-based surveys necessary to obtain a representative sample may be costly, but understanding political preferences may be one space where greater reliance on technology destroys value.

[1] Graff, Garrett. “The Polls are all Wrong. A Startup called Civis is our Best Hope to Fix Them.” Wired. 6 June 2016. Available at: https://www.wired.com/2016/06/civis-election-polling-clinton-sanders-trump/ [Accessed 18 November 2016].

[2] Civis Analytics. “About Civis”. Available at: https://civisanalytics.com/. [Accessed 18 November 2016].

[3] Graff, Ibid.

[4] National Health Interview Survey. “Wireless Substitution: Early Release of Estimates From the National Health Interview Survey, January-June 2015.” Centers for Disease Control and Prevention. Available at: www.cdc.gov/nchs/data/nhis/earlyrelease/wireless201512.pdf. [Accessed 17 November 2016].

[5] Zukin, Cliff. “What’s the Matter with Polling?” The New York Times. 20 June 2015. Available at: http://www.nytimes.com/2015/06/21/opinion/sunday/whats-the-matter-with-polling.html. [Accessed 17 November 2016].

[6] Lepore, Jill. “Politics and the New Machine”. The New Yorker. 16 November 2015. Available at: http://www.newyorker.com/magazine/2015/11/16/politics-and-the-new-machine. [Accessed 17 November 2016].

[7] Kennedy, Courtney, et al. “Evaluating Online Nonprobability Surveys”. Pew Research Center. 2 May 2016. Available at: http://www.pewresearch.org/2016/05/02/evaluating-online-nonprobability-surveys/. [Accessed 18 November 2016].

I agree that it would be unwise to base all predictions on voters’ historical decisions. However, I worry that you also run into a significant bias when people are asked to report who they plan to vote for: if a person lives in a community where one candidate is more socially acceptable than another, they might report a preference for that candidate but actually vote in private for the other. Therefore, I think a combination of the two is needed. And I’d argue that rather than “go back to the basics,” companies like Civis should strike a balance by using both methodologies.

Not only has digitization been obstructive and counterproductive in this case, but it may also have had an external effect on the very messages that polling is meant to measure. When polling is accurate, messages are constructed to reflect the sentiment of the American electorate. When polling is skewed by inaccurate digital results, candidates may change their message to reflect the inaccurate measurements. There is likely a compounding effect: politicians adjust further to reflect their polling results, leading to stronger polls reflecting those messages, and so the spiral continues.

The negative correlation between the recency and accuracy of polls doesn’t necessarily imply that going back to basics will improve poll reliability. Certain trends may limit the ability of any poll to accurately predict outcomes – e.g., priming poll respondents through repeated measurements, bombarding poll respondents with increasingly polarized and inaccurate information from “news” outlets, etc. By continuing to focus on the least informed cohort of the general electorate, which is becoming increasingly difficult to predict given the aforementioned systemic problems, digital and nondigital polling methods will likely both suffer from the same fundamental errors.

I think over time it has become quite evident that what consumers or voters report often differs drastically from what they actually did or will do. I therefore believe that the future will not lie in asking people (through whichever method) but in finding out about them. I am convinced that digital technologies are the only efficient way of doing this (living with every person surveyed for a week and observing their behavior is simply far too expensive). I agree that Civis may not have found a great solution yet, but I am not sure that going back to basics is the answer given how much evidence we have of people misreporting (intentionally or unintentionally).

What a timely discussion. I agree with Sabine’s point on the attitude-action gap and with you (and others) that past voting histories are certainly not the most intellectually sound predictor of future political decisions. That being said, I believe Civis’ issue is an operating model one, not a business model flaw. The company seems to provide an alternative to ‘traditional’ polling methodologies to generate a more complete sense of the American voting public; that is its business model. How it chooses to execute on that (whether past voting histories or employment data) will define the value of its insights. Therefore, I would argue that the capability to parse and analyze large volumes of ID’ed data points can be applied to new and more expansive data sets (consumer purchasing data, entertainment and ticketing, or hospital visits) to provide a much more holistic view of the US voter. Past voting histories may end up being just 1 of 217 predictors that Civis focuses on.

I am a bit more optimistic about firms like Civis because I think they can help solve one of the big problems that polling still has: overcoming the unconscious biases of the pollsters themselves. Making the process more scientific and objective can help alleviate some of these tensions, but not all of them. Pollster bias can really affect results, as pollsters optimize the polls for variables that they have determined to be important but that may not be as important to the people they are polling. Building on Alejandro’s point, I think it is also important to realize that there is now no going back to less poll bombardment. The trend going forward will be more poll results every day up to election day, and these are having very strong effects on voter turnout: supporters of the candidate losing in the polls may be more inclined to vote, whereas those who see their candidate winning by a comfortable margin may feel more inclined to stay home. So far, this effect hasn’t been studied in depth and hasn’t been built into predictive models to forecast the shift from poll to election, which could help bring more accurate results in the future.

Jaime

Do you think that more conversations with the electorate would have predicted the outcome of this past election?

Nate Silver (FiveThirtyEight’s founder and editor in chief) was the one who consistently said that Trump had a reasonable chance of winning the election, whereas his competitors were giving Trump single-digit odds. The polls were off, but he uses a probabilistic model for exactly this reason. There were a few things that broke in Trump’s favor on election day, and that proved to be the difference.

The polls ended up being off by 2 percentage points [1], which is within the commonly accepted margin of error. Even though the outcome surprised a lot of people, this shouldn’t be remembered as a polling error, but rather as a reminder that polls are inherently an input to a probabilistic model, not a deterministic one.

Source:

[1] http://fivethirtyeight.com/features/what-a-difference-2-percentage-points-makes/

I disagree with the notion that polling companies are doomed. On Election Day, Nate Silver, the renowned statistician of FiveThirtyEight.com, predicted a 70 percent chance of a Hillary victory, but Trump won. That’s not a failure of the statistics – there was, after all, a thirty percent chance of a Trump victory, and that’s what happened.

I also believe that to a certain extent there will always be holes in the polling data that are difficult to ascertain from the front end. In my opinion, voting history is not always indicative of future voting. The American populace and its opinions are a fast-moving target, and as we saw this November, there is always room for surprises when it comes to elections. I just don’t see how Civis is doing anything different that will allow it to accurately predict outcomes that go against historical statistical evidence.