Intel Inside or on the Outside? Exploring the impact of artificial intelligence on the supply and design of processor chips

ARTIFICIAL INTEL-LIGENCE

Digitalization – specifically, the advent of artificial intelligence and the Internet of Things – has led to increased pressure on the computing supply chain. Chipmakers, in particular, have seen growing customer demand for greater computing power and product customization, as microprocessors are increasingly tested by complex algorithms across a widening variety of connected devices.

This presents a challenge to Intel. The company first established dominance in the semiconductor industry by producing Central Processing Units (CPUs) for PCs, and it currently holds an 80% share of the global market [1]. However, original equipment manufacturers (OEMs) have increasingly shifted their attention from CPUs to Graphics Processing Units (GPUs), threatening Intel’s stronghold as an original component manufacturer (OCM) in the computer supply chain.

Historically, computing power was determined by the number of CPUs and the number of cores per processing unit. CPUs tackle calculations in sequential order, and the number of CPUs influences application performance and database throughput. The rise of AI has generated demand for processors that can perform matrix-based calculations in parallel, such as GPUs. GPUs are better suited to deep learning techniques such as neural networks, and they have accelerated the integration of AI into platforms ranging from autonomous cars to Alexa.
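To make the sequential-versus-parallel distinction concrete, here is a toy sketch (not how GPUs are actually programmed). Multiplying two matrices, the core operation in neural networks, breaks down into many dot products, one per output element, and none of them depends on another. The function names `dot`, `matmul_sequential` and `matmul_parallel` are hypothetical, written only for illustration. Python threads will not actually run this faster because of the interpreter lock; the point is that the tasks share no intermediate results, which is what lets a chip with thousands of simple cores work on them simultaneously.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def dot(row, col):
    """One output element: the dot product of a row of A and a column of B."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(A, B):
    """CPU-style: compute each output element one after another."""
    cols = list(zip(*B))  # transpose B so its columns are easy to iterate
    return [[dot(row, col) for col in cols] for row in A]


def matmul_parallel(A, B, workers=4):
    """GPU-style in spirit: hand every output element to a pool of workers.

    Each (i, j) task reads A and B but writes only its own result, so the
    tasks can run in any order, or all at once, without coordination.
    """
    cols = list(zip(*B))
    tasks = list(product(range(len(A)), range(len(cols))))  # row-major (i, j)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as `tasks`
        values = list(pool.map(lambda ij: dot(A[ij[0]], cols[ij[1]]), tasks))
    n_cols = len(cols)
    return [values[i * n_cols:(i + 1) * n_cols] for i in range(len(A))]


A = [[1, 2], [3, 4], [5, 6]]
B = [[7, 8, 9], [10, 11, 12]]
print(matmul_sequential(A, B))  # [[27, 30, 33], [61, 68, 75], [95, 106, 117]]
print(matmul_parallel(A, B))    # same result, computed as 9 independent tasks
```

A 3×2 by 2×3 product yields nine independent tasks; a layer in a real neural network can yield millions, which is why hardware built for wide parallelism suits this workload.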

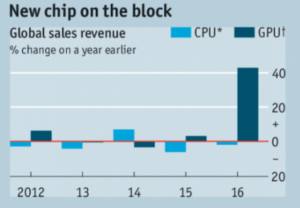

Nvidia is the current leader in GPUs, and the category is growing rapidly within the chip market, per the chart below. Nvidia also continues to innovate on its product offering: its latest GPUs contain 3,584 cores, versus 28 in Intel’s top-end CPUs [2].
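In raw core count, the gap works out as follows. The ratio describes how many operations can run side by side, not how fast each one runs: a GPU core is far simpler than a CPU core, so this should not be read as a 128-fold speedup.

$$
\frac{3{,}584 \ \text{GPU cores}}{28 \ \text{CPU cores}} = 128
$$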

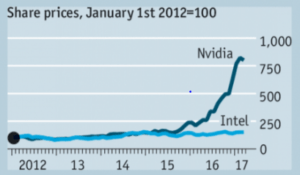

Correspondingly, Nvidia’s share price has risen in recent years, while Intel’s has remained stagnant, as seen below. Intel risks falling behind if it does not respond and adapt to the changing demands of its customers in the supply chain.

CHIP ON THE SHOULDER

Intel has made efforts to expand its role in the microprocessor value chain by acquiring intellectual property and talent (in the short term) and building out infrastructure to sustain product innovation (in the medium term).

Responding to the rise of GPUs, Intel has made strategic additions to its organization to target the graphics market. In November 2017, Intel announced that it had hired Raja Koduri, the former graphics head of competitor AMD. Koduri will run the new Core and Visual Computing Group to “design high-end discrete graphics for a broad range of computing segments” [5]. According to Murthy Renduchintala, Intel’s Chief Engineering Officer, these changes are part of Intel’s “plans to expand [their] computing and graphics capabilities” given the acceleration of AI [6].

At the same time, Intel has made acquisitions to advance its offering in the chip market, hoping to direct the AI supply chain away from GPUs. In August 2016, Intel agreed to spend $400 million to buy Nervana Systems and its machine learning assets, including a customized computer chip [7]. Intel lent its production might to Nervana’s proprietary technology and was able to bring an AI-targeted chip to market soon after: in October 2017, Intel CEO Brian Krzanich announced the launch of the Intel Nervana Neural Network Processor (NNP), which Intel described as the first silicon for neural network processing [8].

Intel is also investing in infrastructure to prepare for further product innovation as customer needs evolve with the growth of AI. In March 2017, Intel announced the creation of an applied AI research lab to “build chips that the Googles and Facebooks will want” [9], with “an emphasis on research – three, five, seven years out” [10].

NETWORK CONNECTION

Intel could proactively partner with its customers to position itself for trends arising from the adoption of AI and the ‘Industrial Internet’ [11]. As businesses shift focus from products to outcomes (such as providing efficient cognitive computing), ecosystems are expanding and blurring the boundaries between software and hardware [12], presenting opportunities for Intel to collaborate with its computing customers on AI-specific products.

Google recently developed its own chip, the Tensor Processing Unit (TPU), in response to its own “fast-growing computational demands” [13]. In-house development by OEMs poses a threat to Intel, as its chips could be phased out of the supply chain. However, Intel could mitigate this threat, and possibly create more value within the computing system, by partnering with its customers. It could, for example, combine Google’s vision of AI with its own expertise in chip design and manufacturing to produce customized processors. Such partnerships could help ensure that OEMs receive components tailored to their evolving needs and that Intel remains a relevant and defining player in the computing systems supply chain.

As Intel considers its place in the long term, it is worth asking: what is the future of the computing supply chain? Will there remain distinct OCMs and OEMs, or will the industry revert to vertical integration? Or, much like the neural networks that yielded such change, will buyers and suppliers be linked through interconnected nodes, with the output of one informing the inputs of others?

(800 words)

Footnotes

[1] Trefis, “What to Make of the Intel-AMD Deal”, Forbes, November 7 2017, https://www.forbes.com/sites/greatspeculations/2017/11/07/what-to-make-of-the-intel-amd-deal/#26f9f7b36f09

[2] Janakiram MSV, “In the Era of Artificial Intelligence, GPUs are the new CPUs”, Forbes, August 7 2017, https://www.forbes.com/sites/janakirammsv/2017/08/07/in-the-era-of-artificial-intelligence-gpus-are-the-new-cpus/2/#6de338484efa

[3] Multiple, “The rise of artificial intelligence is creating new variety in the chip market, and trouble for Intel”, The Economist, February 25 2017, https://www.economist.com/news/business/21717430-success-nvidia-and-its-new-computing-chip-signals-rapid-change-it-architecture

[4] Multiple, “The rise of artificial intelligence is creating new variety in the chip market, and trouble for Intel”, The Economist, February 25 2017, https://www.economist.com/news/business/21717430-success-nvidia-and-its-new-computing-chip-signals-rapid-change-it-architecture

[5] Mark Hachman, “Intel hires Radeon boss Raja Koduri to challenge AMD, Nvidia in high-end discrete graphics”, PC World, November 9 2017, https://www.pcworld.com/article/3236669/components-graphics/intel-hires-radeon-boss-raja-koduri-to-challenge-amd-nvidia-in-high-end-discrete-graphics.html

[6] Mark Hachman, “Intel hires Radeon boss Raja Koduri to challenge AMD, Nvidia in high-end discrete graphics”, PC World, November 9 2017, https://www.pcworld.com/article/3236669/components-graphics/intel-hires-radeon-boss-raja-koduri-to-challenge-amd-nvidia-in-high-end-discrete-graphics.html

[7] Aaron Pressman, “Why Intel Bought Artificial Intelligence Startup Nervana Systems”, Fortune, August 9 2016, http://fortune.com/2016/08/09/intel-machine-learning-nervana/

[8] Intel, “Intel Pioneers New Technologies to Advance Artificial Intelligence”, Intel Newsroom, October 17 2017, https://newsroom.intel.com/editorials/intel-pioneers-new-technologies-advance-artificial-intelligence/

[9] Cade Metz, “The Battle for Top AI talent only gets tougher from here”, Wired, March 23 2017, https://www.wired.com/2017/03/intel-just-jumped-fierce-competition-ai-talent/

[10] Cade Metz, “The Battle for Top AI talent only gets tougher from here”, Wired, March 23 2017, https://www.wired.com/2017/03/intel-just-jumped-fierce-competition-ai-talent/

[11] World Economic Forum, “Industrial Internet of Things: Unleashing the Potential of Connected Products and Services”, 2015, http://reports.weforum.org/industrial-internet-of-things/

[12] World Economic Forum, “Industrial Internet of Things: Unleashing the Potential of Connected Products and Services”, 2015, http://reports.weforum.org/industrial-internet-of-things/

[13] Kez Sato, Cliff Young, David Patterson, “An in-depth look at Google’s first Tensor Processing Unit (TPU)”, Google Cloud Platform, May 12 2017, https://cloud.google.com/blog/big-data/2017/05/an-in-depth-look-at-googles-first-tensor-processing-unit-tpu

Hi chloeho, you made a great analysis of the GPU industry and how big players like Intel and Google are competing in it. I feel that in the future there will be two major kinds of player. One is the integrated player like Intel (or potentially Google), which can integrate both upstream and downstream and take a large portion of market share. But there will still be small, smart players in the market that develop one small part of the product, but with exceptional quality and sophistication.

In general, I feel the semiconductor industry is one of the most innovative and also most competitive industries; faster and smaller chips come out every single year. All the players are under a lot of pressure to keep innovating. It will be very interesting to see what happens to this industry in the end.

This is a wonderful piece, Chloe – I feel more informed about a topic that I previously was not aware of. While reading, I was struck by both Intel’s market leadership and their reaction to innovative threats.

The fact that Intel has 80% global market share in any area is impressive, and I believe it speaks to their history of leadership in innovation. 80% was surprising to me because it represents a near monopoly, and I would have expected other players to enter the market given the demand for CPUs and the ability of technology companies to reverse engineer. This leads me to believe that patents on intellectual property play a major role in this industry, which might be a barrier to Intel entering the GPU market. For this reason, I think that acquiring smaller competitors for their intellectual property is an effective supply chain solution.

Despite the tactical moves Intel has made in its supply chain thus far, and your point that they are “hoping to direct the AI supply chain away from GPUs,” I wonder whether they are doing enough to anticipate future digitization. This concerns me because it sounds like Intel continues to excel at incremental innovation, but I wonder if they are investing enough resources in more transformational innovation. Surely there is a balance between R&D spend and future market prominence, but I think Intel would be remiss to ignore nascent forces in the market. Hopefully, they will continue to innovate and leverage their scale to deliver disruptive digitization technologies in the future.

Hey Chloe, awesome topic and write-up! I’d add just a couple thoughts.

The first concerns the size limits that traditional processors are currently running up against. Transistors are quickly approaching the point at which quantum mechanics won’t allow them to function properly anymore, which could cause Moore’s Law to break down (https://www.technologyreview.com/s/601441/moores-law-is-dead-now-what/). This could very well force engineers to devise new solutions to keep chip companies like Intel and AMD competitive.

It also remains to be seen whether radical new technologies, like neuromorphic chips, will gain a foothold. Prototypes like IBM’s TrueNorth chip attempt to more accurately mimic the circuitry of the human brain, and thus use far less energy than traditional processors (http://science.sciencemag.org/content/345/6197/668). Could they be the future? Only time will tell, but I’d argue that Intel should keep an eye out.