Facebook’s Negotiating Chatbots – Deal or No Deal?

With machine learning, Facebook has built robots that can negotiate and even lie; what are the business and societal implications for Facebook and its users?

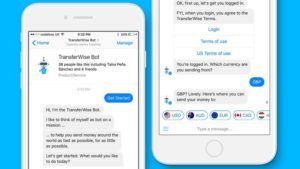

Chatbots, which simulate human conversation through natural language processing and machine learning, have recently exploded onto the tech scene, with Facebook at the forefront of both AI innovation and commercial opportunity. For businesses, chatbots offer a seamless way to reduce operating costs and improve customer experience in functions such as sales, marketing, and customer support. For example, through the platform chatfuel.com, one can set up a simplified ticket reselling bot on Messenger for HBS students in under an hour. For Facebook, innovating on chatbots offers enormous commercial potential and an opportunity to gather extensive data on its expansive user and customer base. [1] In the past two years, Facebook has enabled companies to integrate chatbots on its Messenger platform, acquired natural language processing startup Ozlo, and released ParlAI, a framework that combines different approaches to machine dialogue and natural language data sets to allow developers to build more naturalistic and seamless chatbots. [2] Facebook now boasts over 100,000 bots on Messenger, which allow users to do anything from transferring money to ordering pizza. [3]

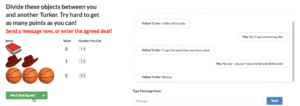

In the long term, Facebook CEO Mark Zuckerberg has characterized his goal as allowing users to “message a business just the way you would message a friend.” [4] This suggests that Facebook wants to master true language and conversational development in the field of AI, a challenge that has largely eluded even the most advanced companies and academics in the field. [5] Facebook’s Artificial Intelligence Research group (FAIR) has developed many notable and controversial advancements in the field of chatbots and how they interact with users. [6] One recent breakthrough by this team is the ability for chatbots to negotiate. This is particularly challenging because it requires chatbots to interpret conversations, infer the motives of the opposing party, and produce sentences that maximize the chatbot’s end goals for the negotiation. In a paper released with the Georgia Institute of Technology, Facebook details how it trained bots, or “dialog agents,” through a supervised learning method on an extensive dataset of negotiation dialogues between real people. [7] End-to-end neural networks trained on these dialogues learned to imitate and anticipate human actions; reinforcement learning was then used to reward models that achieved a favorable outcome. The agents were simultaneously trained to emulate human language patterns, which was tested through online conversations with humans, most of whom did not realize they were talking to an agent.
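The two-stage recipe described above, imitation first and reward-driven fine-tuning second, can be illustrated with a toy sketch. This is not FAIR's model, which is a neural network trained on full dialogues. Here the "policy" is just a softmax over three hypothetical opening moves, the human usage counts and payoffs are invented for illustration, and the reinforcement stage uses the exact expected policy-gradient step so the result is deterministic; a real system would have to estimate that step from sampled conversations.

```python
import math

# Hypothetical opening moves with made-up counts of how often humans used them.
HUMAN_COUNTS = {"ask_for_everything": 10, "ask_for_favorite_item": 30, "offer_even_split": 60}
# Invented average payoff (0-10 points) each move earns in this toy setting.
TOY_REWARD = {"ask_for_everything": 2.0, "ask_for_favorite_item": 7.0, "offer_even_split": 5.0}


def softmax(logits):
    """Turn raw preference scores into a probability distribution."""
    peak = max(logits.values())
    exps = {move: math.exp(value - peak) for move, value in logits.items()}
    total = sum(exps.values())
    return {move: e / total for move, e in exps.items()}


def supervised_stage(counts):
    """Stage 1: imitate humans. Log-frequencies make the softmax match the data."""
    total = sum(counts.values())
    return {move: math.log(count / total) for move, count in counts.items()}


def reinforcement_stage(logits, rewards, steps=2000, lr=0.05):
    """Stage 2: shift probability toward moves that beat the current average payoff."""
    logits = dict(logits)
    for _ in range(steps):
        probs = softmax(logits)
        expected = sum(probs[move] * rewards[move] for move in probs)
        for move in probs:
            # Exact gradient of expected reward with respect to this move's logit.
            logits[move] += lr * probs[move] * (rewards[move] - expected)
    return logits


imitation_policy = softmax(supervised_stage(HUMAN_COUNTS))
tuned_policy = softmax(reinforcement_stage(supervised_stage(HUMAN_COUNTS), TOY_REWARD))

for move in HUMAN_COUNTS:
    print(f"{move}: imitation={imitation_policy[move]:.2f}, tuned={tuned_policy[move]:.2f}")
```

After the first stage the policy simply mirrors what people did. After the second, it concentrates on whichever move pays best in the toy payoff table, even though humans chose that move less often. That gap between "sounds human" and "wins" is the tension the paragraph above describes.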

The end result was agents that could engage in start-to-finish negotiations, bargain over multiple issues, and use dialog rollouts to simulate how a conversation might unfold and steer toward more favorable outcomes. The agents also naturally developed the ability to practice deceit by feigning initial interest in less valued items in order to create the pretense of “compromise” in later concessions. [8] This naturally raised some eyebrows and drew press coverage questioning whether Facebook had gone too far in advancing AI, and what it means for robots to be able to lie to win negotiations against humans.
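The idea behind dialog rollouts can be sketched in a few lines: before speaking, the agent simulates how the rest of the conversation might go for each candidate message and picks the one with the best average outcome. Everything specific below is an assumption made for illustration: the item values, the three candidate messages, the acceptance probabilities standing in for a model of the other party, and the rule that a rejected offer scores zero.

```python
import random

# Hypothetical private values this agent places on each item type.
ITEM_VALUES = {"book": 1, "hat": 5, "ball": 1}

# Candidate messages: (items the agent would keep, assumed chance the other side accepts).
CANDIDATES = {
    "I want everything.": ({"book": 2, "hat": 1, "ball": 3}, 0.05),
    "I'll take the hat and one ball.": ({"hat": 1, "ball": 1}, 0.60),
    "Just the hat for me.": ({"hat": 1}, 0.90),
}


def score(kept):
    """Points the agent earns from the items it keeps."""
    return sum(ITEM_VALUES[item] * count for item, count in kept.items())


def rollout(kept, accept_prob, rng):
    """Simulate one possible ending: the deal's score if accepted, zero if not."""
    return score(kept) if rng.random() < accept_prob else 0


def choose_utterance(candidates, n_rollouts=1000, seed=0):
    """Average many simulated endings per candidate and return the best one."""
    rng = random.Random(seed)
    averages = {}
    for utterance, (kept, accept_prob) in candidates.items():
        total = sum(rollout(kept, accept_prob, rng) for _ in range(n_rollouts))
        averages[utterance] = total / n_rollouts
    best = max(averages, key=averages.get)
    return best, averages


best_utterance, expected_scores = choose_utterance(CANDIDATES)
print(best_utterance, expected_scores)
```

In this toy setup the greedy opener is worth the most on paper but is almost never accepted, so the simulated averages favor a more modest request. A real agent would replace the fixed acceptance probabilities with a learned model of the other party's replies.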

For Facebook, the question now is how it chooses to further develop and apply machine learning and language processing in the field of AI and chatbots. In the short term, Facebook should test these dialog agents in a few discrete business applications, such as partnering with companies to see how dialog agents can scale, drive efficiencies in the sales process, and close deals that previously required a human touch. Sales functions are often incredibly costly and highly variable in quality because they depend on traditionally “human” traits: negotiation, the ability to converse, and innate trust. Introducing chatbots can lead to more efficient sales processes (e.g. targeted messaging through Facebook data), speed up the sales cycle (e.g. no wait times to reach a representative), and eliminate the fallible elements of human behavior that impact sales (e.g. biases, emotions, etc.). In the long term, Facebook needs to grapple with the potential ethical and social implications that come with the advent of robots that can lie and negotiate. Alongside other companies in its field, it should define and codify some of the boundaries on innovation in AI, particularly as the line between interacting with a human and interacting with a robot blurs. Facebook should reconsider its open-source platform strategy and establish guardrails to prevent chatbots from perpetuating bias, influencing socio-political movements, or masquerading as humans and deceiving users. As natural language processing and neural networks continue to advance the capability of robots, the question remains where the trade-offs lie between the gains in efficiency and communication and the potential dangers to society as robots adopt the more sinister aspects of human behavior.

(Word Count: 769)

1. Harry McCracken, “The Great AI War of 2018,” Fast Company, November 2017, [http://web.a.ebscohost.com.prd2.ezproxy-prod.hbs.edu/ehost/pdfviewer/pdfviewer?vid=1&sid=e17f61a2-ffcd-4422-a5d4-e589f1ff5e77%40sessionmgr4009], accessed November 2018.

2. Will Knight, “Facebook Wants to Merge AI Systems for a Smarter Chatbot,” MIT Technology Review, May 15, 2017, [https://www.technologyreview.com/s/607854/facebook-wants-to-merge-ai-systems-for-a-smarter-chatbot], accessed November 2018.

3. Libby Plummer, “The best bots you can use on Facebook Messenger,” Wired UK, Jun 13, 2017, [https://www.wired.co.uk/article/chatbot-list-2017], accessed November 2018.

4. Alex Heath, “Facebook’s grand vision for how we’ll use apps in the future is all about Messenger,” Business Insider, Apr 12, 2016, [https://www.businessinsider.com/mark-zuckerberg-unveils-messenger-bots-2016-4], accessed November 2018.

5. Will Knight, “AI’s Language Problem,” MIT Technology Review, Aug 19, 2016, [https://www.technologyreview.com/s/602094/ais-language-problem/], accessed November 2018.

6. Liat Clark, “Facebook teaches bots how to negotiate. They learn to lie instead,” Wired UK, Jun 15, 2017, [https://www.wired.co.uk/article/facebook-teaches-bots-how-to-negotiate-and-lie], accessed November 2018.

7. Mike Lewis, Denis Yarats, Yann N. Dauphin, Devi Parikh and Dhruv Batra, “Deal or No Deal? End-to-End Learning for Negotiation Dialogues,” Facebook AI Research and Georgia Institute of Technology, Jun 16, 2017, [https://s3.amazonaws.com/end-to-end-negotiator/end-to-end-negotiator.pdf], accessed November 2018.

8. “Deal or no deal? Training AI bots to negotiate,” code.fb.com, Jun 14, 2017, [https://code.fb.com/ml-applications/deal-or-no-deal-training-ai-bots-to-negotiate], accessed November 2018.

Great piece with a very real concern around the advancements of chatbots. As you say, it is going to be a very tough tradeoff for businesses investing in chatbots in the future. On one hand, you want your chatbot to be as effective in communication as possible given that customers actually prefer the efficiency and time savings that come along with a chatbot. However, if the chatbot makes mistakes and/or deceives a customer as you mention, your company will quickly drive away business and become untrusted. Therefore, you have a tough decision to make between training the chatbot to the point where it has too much power, but where you don’t have to worry as much about simple mistakes with customers, and not training the chatbot as thoroughly and accepting some level of mistake. In the end, companies will simply need to impose more guardrails as the technology improves.

This was a very insightful article outlining the potential benefits and concerns for chatbots as Facebook makes tremendous progress to use chatbots to make some of their processes much more efficient. You bring up a big tension point with the social and ethical implications that arise when chatbots are able to mimic real human behavior. Do you think people react differently when they know they are talking to chatbots vs. a real human in a customer service setting, for example? If so, do you think it is unethical for Facebook to disguise their chatbots and present them as real human customer support?

Another interesting point that came to mind was if Facebook continues with the open source strategy, people might be able to use chatbots on the customer end to negotiate against other chatbots. I think it would be interesting to see the equilibrium when two chatbots negotiate against each other.

Well-written and thought-provoking piece! I too share your concerns about the dangers that exist with this particular use case. From the perspective of businesses that might adopt these robots, I would question whether the efficiency they arguably help achieve truly offsets the lack of control over their behaviours. I also question how much the human element can and should be removed, given its role as an additional system of control should things get out of hand. From a Facebook perspective, the company is also opening itself up to further scrutiny over its lack of control over its products being used to further nefarious activity.