Dr. Roboto Will See You Now – Improving Radiology Diagnostics

To what extent can StatDx's machine learning tool help improve radiologists' efficiency and accuracy?

Over the last fifteen years, as diagnostic imaging has become more accurate and new imaging methods have been created, the volume of imaging has increased dramatically. For example, a typical CT exam in 1999 would have yielded 82 images, while today the same exam would yield 679. As a consequence, American radiologists have had to take on a dramatically heavier workload. At the Mayo Clinic, for example, radiologists must interpret an image every 3-4 seconds over the course of an 8-hour workday [1]. This volume and pace put diagnostic accuracy under strain: misdiagnosis accounts for 75% of radiology malpractice claims [2]. Enter StatDx.

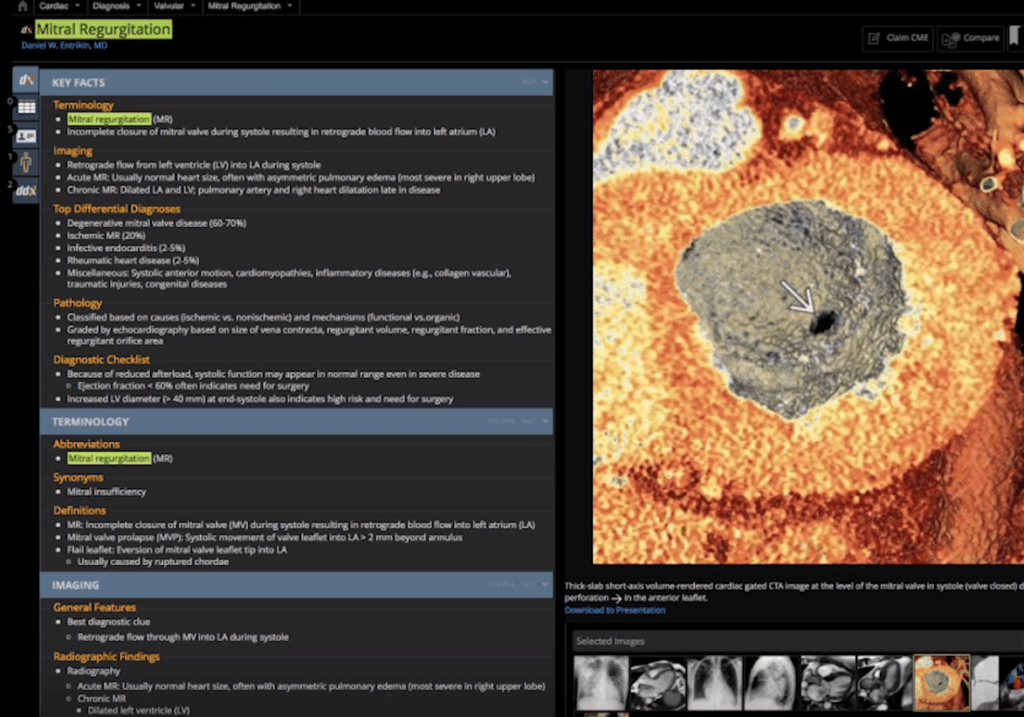

StatDx is a clinical decision support tool built by radiologists for other radiologists. According to the company, it has the largest database of radiology cases (over 20,000) and expert-written diagnoses to help radiologists increase their speed and accuracy [3]. When viewing an image, a radiologist can log into StatDx and test a potential diagnosis. StatDx then returns that diagnosis along with other differential diagnoses, ordered by frequency. Each differential diagnosis is supplemented by symptoms, case studies, and typical images, so that the radiologist can compare their image against the standard before making a final diagnosis.

StatDx’s user interface has a combination of key facts, differential diagnoses and images

StatDx’s initial content library was developed by radiologists at the top of their field. Since then, more data points and patient cases have been added to further refine the list of potential diagnoses. StatDx’s current model reduces radiologists' diagnostic errors by 19% overall and by 37% in difficult imaging cases [4]. It has achieved almost 100% penetration of the US radiology resident market and is considered the go-to imaging diagnostic tool [3].

To date, machine learning has only helped inform the differential diagnosis component of the tool, through ongoing refinement of the list of potential diagnoses. However, there is vast potential for improvement here through the integration of further clinical data, such as information from the patient’s electronic health record (EHR)[5]. Today, radiologists must compare information from the EHR against information provided in StatDx, rather than have the tool match it for them.

At present, StatDx cannot easily integrate with EHRs. This has two implications: radiologists cannot easily include information from StatDx in a patient's clinical report, and the program does not use clinical data to refine or narrow the differential diagnosis list [6].
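To make the gap concrete, here is a minimal, purely illustrative sketch of how a differential list that is ordered by frequency alone could instead be narrowed with structured findings pulled from an EHR. StatDx's actual ranking logic is not public; the `Differential` class, the `rank_by_frequency` and `narrow_with_ehr` functions, the placeholder diagnosis labels, frequencies, and finding codes are all hypothetical. A simple rule-based filter stands in for whatever learned model a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class Differential:
    """One candidate diagnosis in a hypothetical differential list."""
    diagnosis: str
    frequency: float  # hypothetical relative frequency for this imaging appearance
    supporting: set[str] = field(default_factory=set)  # clinical findings that favor it
    excluding: set[str] = field(default_factory=set)   # clinical findings that argue against it


def rank_by_frequency(ddx: list[Differential]) -> list[Differential]:
    """Current behavior as described in the text: order candidates by frequency alone."""
    return sorted(ddx, key=lambda d: d.frequency, reverse=True)


def narrow_with_ehr(ddx: list[Differential], ehr_findings: set[str]) -> list[Differential]:
    """Hypothetical behavior: drop candidates contradicted by EHR findings, then
    rank by how many EHR findings support each one, breaking ties by frequency."""
    kept = [d for d in ddx if not (d.excluding & ehr_findings)]
    return sorted(
        kept,
        key=lambda d: (len(d.supporting & ehr_findings), d.frequency),
        reverse=True,
    )


# Placeholder diagnoses and finding codes; not real clinical data.
ddx = [
    Differential("Diagnosis A", 0.5, supporting={"fever"}, excluding={"normal_wbc"}),
    Differential("Diagnosis B", 0.3, supporting={"known_primary_cancer"}),
    Differential("Diagnosis C", 0.2, supporting={"immunosuppression", "fever"}),
]
ehr = {"fever", "immunosuppression", "normal_wbc"}

print([d.diagnosis for d in rank_by_frequency(ddx)])     # ['Diagnosis A', 'Diagnosis B', 'Diagnosis C']
print([d.diagnosis for d in narrow_with_ehr(ddx, ehr)])  # ['Diagnosis C', 'Diagnosis B']
```

In this toy example, the most frequent candidate drops out once a contradicting EHR finding is present, and the least frequent one rises to the top because the patient's record supports it. A real system would more likely learn these weights from labeled cases than hand-code them; the rule-based version simply shows what "narrowing" the list with clinical data could mean.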

Looking Forward

StatDx recently announced a partnership with M*Modal, a clinical documentation tool [7]. M*Modal will provide advanced speech recognition and workflow management so that radiologists can transcribe information from StatDx directly into their clinical notes in the EHR. It remains to be seen whether this will result in a two-way flow of information in which clinical data is combined with imaging to improve the accuracy of StatDx’s tool.

Over the medium term, StatDx should prioritize the inclusion of clinical data in its decision-making software. Pulling this information together is inherently challenging, because each case already in the data set would need to be updated with additional data fields. Many of those cases may not have the relevant clinical data readily available, leaving the data set incomplete and harder to navigate accurately. One potential solution is to partner with several large academic medical centers and their EHR vendors to build out the data repository this additional functionality requires.

There is further potential to expand the clinical decision support to suggest the best follow-up path for each type of diagnosis. In this expansion, the combination of clinical data and imaging diagnostics would help inform the best treatment path, potentially eliminating unnecessary procedures and additional imaging.

Finally, StatDx might consider expanding into other specialties where imaging and data are abundant (e.g., oncology, pathology). As with IBM Watson, there is some concern about the scalability of this product into other specialties. StatDx has been designed specifically for the radiology community and its workflow. Adjustments would be needed to include the appropriate (and different) data fields and to match the workflow needs of an oncologist or pathologist.

For Thought

Given StatDx’s market penetration and its impact on accuracy to date, how much runway does it really have for growth? More broadly, is there potential for truly scalable machine learning decision support tools in healthcare? What are their limitations?

(798 words)

References

[1] McDonald, R., Schwartz, K., Eckel, L., Diehn, F., Hunt, C., Bartholmai, B., Erickson, B. and Kallmes, D. (2015). The Effects of Changes in Utilization and Technological Advancements of Cross-Sectional Imaging on Radiologist Workload. Academic Radiology, 22(9), pp.1191-1198.

[2] Appliedradiology.com. (2018). The radiologist’s gerbil wheel: interpreting images every 3-4 seconds eight hours a day at Mayo Clinic. [online] Available at: https://appliedradiology.com/articles/the-radiologist-s-gerbil-wheel-interpreting-images-every-3-4-seconds-eight-hours-a-day-at-mayo-clinic [Accessed 11 Nov. 2018].

[3] Statdx.com. (2018). Features | STATdx. [online] Available at: http://www.statdx.com/features/ [Accessed 11 Nov. 2018].

[4] Radiology Business. (2018). Elsevier’s decision support tool helps radiologists cut down on diagnostic errors. [online] Available at: https://www.radiologybusiness.com/topics/imaging-informatics/elsevier-decision-support-tool-radiologists-diagnostic-errors [Accessed 11 Nov. 2018].

[5] Diagnosticimaging.com. (2018). Tools to Aid Radiology Workflow. [online] Available at: http://www.diagnosticimaging.com/practice-management/tools-aid-radiology-workflow [Accessed 11 Nov. 2018].

[6] Radiologytoday.net. (2018). Decision Support. [online] Available at: https://www.radiologytoday.net/archive/rt010p16.shtml [Accessed 11 Nov. 2018].

[7] Elsevier.com. (2018). Elsevier collaborates with M*Modal to launch next-generation diagnostic decision support workflow. [online] Available at: https://www.elsevier.com/about/press-releases/clinical-solutions/elsevier-collaborates-with-m-modal-to-launch-next-generation-diagnostic-decision-support-workflow [Accessed 11 Nov. 2018].

Fascinating topic and very well-written essay.

It is highly interesting that two of the most debated issues in ML are absent from, or have little impact on, this healthcare application.

First, the data used to train the algorithm does not seem to include significant biases or errors that could hurt the algorithm’s accuracy and reliability. This appears to be achieved by drawing only on the most respected doctors. Second, it is used as a tool and not as the final answer to the question at hand, which seems essential to its long-term sustainability, particularly since human lives are involved.

As to whether it is scalable, my sense is that the current near-100% penetration is not an impediment to growth. On the contrary, it is a selling point that could be used to expand geographically (e.g., internationally) and into other verticals, as cross-selling would be relatively straightforward.

My key concern is indeed the data integration issue. As more features are added to an algorithm, not only might some data sets become unusable due to incompleteness (as you pointed out), but the retrained algorithm may also change its prediction patterns. The risk is that a doctor could run the improved version on an old case and discover that they had potentially committed both type I and type II errors (false positives and false negatives), lowering their confidence in the tool.

Great article. I think image analysis is one of the biggest areas for growth in machine learning, and I had no idea that radiologists had to sift through such massive quantities of imagery. This seems like the perfect use case for an "AI first, MD second" approach to detection and diagnosis.

I wonder whether, in the future, imaging studies could be performed on populations over time, with AI used to detect conditions at an earlier stage than doctors can currently observe through image analysis. If imaging were conducted on a population during annual checkups over a long period, there could be a rich data set of images for AI to analyze after some of those patients developed conditions later on, to see whether earlier detection is feasible.

Great topic! Analysis of imagery is growing in every field. StatDx seems to be a learning tool with a lot of room for growth. It has the potential to scale into any area of healthcare that involves symptoms, clinical pictures (not just radiology), and other forms of health care data. Dentistry is one example.