Black Box Personalization and Fragmentation of the Facebook News Supply

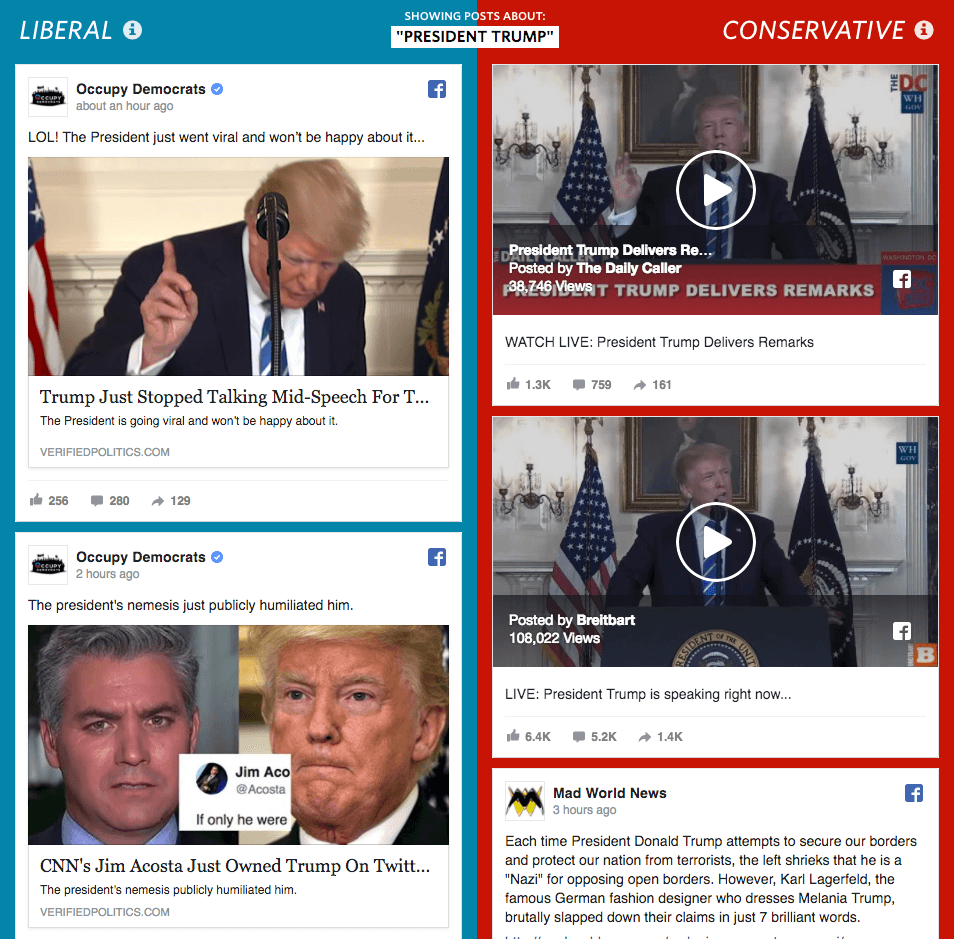

As Facebook has improved its news content supply chain with sophisticated machine learning recommendation algorithms, it has enabled an internet where we rarely encounter contradictory viewpoints.

As news has become increasingly digital, Facebook has become the de facto public forum for sharing media content. As of 2017, 67% of U.S. adults got at least some of their news from social media[1]. Through a supply chain lens, one could consider media content Facebook’s “customer offering” and its recommendation algorithms the supply chain. Rapid advances in machine learning have made this supply chain more efficient: complex algorithms trained on massive data sets improve Facebook’s ability to connect users with the media they choose to engage with. If user engagement is the target metric, these algorithms have unquestionably improved the Facebook user experience. However, this approach has two drawbacks: the unpredictability of “Black Box” systems, and the creation of “Echo Chambers” as feedback loops silo users into “spheres of information where we’re rarely subjected to views that differ from our own.”[2]

Black Box models are those whose outputs are visible but whose internal logic is not. Facebook’s proprietary news recommendation algorithm is one example. Such models deliver high performance at the cost of explainability and oversight: “Users have no control over what algorithms decide to show us, and little understanding of why they may learn to show you one piece of content over another.”[3] In fact, even Facebook employees cannot definitively say which factors led a story to be displayed a certain way for a certain person, or why slightly tweaking the inputs can change the results dramatically. Combining black box algorithms with the “human tendency to select information that confirms their existing beliefs”[4] produces a troubling result: users are shown more and more of what they already agree with. Research has also shown that Facebook’s ranking slightly reduces “cross-cutting” content, that is, content from outside one’s political sphere: “After ranking [news content], there is on average slightly less crosscutting content”[5]. Facebook is enabling the formation of echo chambers of like-minded users without knowing how they will affect its own future profitability or society as a whole.

There are three actors in this equation: content producers, Facebook, and content consumers. Facebook must address this concern by clarifying how it relates to each side. Broadly, how does Facebook interact with content producers to select which content to show? Specifically, how do users interact with Facebook to consume content? Currently, there is a lack of clarity about Facebook’s role, intentions, and algorithms. To solve this problem going forward, Facebook must be transparent in every role it plays.

The first question Facebook must answer concerns its role. As “fake news” has entered the collective vocabulary, Facebook’s role in filtering content has been called into question: is it an impartial aggregator that is truly indifferent to content, or an editor that emphasizes “real news” from trustworthy sources? In the short term, Facebook can build a system to flag and filter fake news articles written by trolls, along with other spam-like content. In both the short and long term, Facebook must define its position to maintain the trust of content producers and consumers.

The second question Facebook must answer concerns its intentions and objectives. As Hosanagar wrote, “Why should Facebook be obligated to show content with which we don’t engage?”[6] Is the echo chamber even Facebook’s responsibility to fix? If Facebook concludes that it is worth fixing, it can tweak its content delivery system to surface media that is popular in other circles, perhaps in communities with contradictory views.
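To make the idea of tweaking the delivery system concrete, here is a toy re-ranking sketch. It is not Facebook’s algorithm, which is proprietary; the `Story` fields, the `rank_feed` function, the `diversity_weight` parameter, and the sample scores are all hypothetical. Each story’s predicted engagement receives a bonus when the story is flagged as cross-cutting, so a weight of zero reproduces a pure engagement ranking.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    engagement: float    # hypothetical predicted engagement score, 0.0 to 1.0
    cross_cutting: bool  # hypothetical flag: popular outside the user's usual circle


def rank_feed(stories, diversity_weight=0.0):
    """Sort stories by predicted engagement plus a bonus for cross-cutting content.

    diversity_weight=0.0 gives a pure engagement ranking (the echo-chamber case);
    larger values push cross-cutting stories further up the feed.
    """
    def score(story):
        bonus = diversity_weight if story.cross_cutting else 0.0
        return story.engagement + bonus

    return sorted(stories, key=score, reverse=True)


# Hypothetical sample feed for one user.
feed = [
    Story("A", engagement=0.9, cross_cutting=False),
    Story("B", engagement=0.6, cross_cutting=True),
    Story("C", engagement=0.7, cross_cutting=False),
]

print([s.title for s in rank_feed(feed)])                        # ['A', 'C', 'B']
print([s.title for s in rank_feed(feed, diversity_weight=0.4)])  # ['B', 'A', 'C']
```

In this toy model, a user-facing opt-in for a more diversified feed would amount to letting each user choose their own `diversity_weight`.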

The last question Facebook must face is how transparent it should be about the nuts and bolts of its recommendation algorithms. So far, the details of the algorithm have been kept under wraps: “It’s the company’s unique intellectual property, a competitive advantage it can’t expose”[7]. However, secrecy is not the only option. Facebook could open-source its recommendation algorithm so that others can inspect the logic behind what they see. It could give users more agency by building tools that let them display more cross-cutting content; perhaps only a few users would opt into a more diversified News Feed, but the option would at least force each user to make a conscious decision. Finally, Facebook could build and share reports on what people consume, at both the individual (anonymized) and collective level. By ensuring transparency at every stage of the content recommendation process, Facebook can give all parties the trust and resources to consume news as they intend, while facilitating a conversation about the place of echo chambers in society.

(758 Words)

[1] Elisa Shearer and Jeffrey Gottfried, “News Use Across Social Media Platforms 2017”, Pew Research Center, September 7, 2017, http://www.journalism.org/2017/09/07/news-use-across-social-media-platforms-2017/, accessed November 2017

[2] Patrick Kulp, “Escape the echo chamber: How to fix your Facebook News Feed”, Mashable, November 18, 2016, http://mashable.com/2016/11/18/facebook-hacking-newsfeed-well-rounded/#fex3kZADGqq2, accessed November 2017

[3] Christina Bonnington, “It’s Time for More Transparency in A.I.”, Slate, October 24, 2017, http://www.slate.com/articles/technology/technology/2017/10/silicon_valley_needs_to_start_embracing_transparency_in_artificial_intelligence.html, accessed November 2017

[4] Alessandro Bessi, “Personality Traits and Echo Chambers on Facebook”, IUSS Institute for Advanced Study, June 16, 2016, https://arxiv.org/pdf/1606.04721.pdf, accessed November 2017

[5] Eytan Bakshy, Solomon Messing, and Lada Adamic, “Exposure to ideologically diverse news and opinion on Facebook”, Science, Vol. 348, Issue 6239, pp. 1130-1132, June 5, 2015

[6] Kartik Hosanagar, “Blame the Echo Chamber on Facebook. But Blame Yourself, Too”, Wired, November 11, 2016, https://www.wired.com/2016/11/facebook-echo-chamber/, accessed November 2017

[7] Hosanagar

I have a friend who is especially passionate about certain topics. My favorite question to him is “Can you articulate the other side’s point of view?” He frequently cannot. It reminds me of a famous Charlie Munger concept: to hold an opinion, you need to be able to articulate both sides of the argument.

Facebook feeds my friend’s biases. It feeds my biases. Facebook is in the crosshairs, but, as you know, the issue is even more widespread. Google came under fire early this year when its search autocomplete suggested “are Jews evil” after a user typed “are Jews”.

It’s amazing how big a problem this is. In my opinion, Facebook should take a more active role in helping users mitigate their biases. Facebook’s mission statement is to bring the world closer together; the way its algorithm works today does just the opposite.

Hi, Mark!

Good post. Your two recommendations for Facebook are to a) open-source its recommendation algorithm and b) provide reporting on what content people actually consume.

While these are creative suggestions, neither seems likely to align with Facebook’s business model. Without API access, no third-party app can integrate with Facebook’s backend, so tweaking the algorithm in an open-source way won’t be possible.

Facebook does currently let users see how they’re classified for ad and news targeting. Check out a great New York Times write-up on this:

https://www.nytimes.com/2016/08/24/us/politics/facebook-ads-politics.html?_r=0

Unfortunately, the arms race in ad targeting and sales makes it unlikely that users will receive content they won’t engage with. If Facebook sees itself as obligated to reduce political polarization, it will need to make ad product targeting decisions that don’t align with its bottom line: a commitment to display content that likely won’t get high engagement.

The million dollar question: does Facebook have this obligation? Is this a role for the FCC to regulate into existence, a la its broadcast rules from the 1950s?