VMware: Is There a Future in the Datacenter?

VMware has made a business of building solutions for on-premises datacenters. Can they continue their dominance in the era of the public cloud?

Since its founding, VMware has provided the “glue” that has held the modern datacenter together, enabling system administrators to decouple applications from physical hardware. Instead of dedicating specific hardware to each application, an administrator can simply create a “virtual machine” that can be moved, installed, deleted, or expanded on any resource the administrator has access to. This flexibility brought a large increase in resource utilization and lowered the cost of maintaining complex IT infrastructure.

This flexibility ultimately led to the cloud infrastructure that powers technology today. Once it no longer mattered where, or on what hardware, an application physically ran, that application could run anywhere. Initially, this shift to “the cloud” was a boon for VMware: with more machines being added to each company’s “private cloud,” there were more opportunities to sell software to manage every layer of the technology stack. What VMware first did for servers, it later extended to networking and storage. Ironically, the shift to cloud infrastructure that virtualization enabled could ultimately become the undoing of the company that popularized it.

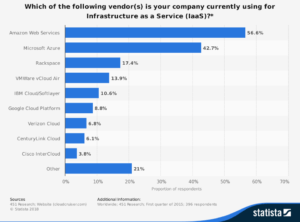

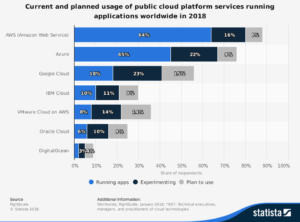

VMware’s crisis stems from the shift from privately owned clouds (e.g., traditional datacenters) to public clouds (e.g., Amazon Web Services). As more workloads move to public clouds, there is less need for the software that powers privately owned datacenters. The company is now at an inflection point: how will VMware stay relevant when its customers no longer own the infrastructure its software helps them administer?

When VMware realized that its customers were looking to the public cloud, its first response was to bury its head in the sand and argue that the public cloud was irrelevant to the types of applications its customers wanted to run[1]. Its argument was that companies would not want to move applications to the public cloud because, once there, they would be impossible to move back. In other words, moving to the public cloud would sacrifice the very flexibility that led customers to adopt virtualization in the first place. Unfortunately for the company, its customers didn’t agree. Workloads began migrating to the public cloud, and the threat to VMware became clear.

Its second reaction was to build its own cloud infrastructure so that customers could purchase computing resources directly from VMware. In 2008, at its annual conference in Las Vegas, VMware announced its vCloud initiative, which would let customers migrate workloads to a public cloud and back again, extending a private cloud with public resources. This fit VMware’s strategy of giving customers flexibility across both environments.

Unfortunately, customers saw this as a half measure. Although vCloud gained significant market share, VMware was never able to turn it into a successful business, and in 2017 it sold the division, vCloud Air, to OVH, a French cloud computing company[2].

The most recent iteration of VMware’s cloud strategy is a partnership with industry giant Amazon Web Services, whose joint offering, VMware Cloud on AWS, became available in 2017[3]. The relationship appears to be going well.

For customers, this looks like the best of both worlds: they can keep using the same virtualization software they run in their own datacenters while gaining access to the immense scale of AWS. For VMware and AWS, it appears to be a win-win. The ability to migrate workloads between public and private clouds should draw customers away from other cloud providers and encourage broader adoption of both public and private cloud solutions.

Going forward, VMware faces several challenges: continued erosion of the private cloud business, commoditization of its software by cheaper or open-source alternatives, and a shift toward workloads its current products cannot handle, such as those that depend heavily on graphics cards (GPUs) or on specialized chips like Tensor Processing Units (TPUs) and FPGAs.

To remain relevant in its core business of virtualization, VMware needs to keep pursuing partnerships with more cloud vendors. Letting Amazon Web Services hold a monopoly on hybrid private/public cloud solutions could be a strategic misstep: by standardizing on one vendor, VMware effectively cedes control of its customers’ IT infrastructure to that vendor and removes itself from the equation. Ideally, VMware would let its customers move virtual machines across different cloud providers as well as between public and private clouds. Customers could then choose the vendor that offers the best price/performance mix for each application and easily move those applications as that balance shifts.

Letting customers move their virtual machines across cloud providers would also slow the progress of VMware’s competitors, whether commercial or open source. Through strategic partnerships like the one it recently forged with AWS, VMware could become the standard.

Finally, VMware needs to develop the ability to virtualize the hardware behind major advances in machine learning and artificial intelligence. It currently has no offering that lets a business virtualize compute resources such as graphics cards (GPUs), Tensor Processing Units, FPGAs, or ASICs, which enable efficient training and execution of machine learning models. As more workloads shift to this type of computation, VMware must adapt its core offering to partition these resources as flexibly as it does servers, networking, and storage, or risk losing relevance.

Unfortunately, this last goal could be extremely difficult. The virtualization VMware has developed so far relies heavily on industry standards such as the x86 instruction set and the SCSI and SATA storage interfaces. No comparable cross-vendor standard exists for machine learning hardware: Nvidia, Google, and Intel, for example, all use different instruction sets for their machine-learning-optimized processors. To offer a scalable solution, VMware needs to find a way to support whatever type of compute resource a customer wants to use.

[1] https://www.telegraph.co.uk/finance/newsbysector/mediatechnologyandtelecoms/digital-media/10039968/Pat-Gelsinger-Cloud-computing-is-the-fifth-wave-firms-must-ride-it-or-be-swept-away.html

[2] https://www.theregister.co.uk/2017/04/04/vmware_sells_vcloud_air_core/

[3] http://ir.vmware.com/overview/press-releases/press-release-details/2017/VMware-and-AWS-Announce-Initial-Availability-of-VMware-Cloud-on-AWS/default.aspx