NVIDIA: How to win the “GPU wars”

This blog attempts to explain the drivers behind NVIDIA's growth story.

If you have ever wondered how a not-so-stellar startup can beat large incumbents like Intel, NVIDIA is the perfect case for you. Founded in 1993, NVIDIA started from humble beginnings, but today it is the leading designer of graphics processors, commanding more than 70% of the GPU market.

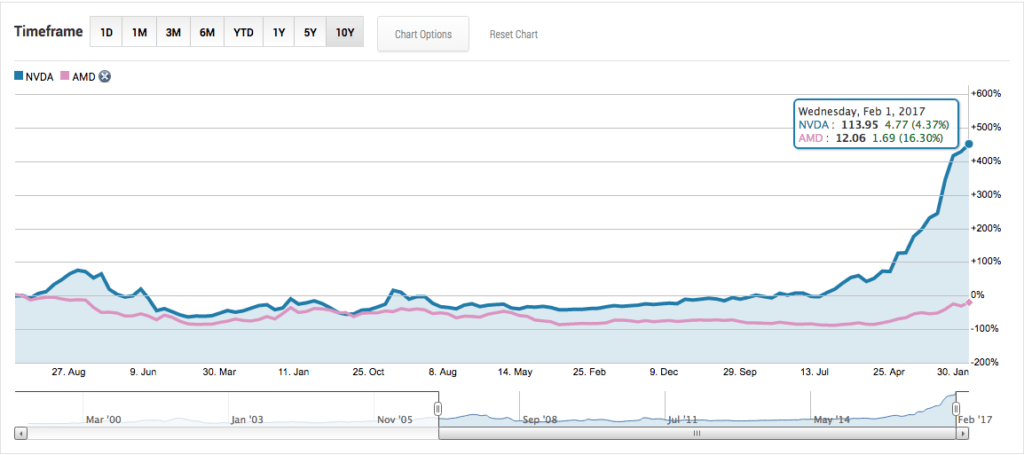

In the mid-1990s, NVIDIA’s products were a failure. Fast forward to today, and NVIDIA is one of the most impressive tech companies. In 2016, the company’s stock price skyrocketed by 229%, driven by its continuous investment in graphics processing units (GPUs) and its targeting of the ‘hottest’ application areas, such as deep learning. Competition in the GPU market has a long history, which began with the dominance of Intel and AMD, but NVIDIA has grabbed significant market share from these key competitors thanks to its strategic investments.

NVIDIA 10-year stock price evolution in comparison to its long-term rival, AMD [2]

Trends in Technology

GPU-accelerated computing has been on the rise in recent years due to its massive speed advantage over CPU-only computing. It uses a GPU alongside a CPU to accelerate analytics applications. The difference in processing speed is driven by the parallel structure of GPUs. As opposed to the sequential computing logic of CPUs, GPUs have a parallel architecture with thousands of smaller cores designed for running tasks simultaneously [1].
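To make the contrast concrete, here is a minimal Python sketch of the two execution patterns. It only illustrates the structure and is not GPU code: the names `brighten`, `run_sequential`, and `run_parallel`, as well as the worker count, are assumptions made up for this example, and Python threads will not deliver the speedup that thousands of GPU cores can.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel):
    """Hypothetical per-element task: raise a pixel value by 10, capped at 255."""
    return min(pixel + 10, 255)


def run_sequential(pixels):
    # CPU-style pattern: one worker handles the elements one after another.
    return [brighten(p) for p in pixels]


def run_parallel(pixels, workers=8):
    # GPU-style pattern: many workers each apply the same small task
    # to a different element. Results come back in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


pixels = [0, 100, 250, 255]
# Both patterns compute the same result; only the execution structure differs.
assert run_sequential(pixels) == run_parallel(pixels)
```

The point of the sketch is that each element can be processed independently, which is the property that lets a GPU spread the work across many cores at once.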

While GPUs were initially used mostly for generating high-quality images in video games, NVIDIA realized that the key value creation opportunity in the GPU market was processing speed. To pursue emerging technologies early, NVIDIA made significant investments in R&D. Its competitive advantage was the rapid pace of its advances in graphics technology: it released a new chip every 6 months, as opposed to the industry average of 18 months. To achieve such fast turnaround, the company invested heavily in forming multiple development teams and in new facilities that would reduce the failure rate in chip manufacturing [3]. Unlike its closest competitor, AMD, NVIDIA followed a holistic approach to its investments. While AMD introduced incremental technological improvements, NVIDIA was able to generate across-the-board advancements. Moreover, the company created an employee-friendly environment with flexible working hours and generous compensation packages. By 2000, NVIDIA was able to beat deep-pocketed competitors like Intel.

The important value capture lever was the higher prices NVIDIA was able to charge thanks to increasing consumer demand for GPUs. Given the vast performance advantage of GPU-powered computing, market demand has grown, along with a willingness to pay a premium price for performance. The company was therefore able to subsidize its investment costs through higher margins on its products.

Big Bets on Deep Learning

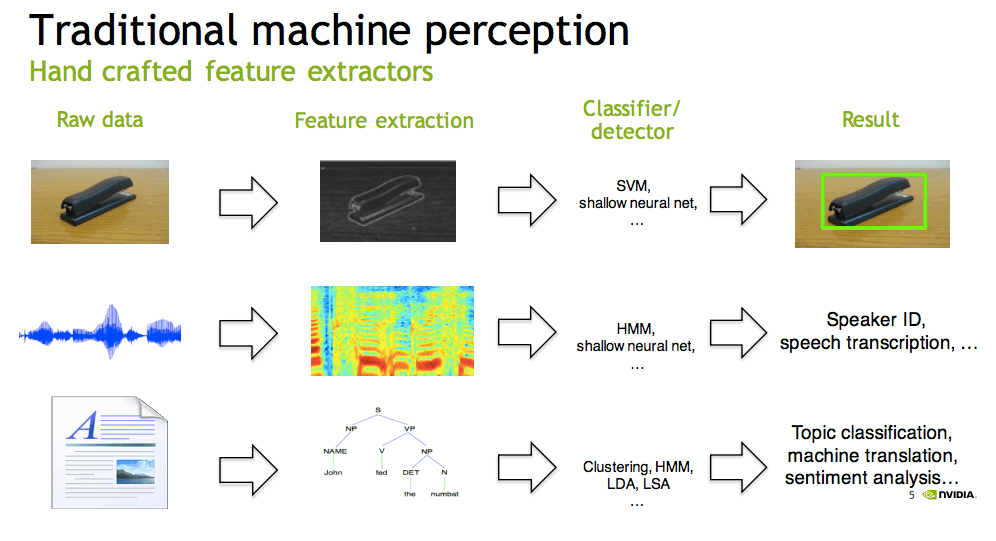

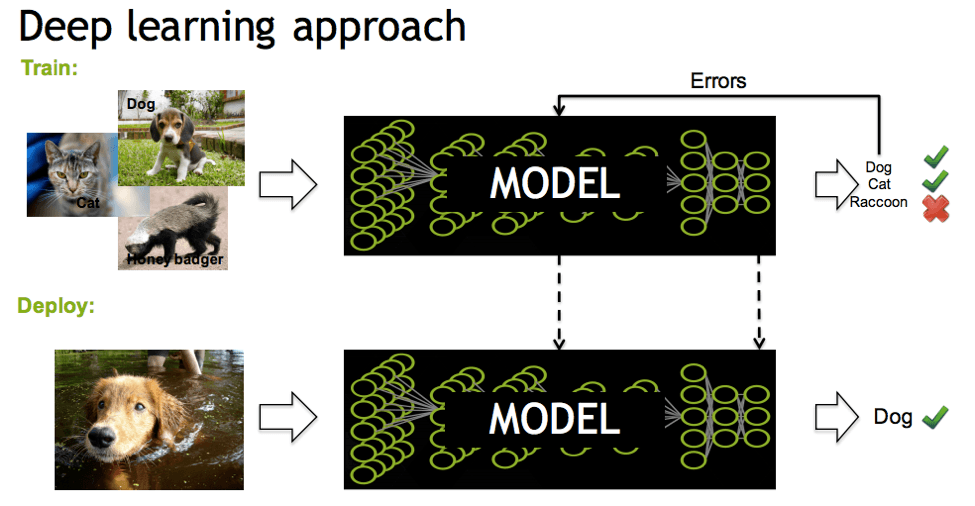

More recently, the company has continued to make big bets in technology by investing heavily in deep learning. Deep learning combines machine learning with the application of neural networks [4]. In industry, deep learning has immense application potential, ranging from speech recognition and translation to image recognition. In traditional models, raw data is fed into algorithms for feature extraction from images or text. In deep learning, by contrast, large amounts of training data are fed into the model, and the model is trained through rapid feedback iterations [6].
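The idea of training through feedback iterations can be sketched in a few lines of Python. This is a deliberately tiny illustration, not a neural network: it fits a single weight `w` so that `w * x` matches the target `y`, and the function name `train`, the sample data, and the learning rate are all assumptions chosen for the example.

```python
def train(samples, learning_rate=0.01, iterations=500):
    """Fit y = w * x by repeated feedback: predict, measure the error, adjust w."""
    w = 0.0  # start with an uninformed guess
    for _ in range(iterations):
        for x, y in samples:
            error = w * x - y                # how far off the prediction is
            w -= learning_rate * error * x   # nudge w to shrink the error
    return w


# Hypothetical training data generated by the rule y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = train(samples)
print(f"learned weight: {w:.4f}")
```

Real deep learning models repeat this same predict-and-adjust loop over millions of weights and examples, which is why the processing power available for training matters so much.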

The problem with the traditional model is that it fails under unexpected variations, and this brittle performance makes it unfeasible in sensitive use cases such as driverless cars. In deep learning, however, the model’s performance depends not on the robustness of the algorithm but on the extensiveness of its training. Therefore, the key driver of success in improving the performance of these neural networks is the processing power of the servers on which they are run, which have traditionally been CPU servers.

According to industry estimates, the deep learning market is expected to generate more than $100 billion in revenue by 2024 [5]. NVIDIA was able to become part of this emerging market through its early investments in using GPUs for deep learning applications. The company built programming toolkits (e.g. CUDA) that allowed researchers to easily develop their deep learning models. The company cleverly identified key value creation levers for its customers. A crucial advantage of using GPUs over CPUs for processing neural networks is cost. According to a Wired magazine article, Google built a ‘computerized brain’ with 1,000 CPU servers costing $1 million, while the Stanford AI Lab used 3 GPU-accelerated servers to achieve similar performance for only $33,000! Moreover, training times have been demonstrated to be about 9 times faster when networks are trained with GPUs. Grasping the cost and speed advantages of GPUs early on, NVIDIA made significant investments in this area. Predictably, many tech companies today, including Facebook, are using NVIDIA’s GPUs to build their machine-learning computers.
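The cost comparison quoted from Wired can be worked through with simple arithmetic. The short Python sketch below uses only the figures given above and takes the claim of similar performance at face value; the variable names are made up for illustration.

```python
# Figures as quoted in the text above.
cpu_servers, cpu_total_cost = 1000, 1_000_000   # Google's CPU cluster
gpu_servers, gpu_total_cost = 3, 33_000         # Stanford AI Lab's GPU servers

cost_per_cpu_server = cpu_total_cost / cpu_servers   # $1,000 per server
cost_per_gpu_server = gpu_total_cost / gpu_servers   # $11,000 per server
cost_ratio = cpu_total_cost / gpu_total_cost         # roughly 30x

print(f"CPU setup costs about {cost_ratio:.1f}x as much as the GPU setup")
```

Each GPU server is more expensive than a single CPU server, but because so few are needed, the total bill for the GPU setup is roughly one thirtieth of the CPU setup.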

While NVIDIA’s success in this market is attracting competitive responses, NVIDIA has a clear advantage in seizing the growth trend in graphics processing thanks to its big head start. However, to sustain its winning story, NVIDIA needs to keep investing in its technologies and capture the high-margin, high-end market.

[1] NVIDIA: http://www.nvidia.com/object/what-is-gpu-computing.html

[2] The Motley Fool: http://www.fool.com/quote/nasdaq/nvidia/nvda/interactive-chart

[3] McKinsey: http://www.mckinsey.com/business-functions/strategy-and-corporate-finance/our-insights/the-perils-of-bad-strategy

[4] Moor Insights & Strategy: http://www.moorinsightsstrategy.com/nvidia-gtc-nvidia-bets-big-on-deep-learning/

[5] The Motley Fool: https://www.fool.com/investing/2016/08/18/nvidia-corrects-intels-mistakes-in-deep-learning.aspx

[6] NVIDIA Deep Learning Course: http://on-demand.gputechconf.com/gtc/2015/webinar/deep-learning-course/intro-to-deep-learning.pdf

Great post, thank you for sharing it. NVIDIA’s growth is a remarkable story. I’m curious how sustainable or defensible their competitive edge is… When a new technology breakthrough inevitably finds itself further down the Moore’s Law cost curve, what will happen to NVIDIA if that breakthrough happens in another company?

Great post – very interesting to see NVIDIA thriving thanks to the adoption of GPUs as the hardware of choice for deep learning. With deep learning being increasingly used by businesses to generate insights into their data, I would worry about GPUs becoming commoditized as more entrants become enticed by the high margins and increasing volumes on offer. This would drive competition up and force prices (and margins) down. Other than its first mover advantage, does NVIDIA have any other protection against this seemingly inevitable outcome?