Beware of Ninja Turtles on 88th & 2nd

Allow anyone to report “sketchy” behavior? What could go wrong?

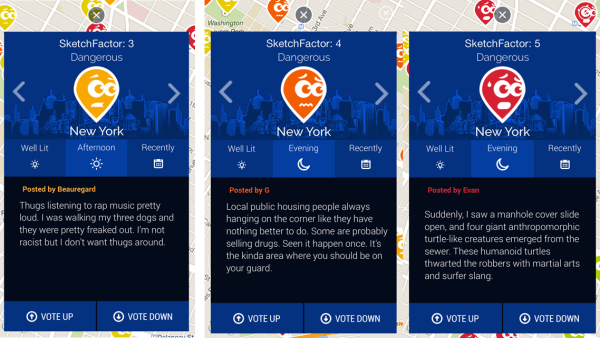

In August 2014, Allison McGuire and Daniel Herrington created an app called SketchFactor that quickly became one of the most popular, yet most hated, apps on the market. SketchFactor’s problem wasn’t poor UI, software bugs, or lack of security; those were apparently fine. Its main problem was how it was used, and the unanticipated consequences of crowdsourcing highly subjective data from the general public. You see, SketchFactor was an app that sought to make the streets safer by letting users report “sketchy” incidents on a map to warn other users of potential danger. The app’s stated purpose was to empower communities with more information about crime and safety in their neighborhoods. Sounds good, right? “Safety in numbers” and whatnot. Well, it turns out that people aren’t as reliable, impartial, and benevolent as the creators believed: shortly after its launch, SketchFactor became a repository of vitriolic, racially charged, classist, and/or downright imaginary incident reports that tainted the reputations of people and communities across the country. The app became infamous and, despite at one point being the third most downloaded iPhone navigation app (likely due in part to the controversy), ultimately succumbed to the PR hurricane it found itself in. By February, the SketchFactor team saw the writing on the wall (graffiti is sketchy) and decided to pivot. As of today, SketchFactor.com is just a grey screen with the message:

One of the main downsides of crowdsourcing is the risk of relying on (often anonymous) users to generate your content, especially when that content is unmoderated. As well-intentioned as the creators may have been, they failed to anticipate and mitigate these risks. SketchFactor relied exclusively on crowdsourced, real-time content that it didn’t (and couldn’t) police, meaning anyone could report anything at any time, regardless of the report’s validity. As the creators said, “Sketchy means a number of different things. To you, it may mean dangerous. To someone else, it may mean weird.” This subjectivity opened the floodgates for racist, classist reports that accomplished nothing other than tarnishing (mostly minority) communities’ reputations and further alienating the poor. In fact, studies comparing actual Washington, D.C., crime data with SketchFactor reports showed little overlap.

Selection bias often plagues crowdsourcing efforts. Companies must understand and account for the fact that their user pool is almost always biased in one way or another, and that these biases can confound the data. In the case of SketchFactor, a major source of bias was the simple fact that it was a smartphone app, something only relatively well-off individuals can afford. So while in theory SketchFactor should have allowed anyone to report suspicious behavior, in practice only middle- to upper-class people had access to the service. When asked about the racial and class implications of the app, the creators answered, “SketchFactor is a tool that can be used anywhere at any time by anyone. The app is not exclusive to privileged communities or tourists. Many of our users experience racial profiling, police misconduct, and harassment. We encourage all users to report this information. In addition, we partner with community organizations to ensure all members of the community have access to this app.” A lofty but likely unattainable goal.

Could it have worked?

Almost certainly not. Even if the media firestorm hadn’t erupted and done irreparable damage to the brand, at its core SketchFactor’s mission of crowdsourcing community alerts depended on the same technologies and people that were its greatest flaws. Using an app platform allows for mobile, real-time reporting but also requires access to a smartphone. Its best bet would have been to replace “free text” reporting with a set of predefined choices that subtly help would-be reporters assess whether an incident merits posting and prevent rogue users from publishing inappropriate material. That way, it could at least partially moderate user-generated content. Even so, SketchFactor was walking on very dangerous ground, and things likely wouldn’t have ended well for it.

John – Super interesting choice to write about. SketchFactor illustrates the difficulty of using crowdsourcing to generate content, as opposed to using it for innovation. Content crowdsourcing has demonstrated success for companies like Waze, but it appears that human insecurities and prejudices overrode the benefit in this case. I completely agree with your final assessment and really wonder whether the founders ever believed SketchFactor could avoid becoming what it ultimately became.

Great post! I wonder whether the app could have been useful if they had limited the types of crowdsourced content. That is, if they had sorted “sketch” content into preset labels such as “muggings,” “violence,” etc., perhaps people would have been more inclined to take it seriously. This could help with content curation, which is their key problem. I think a real use could be integrating useful data with something like Google Maps. If they could leverage analytics to combine this qualitative information with hard data (i.e., number of muggings per month), that could be helpful as an add-on.

It’s a shame that this application was so abused, since it clearly has value to users. I really liked your assessment of the downfall of unmoderated crowdsourced data. Clearly, applications similar to SketchFactor that use reliable data (e.g., police reports) are more successful, if not as entertaining.

To improve the application, SketchFactor could have stolen another product feature from Waze: self-moderation. In Waze, other users are able to “clean up” the data as time goes on, e.g., by indicating that there is no longer a cop on the side of the road. In SketchFactor, there could have been a Useful/Not Useful indicator to weed out useless notes, or a way to indicate whether a “sketchy” condition (graffiti, abandoned buildings, etc.) had been remediated.

Interesting blog post, thank you! Could they have implemented some sort of user feedback or user scoring system to prevent the slippery slope? Uber is trustworthy because of its working feedback loop. I can imagine highly ranked users being rewarded for genuine violence or mugging reports (for example, ones confirmed by the police). Alternatively, could the power of the crowd “push out” the negative comments, as on TripAdvisor, where a single individual can only do limited harm?

Very interesting example; I enjoyed reading about this company. Your post highlights a problem common to platforms that rely on crowdsourced content: monitoring quality and relevance in an efficient and cost-effective way. One option here would have been more manual content curation, but that seems at odds with the platform’s efficiency and its ability to push content out quickly. While companies like Facebook and Twitter do rely on manual curation (outsourced to contractors in the Philippines, for instance) as part of their content moderation to keep nudity out of posts, this approach would likely have proved ineffective for SketchFactor, because its content does not lend itself to manual moderation: judging whether a guy was selling drugs on corner X is nothing like making the much clearer call of “nudity” or “no nudity.” More manual curation would also create a huge bottleneck in the platform, so the quality control mechanism needs to be automated somehow, either by equipping the crowd itself to perform this quality control function or by limiting what can be posted in the first place.