AI fiction

What does AI make of prompts that even humans know little about?

I wondered how a generative AI model would respond to a prompt that doesn’t make much sense even to a human, or to one that makes sense literally but describes something we have no real-life experience or prior knowledge of.

So I decided to go with “alien on unknown planet”, because there is no “correct answer” for what the response should look like. As one would expect, the results were quite strange and unpredictable.

To pin down the source of this unpredictability, I tried a few more prompts to test whether the AI is doing a fantastic job of depicting what humans can’t imagine, or whether it is simply stumbling and throwing back garbage. To do that, I made the prompt progressively simpler and easier to interpret in three stages:

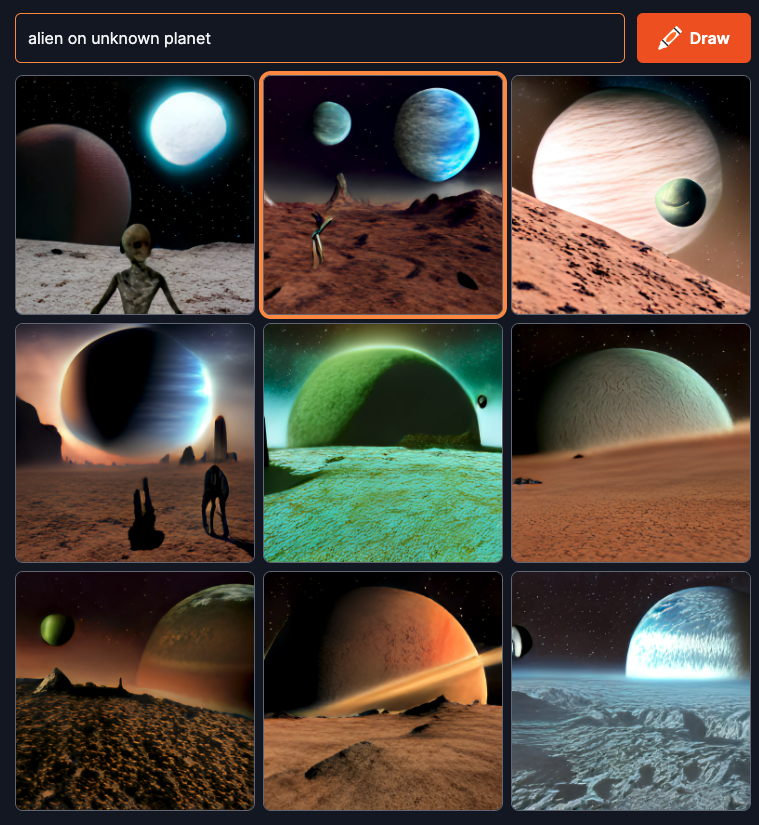

1. “alien on pluto”: The output was now dominated by well-known images of Pluto, with strange artifacts, perhaps induced by the word “alien”.

2. “alien on mercedes”: Although the prompt doesn’t make much sense, the output looks like distorted images of a Mercedes. However, I suspect the distortions simply stem from the AI doing a poor job, not from it being distracted by “alien” in the prompt.

3. “mercedes”: This hypothesis is supported by the final prompt, which simply says “mercedes” but still returns mangled images of a Mercedes-like vehicle.