Royal Bank of Canada banks on machine learning as equity research enters a new paradigm

As one of the first North American banks to incorporate artificial intelligence into equity research, RBC is capitalizing on the opportunity to use machine learning to dramatically improve the speed and accuracy of its capital markets research.

While the approach to capital markets research has steadily grown more sophisticated over the years, the emerging trend of incorporating artificial intelligence (AI) and machine learning could dramatically reshape the way equity research is done. Equity research firms compete on how quickly and accurately they can synthesize news flow into investment recommendations, which they publish in research reports that money managers use to support investment decisions. Machine learning can significantly strengthen a firm's competencies on both fronts.

Although virtually all large financial institutions have publicly recognized the importance of such technology, Royal Bank of Canada (RBC) is one of the few banks in North America to have incorporated AI into equity research [1]. Interest in machine learning has grown largely because of greater computer memory, faster computers, and automated data capture [2]. Building on these technological advances, RBC is capitalizing on the opportunity to compete more effectively on two critical success factors, accuracy and speed:

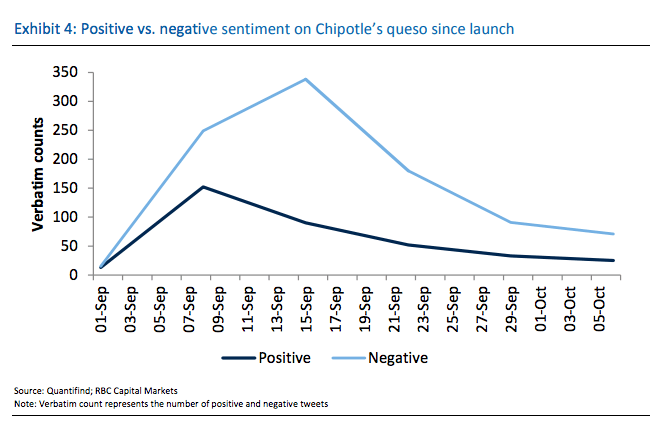

Accuracy (learning better): Machine learning opens up new statistical techniques that allow research analysts to better predict patterns and associations within data. For example, RBC used machine learning to gauge the effect of a social media storm over Chipotle’s queso (cheese dip) debut. Some research analysts were optimistic that the product would help Chipotle recover from its recent struggles (such as an E. coli outbreak in 2015). RBC analyst David Palmer, however, reduced his price target and estimates ahead of the Q3/2017 earnings release, because machine learning findings pointed to weaker-than-expected financial results [4]. The data on social media and search engine trends showed that negative tweets outnumbered positive ones in the week following the queso release. Sentiment also remained negative for some time afterward. Attempts to correlate social media data with stock prices historically proved unreliable. However, dramatic improvements in AI-driven natural language processing have made this data more valuable for predicting impact [2]. Shares of Chipotle fell by roughly 15 percent after the company released Q3/2017 earnings that missed analyst estimates. A month later, Chipotle changed its queso recipe. CEO Brian Niccol has since acknowledged that “the feedback we’ve gotten on queso is that there’s still some opportunity to improve…” [3]
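To make the idea of comparing negative and positive tweets more concrete, here is a minimal Python sketch of a lexicon-based sentiment tally. It labels each post as positive, negative, or neutral by counting matches against two small word lists. It then reports the positive-minus-negative count for each day.

Everything in the example is hypothetical, including the word lists, the function names `classify` and `daily_net_sentiment`, and the sample posts. It is a deliberately simple stand-in and not RBC's actual model. The natural language processing systems described above would replace the keyword lists with trained language models.

```python
from collections import Counter
from datetime import date

# Hypothetical word lists for illustration only; a production system would
# use a trained NLP model rather than fixed keywords.
POSITIVE_WORDS = {"love", "great", "delicious", "tasty"}
NEGATIVE_WORDS = {"gross", "bland", "awful", "grainy"}


def classify(text: str) -> str:
    """Label a post 'positive', 'negative', or 'neutral' by lexicon hits."""
    # Lowercase each word and strip trailing punctuation before matching.
    words = {w.strip(".,!?").lower() for w in text.split()}
    pos = len(words & POSITIVE_WORDS)
    neg = len(words & NEGATIVE_WORDS)
    if pos > neg:
        return "positive"
    if neg > pos:
        return "negative"
    return "neutral"


def daily_net_sentiment(posts: list[tuple[date, str]]) -> dict[date, int]:
    """Return positive-minus-negative post counts for each day, in date order."""
    tallies: dict[date, Counter] = {}
    for day, text in posts:
        tallies.setdefault(day, Counter())[classify(text)] += 1
    return {day: c["positive"] - c["negative"] for day, c in sorted(tallies.items())}


# Invented posts on arbitrary dates, purely to exercise the functions.
posts = [
    (date(2017, 9, 12), "The new queso is grainy and bland"),
    (date(2017, 9, 12), "Love the queso, great addition"),
    (date(2017, 9, 13), "Queso was awful"),
]
print(daily_net_sentiment(posts))
```

A persistently negative daily value is the kind of signal the Chipotle example describes. Deciding whether that signal actually predicts financial results is the harder modeling problem that the machine learning work addresses.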

Recommendations and actions

To ensure the successful implementation of machine learning, RBC should continue taking the following actions:

1. Increase top management’s understanding of data analytics. Successful implementation of machine learning requires thoughtful consideration of the organizational and cultural changes needed to support it [5]. When top management understands the concept and value of big data initiatives, executives can invest early and wisely in such projects. This widens the competitive moat between the company and its rivals.

2. Increase integration between the data science team and equity research analysts. Most advanced analytics projects devote a significant amount of time to identifying and curating the data that feeds machine learning algorithms [6]. Machine learning platforms that rely on restrictive data inputs can be limited in their effectiveness. It is therefore essential to integrate the data science and research teams and to assess the critical data components of the equity research process. That way, the machine learning system remains flexible enough to incorporate data sources from different systems.

Considerations for RBC moving forward

How should the company transition its workforce to better integrate machine learning? Machine learning platforms are taking over manual data extraction tasks previously completed by junior research staff, so how should RBC reallocate its resources? Should it shrink the research workforce to cut costs? Or will machine learning free up time for research teams to do value-adding analyses they previously lacked the capacity for?

(Word count: 796)

Sources

[1] Alexander, D. (2018, January 28). RBC’s push into artificial intelligence reveals link between social media uproars and stock prices. Financial Post. https://business.financialpost.com/news/fp-street/rbcs-push-into-ai-uncovers-chipotles-worst-queso-scenario.

[2] Giamouridis, D. (2017). Systematic investment strategies. Financial Analysts Journal, 73(4), 10-14. Retrieved from http://search.proquest.com.ezp-prod1.hul.harvard.edu/docview/1989831861?accountid=11311.

[3] Taylor, K. (2018). Chipotle’s CEO says the chain is still trying to fix its queso, which has been slammed as a ‘crime against cheese’ and ‘dumpster juice’. Business Insider. Retrieved 12 November 2018, from https://www.businessinsider.com/chipotle-queso-changes-to-come-ceo-says-2018-5.

[4] Roche, J. (2018). RBC cuts Chipotle price target. Yahoo Finance. https://finance.yahoo.com/news/rbc-slashes-chipotles-price-target-expects-new-queso-dip-flop-135035770.html.

[5] Halaweh, M., & El Massry, A. (2015). Conceptual model for successful implementation of big data in organizations. Journal of International Technology and Information Management, 24(2), 21-II. Retrieved from http://search.proquest.com.ezp-prod1.hul.harvard.edu/docview/1758648727?accountid=11311.

[6] Fernandez, D. (2018). Is artificial intelligence ready for financial compliance? Bloomberg. https://www.bloomberg.com/professional/blog/artificial-intelligence-ready-financial-compliance/.

Definitely interesting implications for such a speed-intensive industry. My admittedly uninformed view (I’m not from finance) is that RBC will downsize its research staff to cut costs while still improving speed. I’d think they might try to do more value-add work, but I don’t understand the business model well enough. How does a company actually monetize the fact that its reports are better?

Interesting topic for equity research and technology! I would argue that research staff could 1) cover more companies (such as small- and mid-caps) and industries, and 2) spend more time on areas that technology cannot handle, such as interviews with management, supply chains, etc. As the number of investors with different risk profiles grows, broader coverage and deep insights that ordinary investors cannot obtain will be valued. The best thing about AI, machine learning, etc. is that they drastically cut the time needed to prepare data and information. I believe RBC could use this type of technology not only in its research division but also in other divisions. Investment banking is one example, where large amounts of data and information need to be gathered and analyzed. I heard that big banks such as JP Morgan are thinking about this type of transformation. Their aim is to free up associates from manual work and data crunching and let them spend more time with clients. I believe this type of transformation would also help the industry expand its potential talent pool.