Hurry Up and Wait: Bringing Machine Learning to the US Department of Homeland Security

The US Department of Homeland Security is exploring machine learning. The outcome could be increased security and shorter lines, but what are the risks?

Imagine arriving at a major US airport 30 minutes before boarding, checking a bag, proceeding through security, and reaching the gate with time to spare for a coffee. This scenario may not be out of reach once we consider the potential impact of machine learning on airport check-in and security processes.

In 2017, US airline industry net operating revenues exceeded $175 billion [1]. Air travel is expected to grow in the coming years, and the industry is fiercely competitive. One area in which the major carriers are investing is machine learning, with the aim of improving customer service, logistics, and bag checking [2]. This competition is likely to drive rapid innovation and implementation, leaving government-run security screening as the most likely bottleneck between the airport entrance and your aircraft seat.

Current Security Screening Process

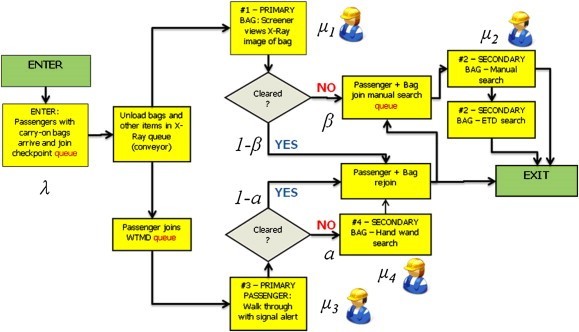

The Transportation Security Administration (TSA), a component of the Department of Homeland Security (DHS), is responsible for security screening at US airports. At each checkpoint, the TSA deploys several officers along with equipment (x-ray machines, metal detectors, cameras, etc.) to screen every passenger. Figure 1 shows the process flow for a single passenger passing through a TSA security checkpoint, beginning after the passenger's ID and boarding pass have been checked.

Progress

Fortunately, this process is ripe for machine learning, and DHS has taken notice. In 2017, DHS teamed up with Google to launch a $1.5 million contest to build algorithms that automatically identify concealed items at airport security checkpoints [4]. DHS is also working with technology companies to develop computed tomography (CT) systems that automatically identify items hidden in luggage, using neural networks that can adapt to emerging threats [4].

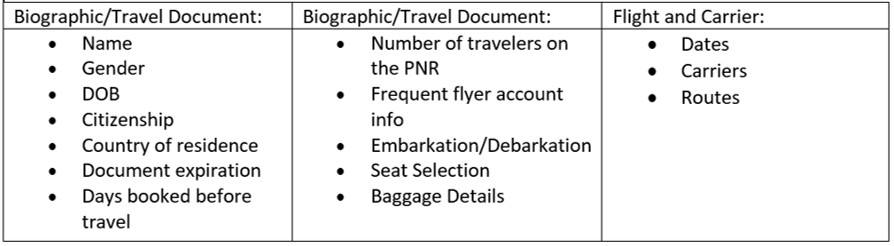

DHS has even developed an open-source web application, the Global Travel Assessment System (GTAS). Developed in response to UN Security Council Resolution 2178, GTAS is meant to improve "Global Security by using industry-standard Advance Passenger Information (API) to screen commercial air travelers" [5]. Specific data used for algorithm targeting includes:

Concerns

As with any emerging technology that affects human safety, there are concerns about implementation. Today's artificial intelligence is statistically impressive but often unreliable in individual cases. Machines make mistakes that a human would be unlikely to make (recall IBM Watson answering "Toronto" to a question about US cities on the game show Jeopardy!). On a game show, such mistakes are amusing; at an airport security checkpoint, they are not.

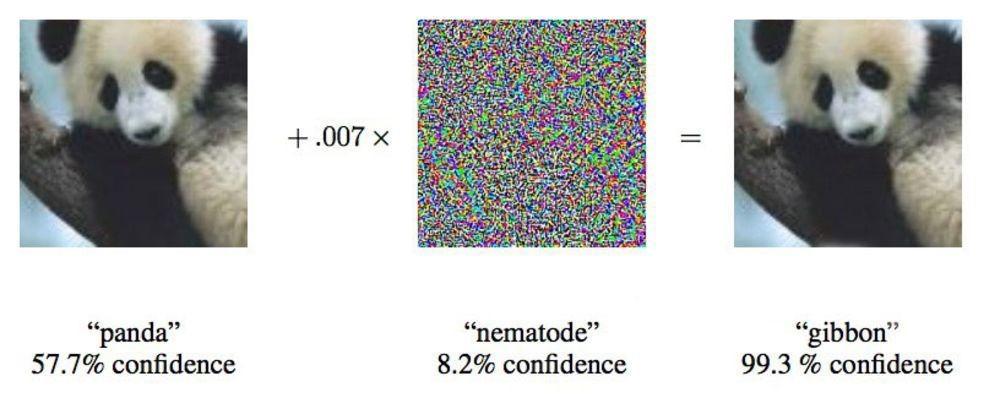

Current machine learning technology is also susceptible to "targeted distortion" [7]. In Figure 2, an image-recognition system correctly identified the image on the left as a panda. However, an engineer with knowledge of the algorithm overlaid the panda image with the center image, a small, deliberately computed perturbation. The result, on the right, looks identical to the human eye but caused the algorithm to misidentify the image as a gibbon.
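To make the mechanism concrete, here is a minimal sketch of one well-known way to compute such a distortion, the fast gradient sign method (FGSM), applied to a toy logistic-regression "panda vs. gibbon" classifier rather than to the deep network behind Figure 2. The weights, the 50,000-pixel grey "image", and the step size `eps = 0.007` are hypothetical values chosen for illustration. FGSM nudges every pixel by the same tiny amount in whichever direction most increases the model's loss; because those nudges add up across thousands of pixels, an imperceptible change can flip the prediction.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def logit(w: list[float], x: list[float], b: float = 0.0) -> float:
    """Toy classifier score: positive means "panda" (class 1), negative means "gibbon" (class 0)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: list[float], x: list[float], y: int, eps: float, b: float = 0.0) -> list[float]:
    """Fast gradient sign method for a logistic-regression model.

    With p = sigmoid(w.x + b) and log-loss L = -[y*log(p) + (1 - y)*log(1 - p)],
    the gradient of L with respect to the input x is (p - y) * w.
    Each pixel moves by eps in the direction that increases L, then is clipped to [0, 1].
    """
    p = sigmoid(logit(w, x, b))
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, grad)]


# Hypothetical 50,000-pixel image and weights, chosen so the clean image scores about +1.0.
n = 50_000
w = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
x = [0.502 if i % 2 == 0 else 0.498 for i in range(n)]

p_clean = sigmoid(logit(w, x))
x_adv = fgsm(w, x, y=1, eps=0.007)
p_adv = sigmoid(logit(w, x_adv))
max_change = max(abs(a - c) for a, c in zip(x_adv, x))

print(round(p_clean, 2))     # 0.73 -> classified as "panda"
print(round(p_adv, 2))       # 0.08 -> classified as "gibbon"
print(round(max_change, 3))  # 0.007
```

No pixel moves by more than 0.007 on a 0-to-1 scale, yet the model's panda probability falls from about 0.73 to about 0.08. Computing the perturbation this way requires the model's gradients (or a good surrogate model), which is why the attacker's "knowledge of the algorithm" matters.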

Moving Forward

DHS should implement machine learning at airport security checkpoints to improve the current standard of ID matching and hidden-object detection while reducing the time it takes passengers to clear security screening. Even a small reduction in the false-positive rate of passenger and bag screening would substantially reduce the burden on both passengers and staff.
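The claim about false positives can be checked with back-of-the-envelope arithmetic. The sketch below uses hypothetical round numbers (2 million passengers per day, 10% of innocent passengers flagged for secondary screening, 5 minutes per secondary check); none of these figures come from TSA or from the sources cited here. It compares the daily person-hours spent on unnecessary secondary checks before and after a one-percentage-point drop in the false-positive rate.

```python
def secondary_screening_hours(passengers: int, false_positive_rate: float, minutes_per_check: float) -> float:
    """Person-hours per day spent re-screening innocent passengers flagged by the system."""
    return passengers * false_positive_rate * minutes_per_check / 60


# Hypothetical inputs for illustration only (not TSA statistics).
PASSENGERS_PER_DAY = 2_000_000
MINUTES_PER_SECONDARY_CHECK = 5

baseline = secondary_screening_hours(PASSENGERS_PER_DAY, 0.10, MINUTES_PER_SECONDARY_CHECK)
improved = secondary_screening_hours(PASSENGERS_PER_DAY, 0.09, MINUTES_PER_SECONDARY_CHECK)
saved = baseline - improved

# 16,667 h/day before, 15,000 h/day after, 1,667 h/day saved
print(f"{baseline:,.0f} h/day before, {improved:,.0f} h/day after, {saved:,.0f} h/day saved")
```

Under these assumptions, a single percentage point of false positives is worth roughly 1,700 hours per day, and each of those hours is spent by a passenger and an officer alike.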

To reduce public safety risk, DHS should begin implementation with a prescreened population, such as travelers enrolled in TSA PreCheck and Global Entry. Human monitoring and quality checks would further reduce the initial risk. As the algorithm adapts and trust develops, its use could expand to the entire air-traveling population. In the long term, DHS should consider applying machine learning to other areas of airport security, such as suspicious-behavior recognition and demand prediction to optimize staff allocation.
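One way to picture this phased rollout with human monitoring is a confidence-threshold rule: the model may clear only prescreened travelers it is highly confident about, everyone else goes to an officer, and a random share of machine-cleared travelers is re-checked by a human. The sketch below is a hypothetical illustration; the `ScreeningResult` fields, the 0.05 threshold, and the 50% audit rate are assumptions, not DHS policy.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ScreeningResult:
    passenger_id: str
    prescreened: bool    # e.g. enrolled in TSA PreCheck or Global Entry
    threat_score: float  # hypothetical model output in [0, 1]; higher means more suspicious


def route(result: ScreeningResult, clear_below: float = 0.05) -> str:
    """Hypothetical pilot policy: the model may only clear prescreened, low-score passengers."""
    if result.prescreened and result.threat_score < clear_below:
        return "auto_clear"
    return "human_review"  # everyone else follows today's officer-led process


def audit_sample(cleared_ids: list[str], rate: float, seed: int) -> list[str]:
    """Randomly pick a share of machine-cleared passengers for a human quality check."""
    k = math.ceil(len(cleared_ids) * rate)
    return random.Random(seed).sample(cleared_ids, k)


batch = [
    ScreeningResult("A1", prescreened=True, threat_score=0.01),
    ScreeningResult("A2", prescreened=True, threat_score=0.20),
    ScreeningResult("B1", prescreened=False, threat_score=0.01),
    ScreeningResult("A3", prescreened=True, threat_score=0.03),
]
decisions = {r.passenger_id: route(r) for r in batch}
cleared = [pid for pid, d in decisions.items() if d == "auto_clear"]
audited = audit_sample(cleared, rate=0.5, seed=7)

print(decisions)     # {'A1': 'auto_clear', 'A2': 'human_review', 'B1': 'human_review', 'A3': 'auto_clear'}
print(len(audited))  # 1
```

In this framing, widening the rollout could amount to relaxing the `prescreened` condition or raising `clear_below` as audits build confidence, and lowering the audit rate only once the audited error rate justifies it.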

Questions Remain

- What forms of machine learning manipulation are possible, and are they practical enough to pose a credible security risk?

- Referencing Table 1, how do we keep human bias from creeping into the model?


Sources:

[1] Bureau of Transportation Statistics, "2017 Annual and 4th Quarter U.S. Airline Financial Data," https://www.bts.gov/newsroom/2017-annual-and-4th-quarter-us-airline-financial-data, accessed Nov 2018.

[2] Tech Emergence, “How the 4 Largest Airlines Use Artificial Intelligence,” https://www.techemergence.com/airlines-use-artificial-intelligence/, accessed Nov 2018.

[3] Kelly Leone and Rongfang (Rachel) Liu, "Improving airport security screening checkpoint operations in the US via paced system design," Journal of Air Transport Management, Vol. 17, No. 2 (2011): 62-67.

[4] The New York Times, “Uncle Sam Wants Your Deep Neural Networks,” https://www.nytimes.com/2017/06/22/technology/homeland-security-artificial-intelligence-neural-network.html, accessed Nov 2018.

[5] Global Travel Assessment System, “What is GTAS?,” https://us-cbp.github.io/GTAS/, accessed Nov 2018.

[6] Department of Homeland Security, “News Release: DHS Awards Virginia Company $200K to Begin Automated Machine Learning Prototype Test,” https://www.dhs.gov/science-and-technology/news/2018/08/20/news-release-dhs-awards-va-company-200k-begin-automated, accessed Nov 2018.

[7] Defense Advanced Research Projects Agency, “DARPA Perspective on AI,” https://www.darpa.mil/about-us/darpa-perspective-on-ai, accessed Nov 2018.

Interesting read, Romaan. One of the tricky things about applying machine learning or algorithm-driven approaches to critical activities like catching bad actors is deciding who's to blame when it goes wrong. We all know that humans are fallible, but even if a machine performs at a statistically better level, it's easy to ask "would a human have caught that?" when things go wrong. I do believe that using machine learning in such a scenario avoids much of the bias we're seeing in other applications of tech in homeland security. Solving manipulation is all about control: how can you ensure that the engineers building these protocols are reviewed enough to stop bad actors from slipping in?

Romaan, I echo your excitement for a faster TSA process. To respond to your question about "potential forms of machine learning manipulation," I would highlight that data for ID matching can be incredibly difficult to obtain. Every state has a different ID, and states update their IDs every 5-10 years on different schedules. The onus is on each state to send its IDs to DHS, but states rarely do this. So, considering just the US, the algorithm needs data on every valid ID from every state, and it must be continually updated to learn the features of new forms of identification. Now expand that to the rest of the world! A major concern, then, is not just having the technology within DHS, but getting buy-in from other ID-issuing authorities to provide DHS with a data set robust enough to build upon. This could mean we are farther than we would like from a 30-minute arrival at the airport.

Nice article! Another application of ML I've heard of in a security context is algorithmic processing of would-be passengers' facial expressions to detect nervousness, aggression, or other signs that they may be in the process of committing a crime. I wonder if this sort of approach may be more readily adopted, as it could (at least initially) run in parallel with current bag and document screening procedures, providing an extra layer of security rather than replacing current practices. AI bag screening could likewise run in parallel with a human-backed check, which also seems to me a more likely path to market than an immediate replacement. Unfortunately, both approaches, while perhaps decreasing false negatives and improving safety, do nothing to shorten our wait times in the TSA line. Guess I'll renew my PreCheck for now 🙂

Great article! The examples of error here are particularly striking in the world of security. In some ways, it is as though humans may be more forgiving of human error than of computer error, because the former is more relatable. As always, the answer may be a two-pronged system, though the issues of bias that you raise are also a cause for concern. The question I am left with is whether security should be a leader or a follower: should security be where we test this technology, or where it only goes once it is well proven?