Dream On: An Exploration of Neural Networks Turned Inside Out

What if computers could dream? In fact, they can. Google’s groundbreaking DeepDream software is turning AI neural networks inside out to understand how computers think.

Human consciousness is likely one of the most significant marvels of nature in the entire universe. With all our technology, scientific advancement, and ever-increasing computational power, we still understand remarkably little about how our own minds work. In the quest to deepen that understanding, the fields of machine learning and artificial intelligence are growing by leaps and bounds. They have already enabled things that seemed like magic a few years ago: Alexa can tell you jokes, AI chatbots can answer your questions on Facebook Messenger, and Google’s neural-network image recognition algorithms can recognize pictures of virtually anything. In a fascinating twist, however, the inner workings of neural networks are so complicated that even the engineers who build them understand surprisingly little about why they work [1]. While pondering this puzzle, Google engineers had a crazy idea: they decided to let the algorithm dream.

Google took its neural-network image recognition software and programmed it to run backward, a technique now known as DeepDream. Artificial neural networks are trained on millions of examples, gradually adjusting their parameters until they produce the desired classification [1]. For example, a network might be shown millions of pictures of dogs until it can recognize dogs with a high degree of accuracy. Because the learning process is so complex, however, what actually happens inside the network is poorly understood. To understand this process better, Google engineers decided to turn the operation inside out.
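To make “gradually adjusting network parameters” concrete, here is a minimal sketch of training, assuming the simplest possible “network”: a single logistic unit fit by stochastic gradient descent. The feature names (`floppy_ears`, `whiskers`), the toy data, and the function names are hypothetical illustrations, not anything from Google’s software, which trains deep convolutional networks with millions of parameters.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability that input x belongs to the class (e.g. "dog")."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(examples, epochs=200, lr=0.5):
    """Adjust weights w and bias b, one example at a time, to fit the labels.

    examples: list of (features, label) pairs; label is 1 ("dog") or 0 ("not dog").
    """
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in examples:
            err = predict(w, b, x) - y  # gradient of the log-loss w.r.t. the raw score
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

# Hypothetical toy features: [floppy_ears, whiskers], each in [0, 1].
examples = [([1.0, 0.0], 1), ([0.9, 0.1], 1), ([0.0, 1.0], 0), ([0.1, 0.9], 0)]
w, b = train(examples)
print(predict(w, b, [1.0, 0.0]), predict(w, b, [0.0, 1.0]))
```

After training, the dog-like input scores close to 1 and the cat-like input close to 0. The point to notice is that training changes the weights while the input pictures stay fixed.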

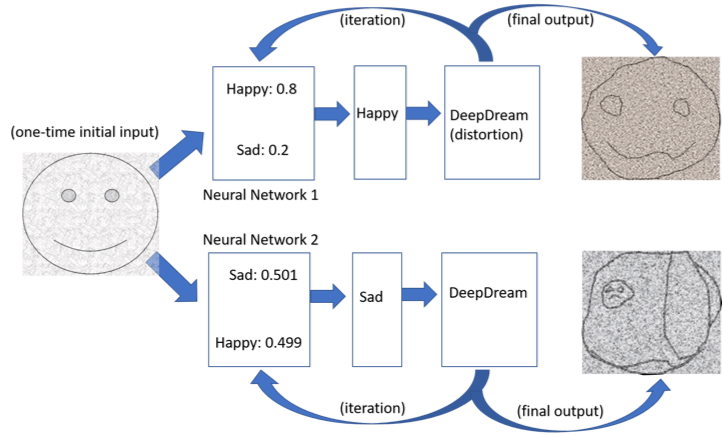

Initially, engineers fed the neural network an image of an arbitrary scene and let it look for patterns it recognized. Once the network detected something, they instructed it to modify the original image so that it matched that detection even more strongly. Repeating this cycle many times produced psychedelic images “dreamed” by the algorithm: essentially, manifestations of what the algorithm understood the classification in question to be [2].
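The “enhance what you detect” loop can be sketched as gradient ascent on the image instead of on the weights. This is a minimal sketch under loud assumptions: the “network” is a single frozen ReLU layer with made-up weights, the “image” is a short list of pixel values, and the quantity being amplified is half the sum of squared activations. That objective and the step scaled by the mean absolute gradient are similar in spirit to Google’s published DeepDream notebook, but every name here (`dream`, `step_size`, and so on) is hypothetical.

```python
def relu(z: float) -> float:
    return max(0.0, z)

def activations(W, x):
    """Outputs of one frozen ReLU layer: a_j = max(0, W_j · x)."""
    return [relu(sum(w * xi for w, xi in zip(row, x))) for row in W]

def objective(W, x):
    """How strongly the layer 'sees' its patterns: 0.5 * sum of squared activations."""
    return 0.5 * sum(a * a for a in activations(W, x))

def gradient(W, x):
    """d(objective)/dx = sum_j a_j * W_j; inactive units (a_j = 0) contribute nothing."""
    g = [0.0] * len(x)
    for a_j, row in zip(activations(W, x), W):
        for i, w in enumerate(row):
            g[i] += a_j * w
    return g

def dream(W, image, steps=20, step_size=0.05):
    """Gradient *ascent* on the pixels: the weights stay frozen, the image changes."""
    x = list(image)
    for _ in range(steps):
        g = gradient(W, x)
        scale = sum(abs(v) for v in g) / len(g) + 1e-8  # normalize by mean |gradient|
        x = [xi + step_size * gi / scale for xi, gi in zip(x, g)]
    return x

# Hypothetical frozen weights: two "feature detectors" over a 4-pixel image.
W = [[1.0, -0.5, 0.0, 0.5],
     [-1.0, 0.0, 1.0, 0.0]]
image = [0.6, 0.2, 0.3, 0.4]
dreamed = dream(W, image)
print(objective(W, image), "->", objective(W, dreamed))
```

The objective grows after dreaming: the image has been nudged toward whatever the first detector already responded to, which is the feedback loop in miniature. Real DeepDream code adds tricks such as random jitter, multi-scale “octaves,” and clipping pixels to a valid range, all omitted here.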

Figure 1: “Dreaming” Process

Source: [2]

Figure 2: 50 iterations of “dreaming” with an algorithm trained on dogs, given an image of jellyfish

Source: [3]

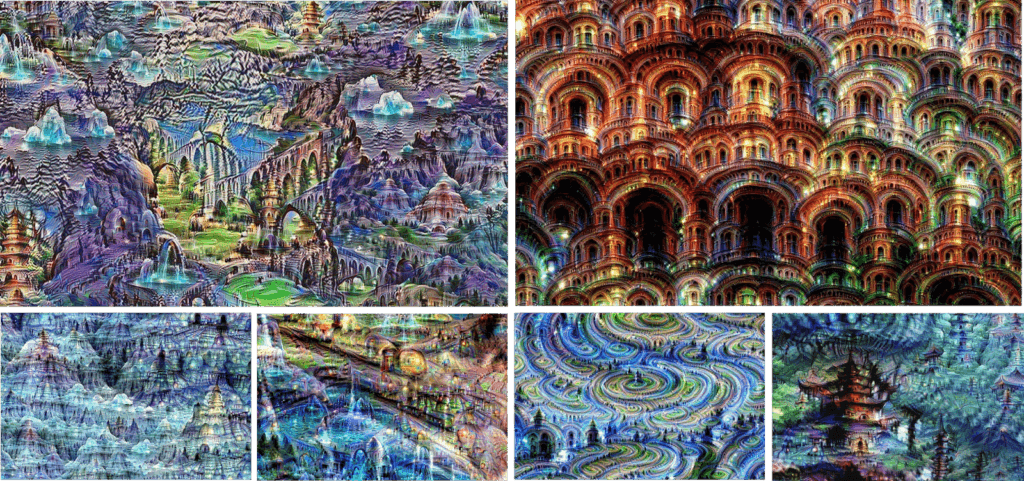

Taking it a step further, Google then ran its neural network on pure white noise. Because such images contain no patterns at all, the algorithm was free to dream, much as a human does when looking at clouds. When we see a shape similar to something we recognize, we might say a cloud looks like a rabbit or a car. This abstract generalization forms in the depths of our consciousness [4]. By replicating this process with DeepDream, Google is gaining insight into how its algorithm thinks.

Figure 3: Images generated from random noise

Source: [1]

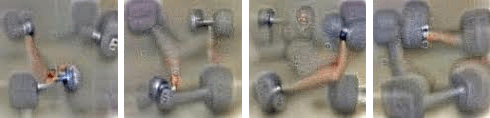

In some cases, the results are surprising! According to a Google researcher, “The problem is that the knowledge gets baked into the network, rather than into us” [4]. In one particularly interesting case, a network “dreaming” of dumbbells produced images of dumbbells with arms attached! Since the network had likely rarely seen a dumbbell without an arm lifting it, it associated the arm with the object itself [1]. Examples like this show the power of this analysis: Google has found a way to look inside the mind of its creation and see how a program understands reality. In this case, the algorithm failed to separate the weight from the weight-lifter!
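Why would a network bake the arm into the dumbbell? A minimal sketch, assuming a toy logistic classifier with two hypothetical binary features, `plates` and `arm`, trained on data where the two always appear together. Because the training data never shows one without the other, gradient descent has no way to tell which feature actually defines a dumbbell, so it credits both equally. This is an illustration of the co-occurrence effect, not a model of Google’s network.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def fit(data, epochs=500, lr=0.5):
    """Batch gradient descent for a logistic classifier; returns (weights, bias)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw = [gw[0] + err * x[0], gw[1] + err * x[1]]
            gb += err
        w = [w[0] - lr * gw[0] / len(data), w[1] - lr * gw[1] / len(data)]
        b -= lr * gb / len(data)
    return w, b

# Hypothetical features: [plates, arm]. Every dumbbell photo also shows an arm.
data = [([1.0, 1.0], 1)] * 5 + [([0.0, 0.0], 0)] * 5
w, b = fit(data)

plates_only = sigmoid(w[0] + b)  # a dumbbell lying on the floor
arm_only = sigmoid(w[1] + b)     # an arm with nothing in its hand
print(w, plates_only, arm_only)
```

The two weights come out exactly equal, so a bare arm gets precisely the same “dumbbell” score as an unattended dumbbell. Adding training photos of bare arms or of dumbbells on their own is what would let the model tell them apart.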

Figure 4: Dumbbells with arms attached

Source: [1]

The implications of this technology are profound. In the short term, Google will be able to leverage this type of processing to improve its existing image recognition software and deepen its understanding of how neural networks learn and understand reality. This will benefit consumers as AI products become increasingly accurate and begin to truly understand the world in depth, regardless of context [4]. The longer-term implications are even more exciting, however. Eventually, this understanding may lead to predictive software: algorithms may learn to fill in missing pieces because they understand what is missing [2]. This opens up a world of applications, from automated photo editing to the mythical “zoom and enhance” of sci-fi movies [3]. Some even speculate that DeepDream could generate testable predictions about the pathogenesis of psychosis by showing how false perceptions of reality arise in the mind [5]. Personally, I would love to see Google explore applications in expressive music generation, creative art, and even storytelling over the next several years.

DeepDream is a fascinating step toward understanding how machine learning algorithms perceive reality. Hopefully, this understanding will eventually shed light on the great mystery of our own cognition. However, many questions remain. Do our minds actually function in a meaningfully similar way to neural networks? If machines eventually “think” and “understand,” will they understand in a way similar enough to ours to be recognizable to our organic minds at all? How do we separate machine learning insights from garbage when the output may be so profound that we do not even recognize its brilliance? I don’t know the answers to these questions, but I certainly dream of a marvelous future.

Word Count: 798

Citations:

[1] Mordvintsev, Alexander, et al. “Inceptionism: Going Deeper into Neural Networks.” Google AI Blog, June 17, 2015. https://ai.googleblog.com/2015/06/inceptionism-going-deeper-into-neural.html. Accessed November 2018.

[2] Wilkin, Henry. “Psychosis, Dreams, and Memory in AI.” Special Edition on Artificial Intelligence (blog), August 28, 2017. http://sitn.hms.harvard.edu/flash/2017/psychosis-dreams-memory-ai/. Accessed November 2018.

[3] Wikipedia. “DeepDream.” https://en.wikipedia.org/wiki/DeepDream. Accessed November 2018.

[4] Castelvecchi, D. “Can We Open the Black Box of AI?” Nature News, October 5, 2016. Nature 538(7623), p. 20.

[5] Keshavan, Matcheri S., et al. “Deep Dreaming, Aberrant Salience and Psychosis: Connecting the Dots by Artificial Neural Networks.” Schizophrenia Research, vol. 188, pp. 178–181.

This article is very well written and gives me a technical picture of where machine learning is headed.

I believe Google’s DeepDream research is very meaningful since it tries to mimic the way people think. But I think this is only the first step of the endeavor. Because machines today can only process the information they are given and “think” using that information, they cannot decide what they are doing or find the “purpose” of their “thinking.” Self-consciousness is crucial to developing a real AI. I’m looking forward to seeing more advanced technology that could address this in the near future.

Thanks for an entertaining read! I think an important clarification is that the software must still be trained first (through supervised learning on labeled images) to categorize images or define traits as it chooses, before the process can be run backward. This creates an interesting dynamic in which trends in the initial data set may bias how the program classifies objects on its own. For example, having come up with its own independent criteria for what makes an animal “dog-like” or “cat-like,” the program might classify a Dalmatian as a cat because of its spots. Perhaps that was a bad example, but it suggests this software could be used to expose visual biases in existing data sets, since those biases are what would surface when white noise is fed through and the machine iterates on these traits.

This is a really interesting article. Machine learning is really changing the way a lot of industries do business. I don’t know if Google’s DeepDream will ever be able to mimic exactly how people think, but I wonder whether and how it could impact the art industry. “…the Deep Dream auction raised $97,600 for Gray Area Foundation, with Akten’s working achieving the highest sale price of $8,000 – respectable figures for a small gallery sale, yet microscopic in comparison to Google’s parent company, Alphabet which has a market capitalization of around $550bn.” (https://www.theguardian.com/artanddesign/2016/mar/28/google-deep-dream-art)

I don’t believe it will ever replace fine, human-made creative art, but could it introduce an entirely different style of art? I also wonder what other applications DeepDream could have in other businesses.

Examining neural nets is an interesting way for humans to learn about themselves through a tool we created. While machine learning has many other applications, this specific use is a highly advanced form of self-discovery and reflection. The questions raised at the end of the article are important and reveal significant limitations. Given the physical differences between neural nets and our own brains, it may be impossible for machines to shed light on something so fundamentally different and subject to organic restrictions. For example, electrochemical information processing imposes limits (e.g., on how quickly humans can chemically receive and interpret information) that machines do not have. The brain also changes as we age, starting with ~100 billion neurons in childhood and eventually declining to ~86 billion [1]. Nor would a machine be affected by the physical design of the human brain, including the degradation of information (e.g., memory loss and imperfect recall). These and other differences leave me somewhat skeptical that machine learning will truly shed light on how the human brain functions.

[1] Tim Dettmers, et al. “The Brain vs. Deep Learning vs. Singularity.” Tim Dettmers, 10 Feb. 2016, timdettmers.com/2015/07/27/brain-vs-deep-learning-singularity/.

It is interesting to consider that neural networks are inspired by the complex biological processes underlying human thought and perception. These machine learning models mimic the way biological neurons process inputs within their receptive fields. Each synapse of a neuron applies a specific weight to its input, and an activation function then determines whether the aggregated input is sufficient to activate the neuron and propagate the signal. Science has progressed to the point that we understand the underlying mechanisms of this biological process, but the sheer number of neurons and synapses in the system makes it difficult to fully understand how the pieces come together.
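The weigh, sum, and threshold process described in this comment is what a single artificial neuron computes. A minimal sketch, with made-up weights and a hypothetical `neuron` helper: a hard step activation plays the role of the fire/don’t-fire decision, and the smooth sigmoid is the differentiable variant that trainable networks typically use instead.

```python
import math

def neuron(inputs, weights, bias, activation="step"):
    """One artificial neuron: weight each input, sum them, then apply an activation."""
    # Aggregated input: each weight loosely plays the role of a synapse's strength.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    if activation == "step":
        # Fire (1.0) only if the aggregated input clears the threshold.
        return 1.0 if z > 0 else 0.0
    if activation == "sigmoid":
        # Smooth, differentiable stand-in for the threshold, used for training.
        return 1.0 / (1.0 + math.exp(-z))
    raise ValueError(f"unknown activation: {activation}")

weights = [0.6, -0.4, 0.3]  # hypothetical synaptic weights
bias = -0.5                 # shifts the firing threshold
print(neuron([1.0, 0.0, 1.0], weights, bias))  # 1.0: excitatory inputs win, it fires
print(neuron([0.0, 1.0, 0.0], weights, bias))  # 0.0: inhibitory input, it stays silent
```

A real network stacks millions of these units in layers, which is exactly why, as the comment notes, the individual mechanism is simple while the whole is hard to interpret.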

I believe artificial neural networks do emulate the basic processes of human thought, but our current understanding of the human brain is so limited that I am not surprised that artificial neural networks are just as esoteric.