Applications of Machine Learning in the US Criminal Justice System

The criminal justice system ostensibly protects all Americans and enforces laws in our communities to allow all “law-abiding” individuals to flourish equally. But in practice, its impacts are far from equal. There is a broad base of evidence demonstrating racial bias in how the criminal justice system is applied today and has been applied throughout the history of our country [1]. From the drug war and “Stop-and-Frisk” policies to the use of the death penalty, the criminal justice system produces disparate outcomes for racial minorities.

Human judgement has its limitations, and we are all affected by our own biases. By augmenting human decision making with “unbiased” machine learning algorithms, the hope is that we can make the application of the criminal justice system more fair and equitable [2]. The need for improvement is widely recognized and the role that machine learning could play in that process is great, but experimenting with processes where human lives are concerned is fraught with difficulty.

To begin addressing this issue, jurisdictions around the country have started to experiment with how machine learning can inform and help solve some of the most complex problems in the criminal justice process. The development of these technologies has largely been outsourced to or co-developed with the private sector, with the resulting tools then used by law enforcement or court systems [3][4].

One example is the COMPAS system, which has been used in pre-trial hearings in Broward County, Florida. COMPAS uses a 137-question questionnaire to assign a “risk score” to individuals. Judges then use this risk score in pre-trial hearings to determine whether an individual can be released or needs to be held in jail until trial [4]. The motivation behind this software is clear: if machine learning algorithms can accurately predict who is likely to commit additional crimes, taking these decisions out of the hands of humans and their biases, the criminal justice system might be applied more fairly. And by keeping people who are unlikely to commit future crimes out of jail, we can reduce the overall cost of our penal system [4].

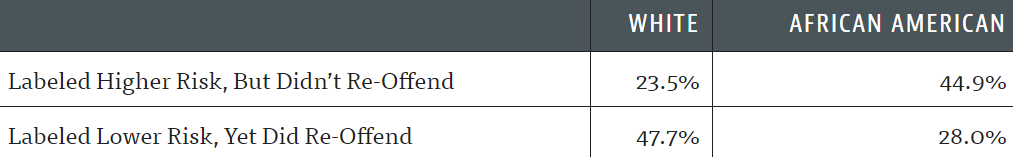

This is, however, predicated on the ability of the algorithm to get this process right. An investigation of the system found that the program produced significant racial disparities: the formula was almost twice as likely to falsely flag black defendants as future criminals, and white defendants were more likely to be mislabeled as low risk [4][5]. The system was designed to eliminate human bias using machines, but its outcomes perpetuated the same biases we see today.
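The disparity described above is a gap in error rates between groups: how often people who did not reoffend were flagged as high risk (false positives), and how often people who did reoffend were labeled low risk (false negatives). A minimal sketch of how such an audit might be computed follows. The function name, record layout, and group labels are hypothetical, and the counts are made-up toy numbers chosen only for illustration, not figures from the ProPublica investigation.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    `records` is an iterable of (group, flagged_high_risk, reoffended)
    tuples. This layout is an assumption made for this sketch.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, flagged, reoffended in records:
        if flagged and not reoffended:
            counts[group]["fp"] += 1  # flagged, but did not reoffend
        elif not flagged and not reoffended:
            counts[group]["tn"] += 1
        elif not flagged and reoffended:
            counts[group]["fn"] += 1  # labeled low risk, but reoffended
        else:
            counts[group]["tp"] += 1

    rates = {}
    for group, c in counts.items():
        did_not_reoffend = c["fp"] + c["tn"]
        reoffended = c["fn"] + c["tp"]
        rates[group] = {
            "false_positive_rate": (
                c["fp"] / did_not_reoffend if did_not_reoffend else None
            ),
            "false_negative_rate": (
                c["fn"] / reoffended if reoffended else None
            ),
        }
    return rates


def make_records(group, fp, tn, fn, tp):
    """Build toy records for one group from confusion-matrix counts."""
    return (
        [(group, True, False)] * fp
        + [(group, False, False)] * tn
        + [(group, False, True)] * fn
        + [(group, True, True)] * tp
    )


# Made-up counts for two hypothetical groups, "A" and "B".
toy_records = make_records("A", fp=4, tn=6, fn=3, tp=7) + make_records(
    "B", fp=2, tn=8, fn=5, tp=5
)
rates = error_rates_by_group(toy_records)

for group, r in sorted(rates.items()):
    print(group, r)
```

In this toy data, group A is wrongly flagged twice as often as group B, while group B is more often wrongly labeled low risk. A real audit would need actual outcome data and a careful definition of "reoffended", which is itself a contested measurement.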

Moving forward, there are two important steps public organizations can take to improve the use of machine learning in the criminal justice system.

The first step is promoting transparency. Today, the algorithms in use, like COMPAS, are largely proprietary, with no transparency into how they function: they work as “black boxes” [3]. This makes it impossible to audit where the logic in the system fails when it produces undesired outcomes. To improve how these algorithms work, they need to be open to critique [2].

The second step these organizations should take is the democratization of criminal justice data. While much of the data is in theory available to the public, creating an aggregated database of criminal outcomes and risk factors on which these algorithms can be trained and evaluated would help accelerate their development [2].

Many would argue that throughout its history our society has failed to enforce our criminal laws fairly and without bias. There seems to be an opportunity to help eliminate bias by replacing, or at least aiding, human decision making with algorithms, but this is by no means a straightforward process and there are important consequences to consider.

Here are a couple of additional questions worth considering as we move forward in this domain:

- How do you design and employ a machine learning program in a realm that has been historically riddled with racial and socio-economic bias? How do you get data that isn’t itself the product of a racially and socio-economically biased system?

- In decisions with such high human consequences, what are the ethical considerations for experimenting with and developing these programs before knowing how effective they will be?

[1] R. Balko, “There’s overwhelming evidence that the criminal-justice system is racist. Here’s the proof.” The Washington Post, 18 September, 2018; https://www.washingtonpost.com/news/opinions/wp/2018/09/18/theres-overwhelming-evidence-that-the-criminal-justice-system-is-racist-heres-the-proof/?noredirect=on&utm_term=.b574d28c2036

[2] C. Watney, “It’s Time For Our Justice System To Embrace Artificial Intelligence” Brookings, 20 July, 2017; https://www.brookings.edu/blog/techtank/2017/07/20/its-time-for-our-justice-system-to-embrace-artificial-intelligence/

[3] R. Rieland, “Artificial Intelligence Is Now Used to Predict Crime. But Is It Biased?” Smithsonian Magazine, 5 March, 2018; https://www.smithsonianmag.com/innovation/artificial-intelligence-is-now-used-predict-crime-is-it-biased-180968337/

[4] J. Angwin, J. Larson, S. Mattu, L. Kirchner, “Machine bias: There’s software used across the country to predict future criminals. And it’s biased against blacks,” ProPublica, 23 May 2016; www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

[5] E. Yong, “A popular algorithm is no better at predicting crimes than random people.” The Atlantic, 17 January, 2018; https://www.theatlantic.com/technology/archive/2018/01/equivant-compas-algorithm/550646/

One caution I have with respect to tools such as the “risk score” is that they fall victim to a basic flaw inherent in most machine learning algorithms: identifying correlation is not the same as identifying causation. One of the reasons that our justice system experiences bias is that it is hard for humans to understand the root causes behind criminal activity. For example, while “on average” lower income groups tend to commit crimes at higher rates (thus a high correlation between income and crime rate), there are likely underlying factors in individuals’ lives, such as addiction, that directly motivate theft. If addiction is actually a more direct cause of crime, then looking at income levels to predict someone’s chance of re-offense is flawed, because high income populations also experience addiction. This is a grossly over-simplified example, but the point remains that with the complexity of human decision-making, there is a high risk that machine learning will spot historical correlations that are not accurate predictors of future behavior, and thus introduce even more bias into the justice system.

This reminds me of the movie Minority Report, in which people were arrested based on analytics from machine learning before they actually committed the crimes. I am curious to see the statistical breakdown of the algorithm’s outputs for various other groupings, such as socio-economic status, gender, and age, to attempt to triangulate the root of the algorithm’s malfunction. I am also fairly floored that this was actually employed considering how low its accuracy is. A testing period to collect data and determine COMPAS’s efficacy before it went live seems like a much safer way to go about things.