AI helps data centers stay cool and keeps energy bills low

A data center is a facility that centralizes computer servers, storage and networking systems to serve an organization's IT needs. As the world’s economy shifts further into the digital era, the demand for data centers keeps accelerating. In 2006, data centers in the U.S. consumed about 61 billion kilowatt-hours (kWh), roughly 1.5% of total U.S. electricity consumption. The energy used by data centers more than doubled between 2000 and 2006 [1]. It was predicted that, if the energy efficiency of data centers did not improve, their energy consumption would exceed 100 billion kWh by 2011. That would have had a significant impact on the power grid and power generation, and would have added millions of tons of greenhouse gas emissions [2]. The need for more efficient data centers is urgent, and it calls for innovative solutions.

Fortunately, during the past decade the major data center operators worked together to improve efficiency, and in 2014 data centers in the U.S. consumed an estimated 70 billion kWh, a growth rate much lower than originally forecast. Some of the successful strategies and best practices include:

- Consolidate smaller server operations into hyperscale data centers

- Adopt new energy-efficient servers and components

- Apply best cooling practices: liquid cooling, hot air containment, extensive monitoring and free cooling during the cold season

As most data centers adopted these best practices, the improvements in energy efficiency started to show diminishing returns, and energy use is expected to increase slightly in the near future, with growth of 4% from 2014 to 2020. However, according to the Uptime Institute’s 2014 Data Center Survey [3], the global average Power Usage Effectiveness (PUE) of the largest data centers is around 1.7, which means that for every 1 kWh of energy used by IT equipment, another 0.7 kWh (700 Wh) is consumed as facility overhead.

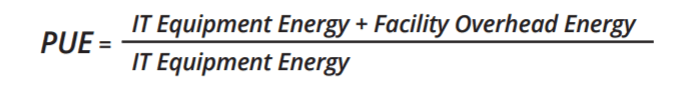

Definition of Power Usage Effectiveness (PUE)
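As a rough illustration of the definition, PUE is the total energy a facility draws divided by the energy delivered to its IT equipment, so the overhead per kWh of IT load is simply PUE minus 1. The sketch below is a minimal Python example; the function names and the sample readings are hypothetical and chosen only to reproduce the 1.7 and 1.12 figures mentioned in this post.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh_per_it_kwh(pue_value: float) -> float:
    """Energy spent on cooling, power distribution, etc. per 1 kWh of IT load."""
    return pue_value - 1.0


# Hypothetical reading: 1.7 kWh drawn by the facility for 1.0 kWh of IT load.
industry_pue = pue(total_facility_kwh=1.7, it_equipment_kwh=1.0)
industry_overhead = overhead_kwh_per_it_kwh(industry_pue)

# Google's reported fleet-wide PUE, as quoted in this post.
google_overhead = overhead_kwh_per_it_kwh(1.12)

print(f"Industry average: PUE {industry_pue:.2f}, overhead {industry_overhead:.2f} kWh per IT kWh")
print(f"Google fleet:     PUE 1.12, overhead {google_overhead:.2f} kWh per IT kWh")
```

A PUE of exactly 1.0 would mean every kWh goes to IT equipment, which is why a lower value is better.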

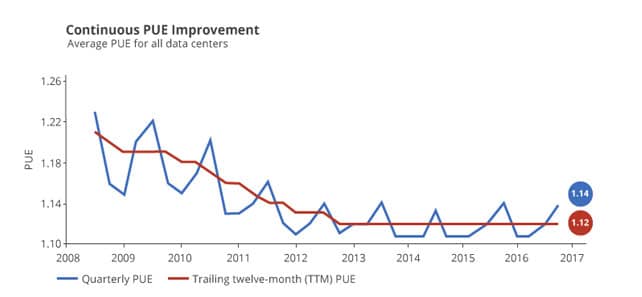

Google, the company with the largest data centers in the world, has always focused on improving energy efficiency while sustaining the explosive growth of the Internet. Since it started reporting PUE publicly in 2008, the average PUE across its whole fleet has continued to decrease and has stabilized around 1.12.

Historical PUE values at Google [4]

As modern data centers become more complicated, with a wide variety of mechanical and electrical equipment, further reducing PUE has proven extremely difficult for the following reasons:

- Traditional formula-based engineering cannot capture the complex, nonlinear interactions among the various pieces of equipment and their settings.

- Although thousands of sensors installed in the data centers monitor millions of data points every day, analyzing such data is beyond simple intuition and heuristics.

- Rapid changes in internal requirements (usage or traffic spikes) and external conditions (weather changes) make quick adaptation even more challenging for human operators.

- Because each data center has a different design and environment, a model that works for one data center does not transfer to another.

Hence, a general intelligence framework is necessary to understand the data center’s interactions and to provide optimal management of its various equipment. Building on the success of DeepMind’s AI algorithms, Google trained a system of neural networks on different operating scenarios and parameters within its data centers. About 120 variables in the data centers (such as fan and pump speeds, temperatures and system efficiency) are fed into the neural networks so that the AI can learn the dynamics of the data centers and work out the most efficient methods of cooling [5].
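To make the idea of learning PUE from sensor readings more concrete, here is a minimal sketch of a small neural network trained by gradient descent. It is not Google's or DeepMind's model: the data is synthetic, the relationship between inputs and "PUE" is invented, only 5 inputs are used instead of roughly 120, and every name (`TinyMLP`, `make_synthetic_data`) is hypothetical. It only illustrates how a network can fit a nonlinear mapping from operating variables to an efficiency figure.

```python
import math
import random


def make_synthetic_data(n_samples, n_features, rng):
    """Hypothetical sensor snapshots scaled to [0, 1] with an invented nonlinear 'PUE'."""
    data = []
    for _ in range(n_samples):
        x = [rng.random() for _ in range(n_features)]
        # Made-up relationship, for illustration only (not real data center physics).
        target = 1.1 + 0.3 * x[0] * x[1] + 0.2 * (x[2] - 0.5) ** 2
        data.append((x, target))
    return data


class TinyMLP:
    """One hidden tanh layer and a linear output, trained with plain SGD."""

    def __init__(self, n_in, n_hidden, rng):
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        hidden = [
            math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(self.w1, self.b1)
        ]
        out = sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
        return hidden, out

    def predict(self, x):
        return self.forward(x)[1]

    def train_step(self, x, y, lr):
        """One SGD step on the squared-error loss 0.5 * (out - y)^2."""
        hidden, out = self.forward(x)
        err = out - y
        for j, h in enumerate(hidden):
            # Gradient w.r.t. the hidden pre-activation, using w2[j] before it is updated.
            grad_pre = err * self.w2[j] * (1.0 - h * h)
            self.w2[j] -= lr * err * h
            self.b1[j] -= lr * grad_pre
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * grad_pre * xi
        self.b2 -= lr * err


def mean_squared_error(model, data):
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable
data = make_synthetic_data(n_samples=200, n_features=5, rng=rng)
model = TinyMLP(n_in=5, n_hidden=8, rng=rng)

mse_before = mean_squared_error(model, data)
for _ in range(50):  # 50 passes over the synthetic data
    for x, y in data:
        model.train_step(x, y, lr=0.05)
mse_after = mean_squared_error(model, data)

print(f"MSE before training: {mse_before:.4f}")
print(f"MSE after training:  {mse_after:.4f}")
```

In a real deployment, a model of this kind would presumably be trained on historical sensor logs, and its predictions used to compare candidate equipment settings before recommending one.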

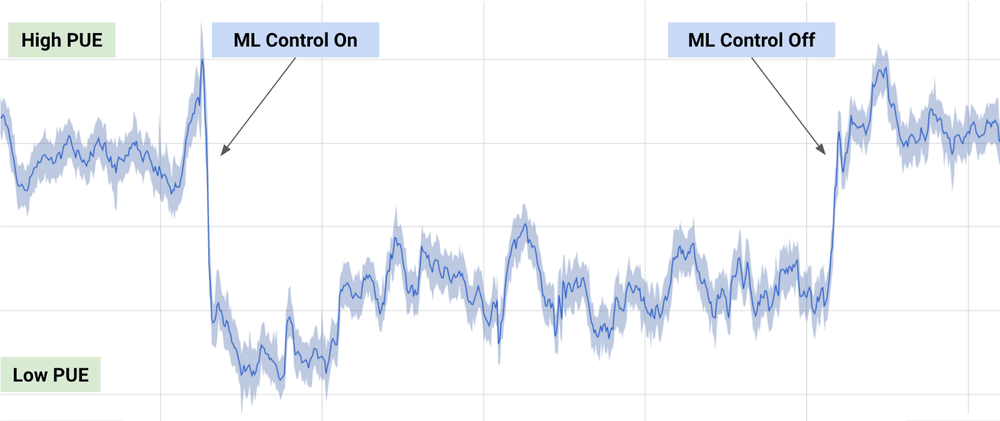

PUE comparison during a typical day of testing, with AI recommendations turned on and off [6]

The results of the AI system are impressive. It reduced the amount of energy used for cooling by 40%, which equates to a 15% reduction in overall PUE overhead.

The potential impact of such an intelligent system on data centers is enormous. Since most non-Google data centers have a significantly higher PUE overhead, the room for improvement is even bigger. If adopting such an intelligent system reduced the average PUE from 1.7 to 1.6, a 15% cut in overhead similar to the scenario above, about 4 billion kWh of energy could be saved each year, eliminating about 2.8 million tons of greenhouse gas emissions. If all non-Google data centers reached Google’s PUE of 1.12, about 24 billion kWh of energy, or 16.8 million tons of greenhouse gas emissions, could be eliminated.
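The arithmetic behind these estimates can be reproduced with a short sketch. It rests on several assumptions that are not spelled out in the post: the roughly 70 billion kWh that U.S. data centers consumed in 2014 is treated as running at the average PUE of 1.7, the IT load is held constant, and the emission factor of 0.0007 metric tons per kWh is backed out of the post's own figures (2.8 million tons for 4 billion kWh) rather than taken from an official source. All names are hypothetical.

```python
US_DATA_CENTER_KWH_2014 = 70e9   # estimated U.S. consumption in 2014 [1]
BASELINE_PUE = 1.7               # industry average from the Uptime survey [3]
TONS_CO2E_PER_KWH = 0.0007       # assumption implied by 2.8 Mt per 4 billion kWh


def annual_savings_kwh(total_kwh: float, current_pue: float, target_pue: float) -> float:
    """Energy saved if the same IT load ran at target_pue instead of current_pue."""
    it_kwh = total_kwh / current_pue          # energy actually used by IT equipment
    return total_kwh - it_kwh * target_pue    # total now minus total at the new PUE


# A 15% cut in overhead (PUE - 1) takes 1.7 to about 1.6.
pue_after_15pct_cut = 1.0 + (BASELINE_PUE - 1.0) * (1.0 - 0.15)

savings_small = annual_savings_kwh(US_DATA_CENTER_KWH_2014, BASELINE_PUE, 1.6)
savings_large = annual_savings_kwh(US_DATA_CENTER_KWH_2014, BASELINE_PUE, 1.12)

for label, kwh in [("PUE 1.7 -> 1.6 ", savings_small), ("PUE 1.7 -> 1.12", savings_large)]:
    tons = kwh * TONS_CO2E_PER_KWH
    print(f"{label}: {kwh / 1e9:.1f} billion kWh, {tons / 1e6:.1f} million tons CO2e")
```

Because the whole U.S. total is used as the baseline, the result slightly overstates the savings available to non-Google facilities alone.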

Conclusion:

While many companies have made big strides in increasing data center efficiency, there are still significant opportunities for further improvement. Google's recent use of advanced technology like DeepMind’s to optimize data center operations showed impressive results in reducing energy consumption and demonstrated the great potential of AI to help humans optimize complex systems and address the urgent challenge of climate change.


References:

[1] United States Data Center Energy Usage Report, Ernest Orlando Lawrence Berkeley National Laboratory

https://eta.lbl.gov/publications/united-states-data-center-energy-usag

[2] Based on the estimated increase of 30 billion kWh and Greenhouse Gas Equivalencies Calculator

https://www.epa.gov/energy/greenhouse-gas-equivalencies-calculator

[3] 2014 Data Center Industry Survey, Uptime Institute

https://journal.uptimeinstitute.com/2014-data-center-industry-survey/

[4] Efficiency: How we do it, Google

https://www.google.com/about/datacenters/efficiency/internal/

[5] Machine Learning Applications for Data Center Optimization, Jim Gao, Google

http://research.google.com/pubs/pub42542.html

[6] DeepMind AI Reduces Google Data Centre Cooling Bill by 40%, Google

https://deepmind.com/blog/deepmind-ai-reduces-google-data-centre-cooling-bill-40/

Interesting article. It raises the question of whether AI control of climate systems could be applied to other industries to reduce their energy usage. For example, could an AI system control the air conditioning and light level in a large office building more precisely than human operators, leading to an increase in occupant comfort while simultaneously decreasing the building’s energy consumption? While the impact of such a system on total energy consumption may be low, if the technology became cheap enough to implement, it could have a huge impact when aggregated across all large buildings, and that is just one application.

Current cooling systems are either time-based or thermostat rule-based. An ideal AI system could aggregate all available information, such as how many people are present in the room, each person’s comfortable temperature and what each is wearing today, and when people are expected to arrive and leave. New buildings will have most of the sensors and controls needed for this kind of application; it is just that the software isn’t yet smart enough to make such calls. It is coming really fast, though.

Great post. I would be interested to see how smaller companies with smaller server requirements are coping with their energy efficiency. I would guess that many firms don’t think about it at all. That said, I wonder if there are ways to pool together smaller users into larger, more efficient data centers. This could free up the office space requirements for smaller companies and allow pooled data centers to use the same AI to keep overhead low in the same way as Google.

This also begs the question of whether or not it is Google’s responsibility to share their research and AI technology with other firms to reduce energy consumption across the board. It may be a competitive advantage at the moment, but Google could potentially have a greater impact if they worked to decrease data center emissions worldwide. Perhaps they can find a way to find shared value with their work.

Consolidating individual, smaller server houses into big data centers is actually what happened. This change improved efficiency so much that the growth of total power consumption stayed limited while capacity increased significantly during the last decade.

This also aligns very well with the cloud computing trend, in which more and more companies are getting rid of their own servers and remotely using cloud servers offered by Amazon or Google. If more work and services run in the cloud, we can expect efficiency to keep improving. I think that for either Amazon or Google, keeping the data center energy bill low will continue to be a key competitive advantage, so that for other companies the cost of using the cloud will be much lower than maintaining their own servers.

Thinking about utilizing AI in data center power usage brings many smart-grid applications to mind. Are there ways to increase sharing of information between data centers and future smart-grids to utilize load balancing and demand charge minimization as well? This would involve AI optimization both on the load as well as cooling ends of data center management. Moreover, as our AI becomes more intelligent, I anticipate we will begin threading data center back up and bridge choices through optimization algorithms as well, further complicating power usage management at the individual center level.

One more question I’d ask is: In the face of other pressures on data centers (bandwidth and security, to name a few), how will we incentivize centers to prioritize power management over other drivers? If solar electricity production efficiency takes off in the next few years, aided by AI no doubt, will the power management equation even be relevant? Thanks for writing!

Right now the energy bill is the single biggest cost for a data center. I think the cost is even higher than the yearly hardware depreciation cost. Even if we move to other ‘free’ energy sources such as solar, the actual cost of generating electricity will still be a major expense of running a data center.

I love your idea of grid-level load balancing and global optimization. It is not there yet, but it is heading in that direction. We need to have all these facilities connected and sharing their data all the time.

Really interesting post. Couple of thoughts:

1) is there a way to apply the same algorithms and models that utilities use for consumer power demand to data centers? For example, adjusting operations intelligently based on time of day (e.g., evenings likely to be busier than early mornings)? If there was a way to “turn off” parts of the DC during low demand periods, that could help reduce energy cost.

2) Overall, the growth of data means that proliferation of data centers will continue. Aside from sheer electric consumption, the explosion in data that is being tracked and stored will also mean additional land used for data centers, more materials used to construct servers / racks, and more manufacturing activities associated with DCs. For a DC to be sustainable, we must also consider some of the downstream issues arising from new construction and ensure that those downstream processes are sustainable as well.

They are actually doing part 1 right now. Before they adopted AI in the data center, they had already built rules that turn off parts of the DC during low-traffic times. What differentiates AI from human rules is that AI can handle very complicated, interrelated, nonlinear system relationships. Basically, for any intuitive rule or heuristic, like turning a server off when it is not in use, humans are already doing a perfect job. It is the other jobs that AI is best suited for.

Growing land use by DCs is an important point to mention! Due to the limits of Moore’s law, the shrinking of transistor size has slowed down, so the density of processors and storage is improving more slowly. The demand for data grows exponentially while capacity is now growing more or less linearly. This could imply some serious problems down the road if not addressed.

Very interesting application that Google has developed. It fits well into our discussion of corporate responsibility (being such a high energy user, does Google have a social obligation to continue to reduce its energy consumption?) and company profitability (this clearly represents a massive cost saving and operational improvement not only for Google, but also for the industry as a whole). I wonder how their acquisition of Nest in 2014 fits into this strategy at the consumer level. Nest is similar in that it learns your home heating/cooling preferences and works behind the scenes to effectively monitor and maintain your home temperature based on when you will be in the house. Could they expand Nest to look at not only temperature, but also overall energy usage at the consumer level?

I really like that you mentioned Nest. I think it has always been Nest's goal to have everything in a house connected and interacting coherently. Unfortunately, Nest as a business wasn’t doing as well as hoped. We can chat more about Nest’s recent challenges.

Very well written post Peter! I appreciate how you broke down PUE into simple to understand terms.

I’m guessing that the cyclical nature of Google’s PUE is to do with the seasons each year, with peak PUE during the summer. However, it would be interesting to see the cost-benefit analysis of locating the data centers in colder climates where summers aren’t as hot. A quick search shows that most of Google’s data centers are located in varying climates (https://www.google.com/about/datacenters/gallery/#/locations). This is probably to do with density of population, but I would like to see the PUE of each of these locations.

Taking the technology further, this can be applied to telecom towers. I have seen that telecom towers take a lot of harsh climate changes, and cause frequent shut-downs and hence disruptions to customers. Having such intelligent technology built into each tower would alleviate some of this pressure.

Google did list the PUE for different locations here: https://www.google.com/about/datacenters/efficiency/internal/

You will find that the PUE of locations in tropical areas is generally higher than that of locations in cold areas. Google did mention that it uses free cooling in its DCs.

Very interesting post and creative topic. I am curious if the energy efficiency AI algorithm comes with a corresponding performance cost. Without knowing the details, it seems like there could be an unfortunate consequence where optimizing data centers for energy efficiency may not lead to the same resource allocations as optimization for speed, redundancy, or other key parameters. It would be interesting to hear how Google thinks about this trade-off, and how they choose to internally prioritize energy efficiency vs. raw performance.

Another question that comes to mind for me is how global warming impacts the performance of existing data centers. I know that many data center operators choose to build their facilities in colder locations in order to take advantage of the climate’s natural cooling effects. However, with global warming, data center efficiency may be hurt as a result, and centers may have to move to even colder locations.

Yes, actually there is another approach to cooling. I know of one startup that is trying to use families' basements as distributed server rooms at no charge. Basically, it works in cold countries where the winter is long and families are used to spending significant money on heating every year. In exchange for the basement space, the servers in the basement run at full speed during winter to generate enough heat for the whole house.

This is an incredibly cool article, Peter! Not least because my husband is a machine learning engineer, I really enjoy hearing about clever applications of these technologies. What surprises me is that Google isn’t using its data center operations as a proof-of-concept to monetize this technology for other industries. What Nest has done for homes DeepMind could do for firm sustainability operations more broadly! Thanks for the great read!

I am glad you like it. This is an innovative way of using AI, but underneath it is a perfect case for AI to tackle: there are too many parameters and complex nonlinear interactions among them, so AI is better than humans at finding the answer. I can see how this tech can be applied to many other areas too.

A great example of how data on each component of a complex system can be aggregated and used to improve the operations of that system. We can apply these same principles to a host of other situations to great benefit; the main barrier is the suite of sensors necessary to do so. This is essentially why IoT is receiving so much attention lately at large technology companies.

I think it is neat that they can use AI to reduce the power usage. Computers may continue to help us resolve the climate change issues that we can’t quite wrap our brains around.

At first I struggled to understand the graph showing a decrease in effectiveness, because I would have thought that we would want to improve our effectiveness. It was much clearer when I reviewed the equation and saw its dependence on the industry IT contribution. The denominator was more like the required amount of power needed so to improve that ratio you would want to reduce the numerator.

Great post, and I wonder whether the technology I posted about could be of use in such a situation.

A thought: I wonder about the emissions impact as we see an expansion in the use of data centers in economies with much higher temperatures (i.e. emerging markets vs developed markets). My question, however, is what will be the impact on the data center industry in the UK as a result of the tax breaks brought in to encourage more climate-friendly centers, and where else could such tax systems be applied?