AI in People Analytics: Rhetoric and Snake Oil

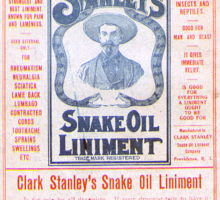

“AI” has become a buzzword in people analytics. To be sure, AI is indeed a powerful technology. Yet, as with many organizational practices that ascend to buzzword status, its promise has exceeded its potential. Sometimes it’s even sold as a mythical snake oil.

The article I read was a mini-case about using AI at Microsoft to improve employee engagement. The data analysts noticed that a large group of employees was dissatisfied with their jobs. To find the root of the problem, they analyzed email and meeting data. The data showed that employees were spending 27 hours a week in meetings and long hours on email. The company changed some policies on email and meetings, and the problem was apparently resolved (Romeo 2020).

This does seem like a successful case of using AI to drive real change in an organization. But wait. Does it really take “AI”, troves of data, and a team of data analysts to figure this out? Shouldn’t any manager on the ground realize that employees are wasting their time on 27 hours of meetings per week? How was it that whichever manager had the power to change meeting and email policies did not realize the daily frustration of hundreds of employees? I can’t help but wonder if there was more to the story. Perhaps there were much easier and less costly ways to come to the same realization. Or perhaps the true insight came from introspection or a lunch conversation, which needed to be validated by the data analysis for the political capital to actually do something about it. Yet the way it was presented was a clean, black-and-white win for AI.

This is not an isolated case. I’ve seen dozens of similar cases that boldly proclaim wins for AI. And again, there are some powerful use cases. But in my mind it’s a classic example of what organizational theorists Meyer and Rowan termed the “decoupling” of organizational practices (Meyer and Rowan 1977). In a nutshell, managers are adopting AI (in some cases) because everyone else is, and they have incentives to share success cases to look good or to justify their huge investment. This rhetoric reverberates within and between organizations and makes it seem like AI is fundamentally changing all aspects of HR decisions. In reality, there are some useful applications, but nothing like what we imagine. This process may be especially salient for technology companies like Microsoft, which are actually selling AI and other analytics solutions.

On a related note, I’m reminded of the Quantified Communications case we discussed in class. I was pleasantly surprised by some aspects of the technology. Yet I am naturally skeptical of companies that are riding the AI hype wave. According to a handful of computer science researchers at Cornell and Princeton, startups that use algorithmic analysis on videos (like Quantified Communications) are not much better than random number generators (Narayanan 2018). These researchers, who have deep knowledge of AI, claim that AI can be very powerful for certain tasks like content identification in images and text. But when it comes to predicting social outcomes (like the success of new hires), AI methods are “fundamentally dubious”. The companies trying to sell AI for these complex tasks are essentially taking advantage of the rhetoric to sell “snake oil”: a mystical solution to the organization’s persistent ailments.

I am not claiming that it is impossible for AI to be used effectively in people analytics. But I believe that in general, the current claims outstrip what is possible, or useful, for AI.

Meyer, John W., and Brian Rowan. 1977. “Institutionalized Organizations: Formal Structure as Myth and Ceremony”. American Journal of Sociology 83 (2): 340-363.

Narayanan. 2018. “How to Recognize AI Snake Oil”.

Romeo, Jim. 2020. “Using AI And Data To Improve Employee Engagement”. SHRM. https://www.shrm.org/resourcesandtools/hr-topics/technology/pages/using-ai-data-improve-employee-engagement.aspx.

THANK YOU! As I’ve mentioned in class, calling everything from a linear regression to quick analytics of survey data “AI” gets quite old after a while. Just using the term AI instead of machine learning plays into a weird sci-fi / futuristic trope that the tech industry has worn out. It seems that more legitimate VCs are catching on to the snake oil and using technical advisors to dive deeper into the stack to see what the core IP really entails. There are definitely areas that can use ML to significant benefit (e.g., medical imaging), but most people aren’t doing anything close to “AI”.