Putting AI bots to the test: Test.ai and the future of app testing

“It’s easy to build an app. It’s hard to test it.” – Liu, co-founder of test.ai[1]

With 70,000+ apps already tested, Test.ai is on a mission to “improve the quality of software apps by making app development easier through the power of AI and automation.”

The Evolution of Testing Apps

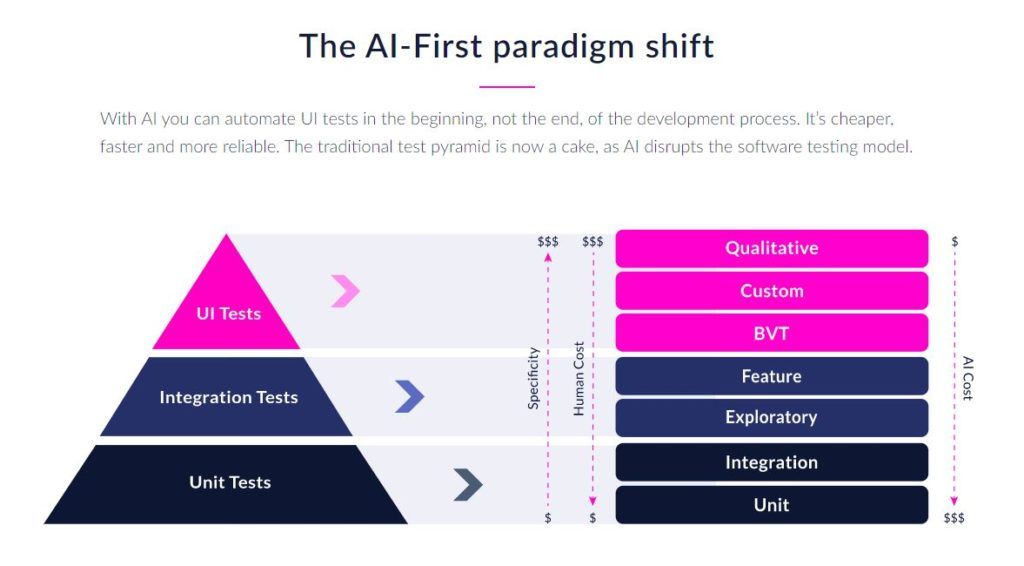

Test.ai (formerly and legally Appdiff) “provides an AI-powered test automation platform to help organizations deliver quality mobile app experiences at scale.”[2] Founded less than five years ago by software engineers from Google and Microsoft, the company uses AI bots to test mobile apps.

A couple of decades ago, testing software applications was a manual process performed by testing engineers.[3] The engineers developed a test plan based on business requirements, created test cases, and executed the tests. Later, automation test engineers automated parts of the testing process by writing code that replicated the actions a user might take, thereby reducing human effort. Even so, testing has historically been a bottleneck for app development teams and one of the most expensive parts of building an app. Each time a change is made to an app’s user interface (UI), for example relabeling an object such as a button, an engineer might have to rewrite an entire testing framework.
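To make the relabeling problem concrete, here is a minimal sketch of a scripted test step that breaks when a button is renamed. The screen model (a list of dictionaries), the function names, and the button labels are all hypothetical and chosen only for illustration; they are not taken from any real testing framework.

```python
# Hypothetical model of a screen: each element is a dict with a role and a label.
def find_by_label(screen, label):
    """Return the first element whose label matches exactly, or None."""
    for element in screen:
        if element["label"] == label:
            return element
    return None


def scripted_checkout_test(screen):
    """A scripted test step that hard-codes the button label it expects."""
    return find_by_label(screen, "Add to Cart") is not None


screen_v1 = [{"role": "button", "label": "Add to Cart"}]
screen_v2 = [{"role": "button", "label": "Add to Bag"}]  # same button, relabeled

result_v1 = scripted_checkout_test(screen_v1)  # the test finds the button
result_v2 = scripted_checkout_test(screen_v2)  # the test no longer finds it
```

The app still works in the second version, yet the test reports a failure because it was tied to the exact label. Multiply that by every locator in a large suite and the maintenance cost described above becomes clear.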

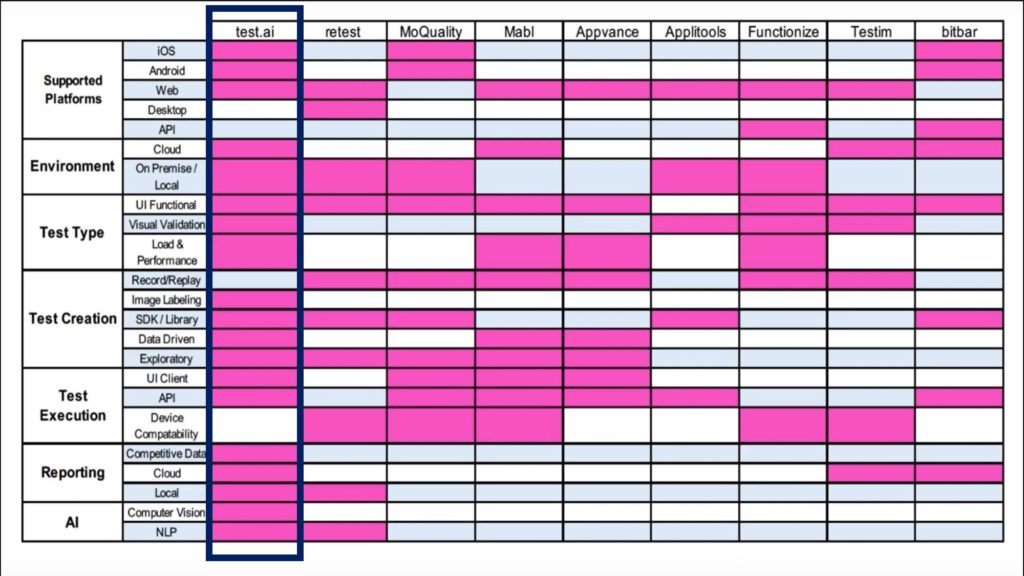

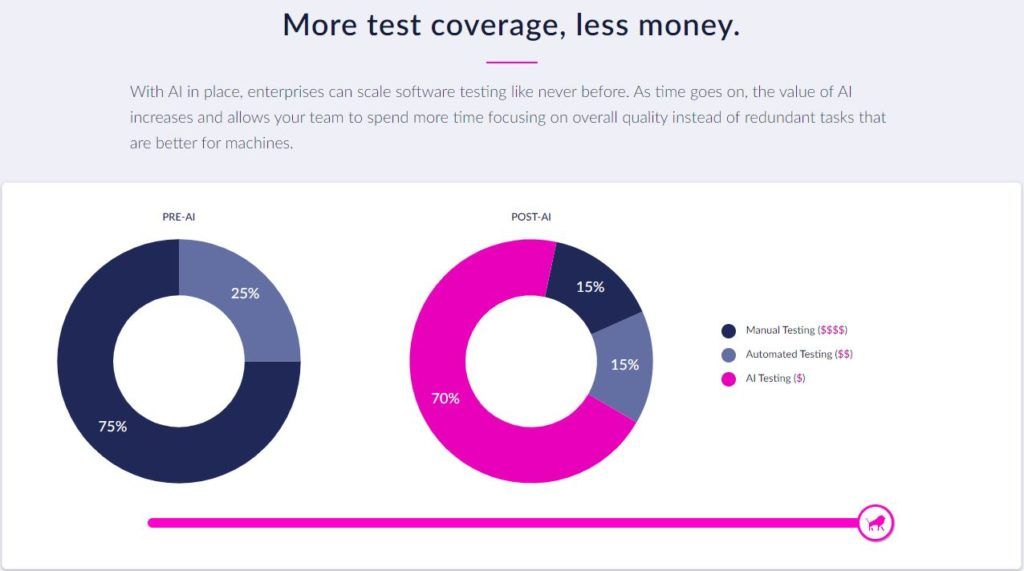

Today, testing is evolving even further with AI bots that take on the heavy lifting. In the case of the test.ai platform, bots use computer vision with natural language processing to see and understand everything on the screen and test the app’s workflows as a human would.[4] However, because the bots can learn and understand the entirety of the app at superhuman speed, they can run tests across multiple environments or operating systems, devices, and screen sizes, all simultaneously and continuously. Furthermore, machine learning allows the bots to grow smarter with more iterations and discover things humans may not have thought of. Because the bots look at the visual layer of the app, tests can be written without ever touching the app’s code base. Testing that once took months or even years, and that even now can take days, can happen in hours or minutes with fewer resources. The key value proposition of test.ai is that it “provides performance and UI analysis of apps without any upfront setup, code, or overhead…and works in minutes.”[5] Additionally, there is a benefit to running the same test on multiple apps. One company’s app can be benchmarked against competitors’ apps, showing objectively how it performs on the metrics that drive user experience and opening the door to evaluating and improving quality in new ways.
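The idea of recognizing an element by what it does rather than by its exact label, and then repeating the same check across many configurations, can be sketched roughly as follows. This is a toy illustration only: the keyword table is a hypothetical stand-in for a trained computer-vision and language model, and none of the names below come from test.ai’s actual platform.

```python
# Hypothetical keyword table standing in for a trained model's notion of intent.
INTENT_KEYWORDS = {
    "add_to_cart": {"cart", "bag", "basket"},
    "search": {"search", "find"},
}


def classify(element):
    """Guess an element's intent from the words in its visible label."""
    words = set(element["label"].lower().split())
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "unknown"


def find_by_intent(screen, intent):
    """Return the first element classified with the given intent, or None."""
    for element in screen:
        if classify(element) == intent:
            return element
    return None


def run_matrix(screens_by_config, intent):
    """Run the same check on every (OS, device) configuration."""
    return {
        config: find_by_intent(screen, intent) is not None
        for config, screen in screens_by_config.items()
    }


# Hypothetical screens captured on three configurations of the same app.
screens = {
    ("android", "phone"): [{"label": "Add to Cart"}],
    ("ios", "tablet"): [{"label": "Add to Bag"}],
    ("ios", "phone"): [{"label": "Checkout"}],  # the button is missing here
}

report = run_matrix(screens, "add_to_cart")
```

In this sketch the relabeled button on the tablet still passes, while the phone build where the button is absent is flagged. A real system would have to learn these associations from screenshots rather than rely on a hand-written table.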

Test.ai bots running tests identify issues with red boxes.

The founders sought to solve a fundamental problem faced by organizations with digital apps by making testing faster and simpler. Apps are growing increasingly complex with new features and integrations, and speed-to-market pressure is increasing as consumers demand flawless user experiences. As a result, organizations run into a coverage gap because they don’t have enough time and labor to test everything through traditional processes.[4] The business case for the test.ai platform and the value it creates are clear: organizations deploy resources more efficiently and effectively, improve software quality, decrease time to market, and free their teams to spend less time on routine quality checks and more on interesting work.

A game-changing opportunity, but with challenges and the need to change itself

It is worth noting that the tech itself is not new. It has been used for years by telecom companies and by device manufacturers with large app stores, such as Google and Apple. The opportunity for test.ai is to help organizations improve their software quality at scale by giving them access to such technology. This opportunity poses several challenges. First, without prior exposure to the AI technology, potential enterprise customers have been hesitant to embrace it. As a pioneer in AI-powered testing technology, test.ai must first educate organizations. Moreover, it must help customers identify pain points and understand how AI can be a solution. This surfaces a basic and often-seen challenge faced by companies in the AI space at this stage: shifting the sole focus of the founding team from product and technology to customer-oriented solutions. In its first few years, test.ai has been focused on the AI technology and less so on marketing or selling a software solution. It must now orient itself towards the concrete commercial applications of the product.

Having picked up $11M last year in its Series A round, test.ai would do well to hire a leader who can bring marketing, sales, and business development expertise to help the company create a more customer-oriented culture, build out its marketing and sales organization, and grow the customer base.[6] This is essential for a couple of key reasons: 1) for the bots to learn different kinds of applications, they need more test cases to train on, and to get more test cases, they need more customers; and 2) VC investors are exercising more scrutiny and demanding concrete data and proof that the product is helpful to customers.

Another way test.ai could increase its marketing capabilities is through partnerships. This is an avenue that it has already begun to pursue through a Partner Program that aims to connect with technology creators, system integrators, and managed service providers. One such partnership is with PinkLion, a full-service AI vendor that provides an AI-first development approach and works with companies to “build a custom AI integration layer that ties people, processes, and AI together.”[7]

Test.ai also needs to solidify its target market, which was initially enterprises with existing test engineering teams that are actively seeking solutions.[5] This has broadly included any industry. Test.ai must ask itself whether it should continue in this way or whether it might also consider approaching companies that outsource testing and require a full solution. Test.ai could expand its product offering of quality assurance and testing in a SaaS model. Currently, customers of test.ai pay an annual subscription fee for each app tested on the platform. While pricing information isn’t publicly available (and pricing is likely to be a challenge in its own right), the founder has made clear that there are enormous cost savings to be gained by companies using the platform. The suggestion to expand the product offering leads to another recommendation, which is to hash out a solid product pipeline.

Test.ai has a competitive edge from being the leader – its AI bots are adding to their years of learning as I write this – but it must make some necessary changes in order to deliver on its goal to automate testing of the world’s apps.

[1] Tomio Geron, “Appdiff Bags $2.5M for Bots That Test Apps,” Wall Street Journal (Online), September 14, 2016, ABI/INFORM via ProQuest, accessed December 2019.

[2] “Test.ai Overview,” Crunchbase, https://www.crunchbase.com/organization/testai#section-overview, accessed December 2019.

[3] Execute Automation, “Automating Web Applications with Artificial Intelligence and understand how it works!,” YouTube, uploaded May 19, 2018, https://www.youtube.com/watch?v=EcTmKXdYvtM, accessed December 2019.

[4] SoftwareTestPro, “Re-shaping the Test Pyramid – Jennifer Bonine,” YouTube, uploaded August 30, 2019, https://www.youtube.com/watch?v=LHyBiR7aR-c, accessed December 2019.

[5] Clare McGrane, “Appdiff raises $2.5M for AI-powered automated app testing platform,” GeekWire, September 14, 2016, https://www.geekwire.com/2016/appdiff-raises-2-5m-ai-powered-automated-app-testing-platform/, accessed December 2019.

[6] Lucas Matney, “Test.ai nabs $11M Series A led by Google to put bots to work testing apps,” TechCrunch, July 31, 2018, https://techcrunch.com/2018/07/31/test-ai-nabs-11m-series-a-led-by-google-to-put-bots-to-work-testing-apps/, accessed December 2019.

[7] Test.ai, “test.ai Partner Program,” https://www.test.ai/partner/pinklion, accessed December 2019.

Great article – thanks for sharing! Like several cases we’ve seen in class, we have a company that is scaling an AI service (QA-testing) that other firms would purchase as a subscription. I agree that one of the major uphill battles the company faces is in getting customer buy-in. It will be challenging to build a scalable service when each application is unique (how can the company really test for whether a feature is not working as expected vs. a bad design decision) and companies are still skeptical about the value creation provided by the firm (which will likely require demoing to customers).

Love the post! It got me thinking about one of my close friends who started his career in the QA portion of a giant software company. These traditionally are career tracks, and I wonder a) how receptive software engineers like him would be to their workflow potentially being automated away, and b) how receptive software developers in the next part of the workflow would be to the prospect of this technology evolving, getting smarter, and potentially automating parts of their process as well. Is this just an inevitable change that software developers will have to accept and the organization will embrace, or will Test.AI face headwinds in getting adoption due to potentially misaligned incentives?