Forest full of trees

Deciding what to focus on when developing an AI can be challenging.

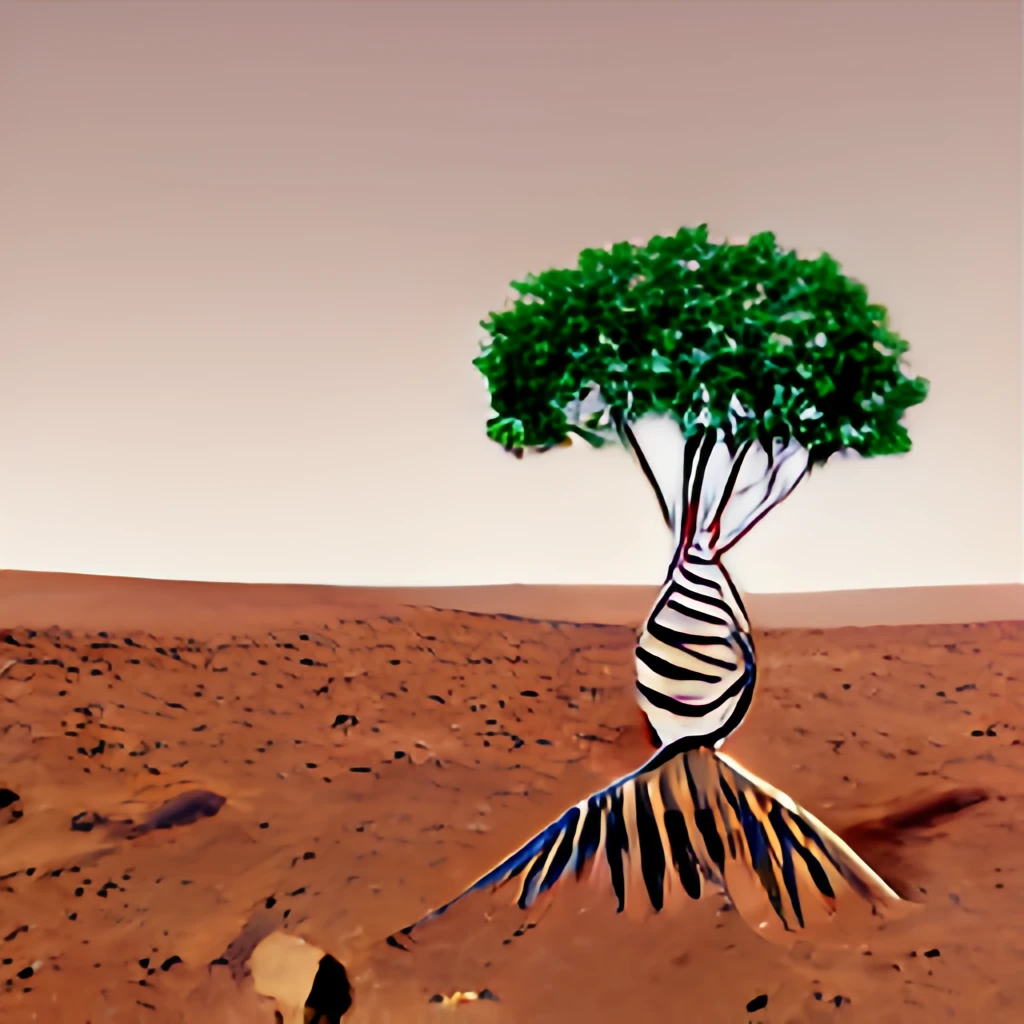

Text → AI → Picture. It seems easy enough. But a closer look at this tool quickly makes clear that the pictures do not really match the input text yet.

The input text for the feature picture? It was “A Line”, not “A Cross”. Putting two lines over each other at a certain angle already changes the meaning of that picture for humans; for an AI it might still just be two lines. Training an algorithm for meanings and interpretations, rather than just facts and representations of simple commands, seems to be an obstacle.

The AI's tree pictures are fundamentally different. I would argue that one needs to know what the text is to understand the picture. Going forward, I wonder whether it might make sense to narrow the focus to one category for the AI to learn first and then scale from there.

Very interesting post, Kate! It clearly brings out the limitations of AI text-to-image converters in representing context as well as humans do. In fact, that’s probably the reason why the latest versions of Dall-E 2 nudge users to prompt the AI in a structured form that includes (a) what object, (b) with what features, (c) in what context/background, and (d) what form of art is expected, so that it can build pictures closer to user expectations. Thanks once again, Kate, for this insightful post!

Thanks Kate! I think you’re completely right when you point out that training an algorithm for interpretations instead of just facts is where there tends to be difficulty. I also really liked the feature picture you chose because it shows the nuances of the AI program.

Hi Kate, thank you for sharing these generated images. You suggested narrowing the focus of AI learning, and noted that the difficulty lies in interpretation and meaning rather than just facts and the representation of simple commands. I think learning to interpret like a real person can be difficult because different people may interpret the same thing slightly differently, especially when it comes to visualization.