AI-as-a-service with Scale

Scale combines human intelligence and platform algorithms/services to enable companies to build better AI. It has created a platform that connects companies and contractors who can label data.

In today’s world, many firms are able to collect and digitize information. This explosion of data offers many exciting new use cases that can enable firms to make predictions or to automate workflows. To make use of this data, though, companies must be able to create or leverage a machine learning infrastructure. Safe, accurate, and unbiased AI systems require high quality training data and accurate algorithms.

Source: https://scale.com/blog/series-c

For many companies, high quality training data is a bottle neck that can prevent them from leveraging their data. Making the investment in labeling AI data can be costly. In some cases, it takes billions or tens of billions of examples to get AI systems to human-level performance. This requires investment in training workers to label data and to build a system that can test the accuracy of human labeling. Accuracy in the training data is a key metric during this stage of a process – a poorly labeled dataset will lead to poor predictions. Consider the case of automated vehicles. If a training data set is mis-labeled (e.g. some cars are not labeled) or systematically mis-measures information (e.g. the data is not labeled when there is no sunlight), this can lead to accidents and stunt the usage of the AI.

The mission of Scale is to accelerate the development of artificial intelligence. It has created a platform that connects companies and contractors who can label data.

Scale is able to create value by commoditizing the labeling stage of the machine learning process – they are able to produce high-quality, low-cost labeled datasets for companies. They enjoy economies of scale and have minimized the risk of disintermediation, which allows them to capture value from companies that want to utilize their data for machine learning.

Economies of Scale

There are at least two scale benefits that the company enjoys. By providing a standardized set of labeling tasks, Scale is able to aggregate demand. This allows them to have lower training costs – a contractor can be trained for a set of specific tasks and specialize in labeling a certain type of data. In addition, contractors will have less idle time and may be hired for a lower price than if they were working on a one-off project.

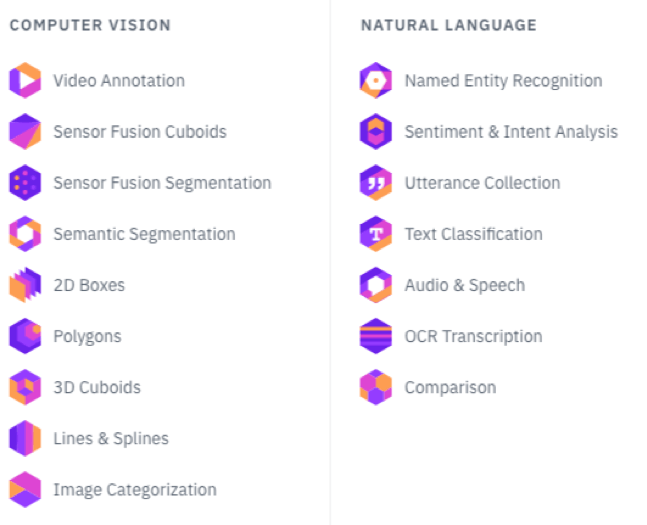

Source: https://scale.com/

Furthermore, Scale first uses a machine algorithm to help label the data. As the volume of data processed by Scale increases, this also improves the accuracy of their own labeling algorithm. This learning process can help Scale automate their own process and further lower the cost of labeling.

Source: https://scale.com/

Together, this allows Scale to generate faster labeled data at lower prices than a firm that invests in an internal infrastructure.

Risk of disintermediation

Scale shares similarities with outsourcing platforms such as upWork and ZBJ. The company creates value by delivering high-quality labeled data that companies can trust, which is partially sourced from skilled contractors. Given the similarities, the company may face the risk of disintermediation. If a company is able to match with skilled contractors who are already specialized in performing a task, could the two sides of the platform move off the platform and transact without Scale?

Source: https://scale.com/

Scale is able to reduce this risk in two ways. First, human validation is only part of the value that Scale creates. Scale also directly takes a first-pass at labeling the data with an algorithm. This drastically reduces the time to label data. Furthermore, Scale has built a variety of tools that evaluates the quality of the labeled data. Without either of these steps, a company would have limited value in disintermediating.

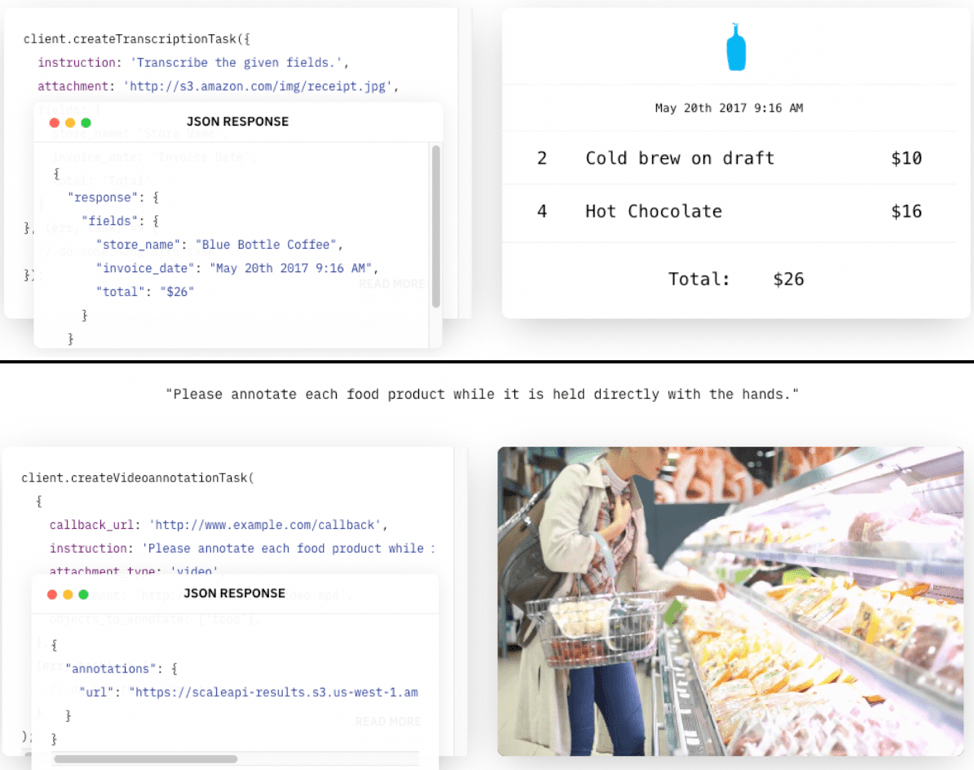

Second, Scale minimizes the interaction between the two sides of the platform. In fact, a company can simply share data through the Scale API. Given that Scale offers specific labeling tasks and embeds the human validation into the process of creating labeled data, the platform has the ability to choose how to assign the task. Scale may divide the task amongst several contractors, or it may assign the task to a contractor who specializes in the task. This creates a process in which trust in the platform is maintained without necessarily interacting with the other side of the platform.

Summary

Scale is a fascinating case of combining human intelligence and platform services to enable companies to build better AI. Rather than simply serving as a marketplace that connect companies with labelers, Scale creates value by managing this process. One open question is how Scale will continue to grow. Will it continue expand and commoditize other parts of the ML value creation process (e.g. DataRobot)? Given that it produces data that serves as input for ML models (e.g. provides training data), will there be sustained demand from its existing customers? How can Scale develop tools and products that can keep up with the increasingly diverse applications of Machine Learning?

That’s really interesting, thanks!

I have a question. My understanding is that this labeling is a necessary step to create ML algorithm, if the company already has ML algorithm that can do labeling – why human intervention is required?

Thank you for sharing this post!

I am curious to know more about how Scale decides what customers/companies to target. From their website it looks like they’ve worked with a number of big names, but are they mostly focused on SMEs or earlier stage organizations? Because ML is becoming ubiquitous, customer selection seems to be an important part to how they will remain competitive in the future. How do some of the big players continue to use Scale? For ongoing innovation?