Valve using machine learning and deep learning to catch cheaters in CS:GO

CS:GO is one of the most popular and competitive video games in the world. In recent years, reports of players using "hacks" in the game have increased, hurting satisfaction among CS:GO's user base. To counter this rampant cheating, Valve turned to machine learning and deep learning.

What is CS:GO?

Counter-Strike: Global Offensive (CS:GO) is a popular online first-person shooter released by Valve in 2012[1]. At the peak of its popularity in 2016, CS:GO boasted 850,000 concurrent players on Steam (Statista, 2018). CS:GO is also played competitively around the world as an “e-sport” in a 5 vs. 5 format, with 49 officially recognized tournaments offering a combined prize pool of over $5.7 million in 2017 (Liquipedia.net, 2018). Players have credited its deep, skill-based gameplay (Exhibit 1) for its long-term success; however, this “skill-based” aspect was undermined by a growing perception of cheating within the game.

Cheating in CS:GO

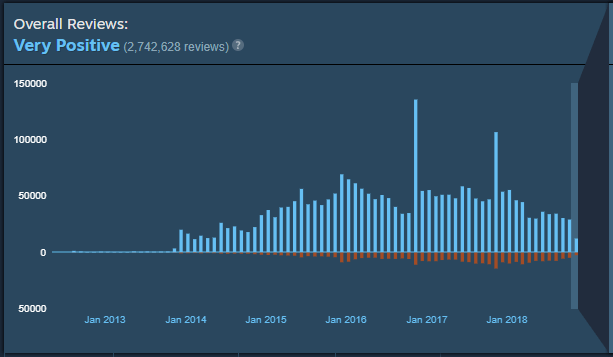

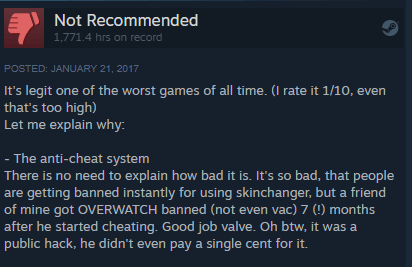

From 2016 through the first quarter of 2018, despite generally positive reviews, Valve received a growing number of complaints on its forum (Exhibit 2). Most of them concerned cheating, ranging from general dissatisfaction with how rampant it had become (Exhibit 3) to specific accounts of cheaters using “wall-hacks” (which let a player see through walls to easily locate opponents) and “aim-bots” (cheats that give 100% shooting accuracy without the player having to aim with the mouse).

Valve already had a mechanism in place, Valve Anti-Cheat (VAC), which detected client-side modifications and banned the users running them from using its system (Support.steampowered.com, 2018), but Valve was struggling to keep up with cheat developers. First, VAC required Valve developers to write thousands of detections to find individual cheat programs, and once cheat developers learned of new detections, they simply modified their programs to evade them. Second, because a ban was such a serious penalty, with major customer-satisfaction implications (banned players lost access to content they had paid real money for), Valve had to be 100% sure that someone was cheating. These challenges created a “treadmill of work” for Valve developers, so they turned to machine learning and deep learning as potential alternatives (McDonald, 2018).

The trust score

Valve reasoned that one alternative to banning cheaters was to reduce their impact on players who wanted to play fairly. To do this, it developed a “trust score,” which used a “Naïve Bayes” machine learning algorithm to estimate how likely a player was to cheat. The algorithm took into account factors such as the number of times a player had been reported for cheating by other players and the number of previously banned accounts sharing the player’s IP address, along with other metrics. Instead of banning players with low trust scores, Valve matched them with each other (McDonald, 2018). In effect, players with high trust scores played only against other players with high trust scores, while players with low trust scores played against other suspected cheaters. This reduced cheating complaints.
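The trust-score idea can be sketched as a small categorical Naïve Bayes classifier. This is a minimal illustration under stated assumptions, not Valve's implementation: the feature buckets, the `fair`/`cheater` labels, the toy data, and the names `featurize`, `NaiveBayesTrust`, and `matchmake` are all hypothetical. The sketch scores each player on the two signals mentioned above (reports from other players and banned accounts sharing an IP address), treats the estimated probability of being a fair player as the trust score, and then fills matches by sorting the queue on that score.

```python
import math
from collections import Counter

# Hypothetical discretization of the two signals described in the post.
# Valve's real features, buckets, and training data are not public.
REPORT_BUCKETS = ("0", "1-3", "4+")
BANNED_IP_BUCKETS = ("0", "1+")
FEATURE_VALUES = (REPORT_BUCKETS, BANNED_IP_BUCKETS)


def featurize(player):
    """Map a player record to one categorical value per feature."""
    r = player["reports"]
    reports = "0" if r == 0 else ("1-3" if r <= 3 else "4+")
    banned = "0" if player["banned_same_ip"] == 0 else "1+"
    return (reports, banned)


class NaiveBayesTrust:
    """Categorical Naive Bayes with Laplace (add-one) smoothing."""

    def fit(self, players, labels):
        self.class_counts = Counter(labels)
        # feature_counts[(label, feature_index, value)] -> count
        self.feature_counts = Counter()
        for player, label in zip(players, labels):
            for i, value in enumerate(featurize(player)):
                self.feature_counts[(label, i, value)] += 1
        return self

    def posterior(self, player):
        """Return P(label | features) for every label seen during training."""
        total = sum(self.class_counts.values())
        log_scores = {}
        for label, n_label in self.class_counts.items():
            score = math.log(n_label / total)  # log prior
            for i, value in enumerate(featurize(player)):
                # "Naive" assumption: features are independent given the label.
                count = self.feature_counts[(label, i, value)]
                score += math.log((count + 1) / (n_label + len(FEATURE_VALUES[i])))
            log_scores[label] = score
        # Normalize in log space for numerical stability.
        top = max(log_scores.values())
        weights = {k: math.exp(s - top) for k, s in log_scores.items()}
        z = sum(weights.values())
        return {k: w / z for k, w in weights.items()}

    def trust_score(self, player):
        """Trust score = estimated probability that the player is NOT cheating."""
        return self.posterior(player).get("fair", 0.0)


def matchmake(players, model, match_size=10):
    """Group players with similar trust scores into the same match."""
    ranked = sorted(players, key=model.trust_score, reverse=True)
    return [ranked[i:i + match_size] for i in range(0, len(ranked), match_size)]


# Toy training data, made up for illustration.
history = [
    {"reports": 0, "banned_same_ip": 0}, {"reports": 1, "banned_same_ip": 0},
    {"reports": 0, "banned_same_ip": 0}, {"reports": 2, "banned_same_ip": 0},
    {"reports": 5, "banned_same_ip": 1}, {"reports": 7, "banned_same_ip": 2},
    {"reports": 4, "banned_same_ip": 0},
]
verdicts = ["fair", "fair", "fair", "fair", "cheater", "cheater", "cheater"]
model = NaiveBayesTrust().fit(history, verdicts)

queue = [
    {"name": "A", "reports": 0, "banned_same_ip": 0},
    {"name": "B", "reports": 6, "banned_same_ip": 1},
    {"name": "C", "reports": 1, "banned_same_ip": 0},
    {"name": "D", "reports": 5, "banned_same_ip": 3},
]
matches = matchmake(queue, model, match_size=2)
```

With `match_size=2`, the two rarely reported players (A and C) land in one match and the two heavily reported players (B and D) in the other, mirroring the pairing described above. A real 5 vs. 5 queue would use the default `match_size=10` and many more signals.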

Deep learning

To reduce how often cheating occurred in the first place, Valve looked to deep learning. First, it created a program called “Overwatch,” in which experienced players designated as “investigators” watched replays of reported games to determine whether the reported player was cheating. This produced a 15-30% conviction rate and a very low false conviction rate (Blog.counter-strike.net, 2018). In other words, humans were fairly good at judging whether a player was cheating, even when VAC could not identify the cheat. Valve reasoned that with this conviction data, it could use deep learning in the form of neural networks to build a program that could “learn” to detect cheats the same way a human would. VACnet, as it was later named, was trained on the investigators’ Overwatch convictions (see Exhibit 4 for the process) and ran continuously on ~3,500 processors to scan the 150,000 daily matches played on Valve’s CS:GO servers (McDonald, 2018). Early results showed that conviction rates rose from 15-30% to 80-95% (close to 100% right after retraining), but VACnet’s results were still handed to a human to determine guilt and the appropriate punishment.
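VACnet's actual architecture and inputs are not described in this post, so the following is only a toy illustration of the workflow: a single sigmoid neuron (equivalent to logistic regression, the smallest possible neural network) trained by stochastic gradient descent on Overwatch-style verdicts. The two per-player features (`snap_speed` and `headshot_rate`, normalized to the range 0 to 1), the data, the helper names, and the `flag_for_review` threshold are all hypothetical. As in the post, the model only queues suspects for a human; it never decides guilt on its own.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w, b, x):
    """Suspicion score in (0, 1) for feature vector x."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_neuron(samples, labels, lr=0.5, epochs=2000, seed=0):
    """Stochastic gradient descent on log-loss for one sigmoid neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in samples[0]]
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            err = predict(w, b, x) - y  # d(log-loss)/d(pre-activation)
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def flag_for_review(w, b, cases, threshold=0.8):
    """Return ids of cases scoring above threshold; a human decides guilt."""
    return [cid for cid, x in cases.items() if predict(w, b, x) > threshold]


# Hypothetical training set: (snap_speed, headshot_rate) per reported player,
# labeled 1 if investigators convicted them and 0 if they were cleared.
X = [(0.90, 0.80), (0.85, 0.90), (0.95, 0.70), (0.80, 0.85),
     (0.20, 0.30), (0.30, 0.20), (0.25, 0.40), (0.40, 0.35)]
y = [1, 1, 1, 1, 0, 0, 0, 0]
w, b = train_neuron(X, y)

new_cases = {"match_101": (0.92, 0.88), "match_102": (0.22, 0.25)}
review_queue = flag_for_review(w, b, new_cases)
```

Only `match_101` should cross the threshold and reach the review queue. A production system like VACnet is a far larger network trained on much richer data, so this shows the shape of the loop (human verdicts in, suspicion scores out, humans decide), not its scale.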

What’s Next?

Valve has made impressive strides in reducing the occurrence and impact of cheating in CS:GO; however, it has only scratched the surface. In his talk on Valve’s move to deep learning, McDonald mentions that VACnet is good only at detecting blatant cheaters (McDonald, 2018). Someone who tunes their “aim-bot” to hit only 60% of the time may not be detected as easily. Further, as gamers attach more monetary value to in-game items that can usually only be acquired by winning games, cheat developers have a growing incentive to improve their programs. One example of such an item, the “Ethereal Flames Pink War Dog” pet in Dota 2 (another Valve game), was sold for $38,000 on Valve’s online marketplace (Successstory.com, 2018). Will Valve and other game companies be able to manage these cheaters successfully? Will cheat developers eventually outsmart these machine learning and deep learning algorithms? Only time will tell.

[1] CS:GO is essentially an updated spin-off of the original Counter-Strike game, which was released in 2000 as a mod for Half-Life (another Valve game).

Statista. (2018). CS:GO peak players on Steam 2018 | Statistic. [online] Available at: https://www.statista.com/statistics/808630/csgo-number-players-steam/ [Accessed 12 Nov. 2018].

Liquipedia.net. (2018). Major Tournaments – Liquipedia Counter-Strike Wiki. [online] Available at: https://liquipedia.net/counterstrike/Major_Tournaments [Accessed 13 Nov. 2018].

Support.steampowered.com. (2018). Valve Anti-Cheat System (VAC) – Valve Anti-Cheat (VAC) System – Knowledge Base – Steam Support. [online] Available at: https://support.steampowered.com/kb/7849-RADZ-6869/ [Accessed 13 Nov. 2018].

McDonald, J. (2018). GDC 2018: John McDonald (Valve) – Using Deep Learning to Combat Cheating in CSGO. [online] YouTube. Available at: https://www.youtube.com/watch?time_continue=101&v=ObhK8lUfIlc [Accessed 13 Nov. 2018].

Blog.counter-strike.net. (2018). Counter-Strike: Global Offensive. [online] Available at: http://blog.counter-strike.net/index.php/overwatch/ [Accessed 13 Nov. 2018].

Successstory.com. (2018). 10 Most expensive virtual items in Video Games | SuccessStory. [online] Available at: https://successstory.com/spendit/most-expensive-virtual-items-in-video-games [Accessed 13 Nov. 2018].

I think the issue you are pointing out is very relevant, especially considering that cheating in some cases might be as lucrative as a small bank robbery, as you have pointed out. I wonder if there would be a way to get actual human players more involved in feeding the machine learning algorithm, since, as you noted, they can detect cheating very well. This would give the algorithm access to far more data than developers alone could provide, possibly improving the results significantly.

I liked how Valve used the trust score to have cheaters play against cheaters, protecting the experience of those who wanted to play fair. Cheating in video games has a long and proud history, and it is arguably even part of the culture: finding cheat codes, passwords, secret shortcuts, and similar tricks to improve performance, which some people like to use. On the other hand, in a very competitive game like CS, of course many gamers will want a fair playing field to really test their skills, and that is fine as well. Recognizing that there are different types of players and offering them different experiences based on those differences is a good solution to the cheating problem.

Really interesting application of ML, thanks!

I found it particularly clever that they matched players with low trust scores against each other, while players with high trust scores played only against other high-trust players. I can see how that translated into a reduction in cheating complaints; nevertheless, I’m not sure it will reduce cheating in the long run. Wouldn’t cheaters have an incentive to escalate their cheating techniques more rapidly in the new environment they are placed in? As Der Biez mentioned, I would like to see an initiative from Valve that incentivizes current users to spot cheating in order to feed the algorithm (maybe give them a monetary reward every time they identify and prove that someone was cheating?)