Using Machine learning to protect wild animals

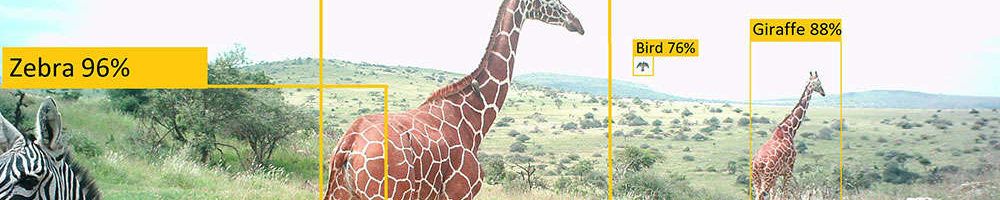

The Zoological Society of London (ZSL) is now partnering with Google’s Cloud AutoML platform to use machine learning to protect wild animals. The partnership can help ZSL leverage Google’s expertise in image recognition to automatically analyze millions of images captured by cameras in the wild and thus identify species movement and potential poachers.

Monitoring and protecting wild animals across vast and remote habitats is a huge challenge. ZSL has been using camera traps to capture images of animals for some time. The cameras have sensors to detect motion and heat, so it can be triggered when wildlife or human moves past. The cameras can capture images and gather data from hard-to-reach areas, and the data can be used to understand the movement of animals and help scientist develop conservation plans for each of the species.

Traditionally all the analysis work are done by human manually. ZSL conservationists had to tag hundreds of images every day in order to analyze the data, which is very labor intensive and time-consuming. According to ZSL, in some cases, reporting on that data takes up to nine months, by which time the situation or animal movements may have been changed and ZSL strategies also need to be changed.

Thanks to machine learning algorithm, the tagging work now can be done automatically and it cut the nine months down to an instant. ZSL started building its model in locations it regularly does camera trapping, such as Costa Rica, where it holds a large amount of existing data. ZSL provided Google with existing data of 1.5 million “tagged images” to facilitate the learning of Google AutoML, and later tested the model with new data to refine the model.

The organization is now training custom models by providing more conservation details like region, environment, and species, aiming to create models bespoke for different regions and different species sets. ZSL’s long-term goal is to create image recognition models that any conservationist can use to identify specific species and humans by simply uploading their data and getting insights back.

Value creation:

- By automatically analyzing millions of images, the machine learning can help ZSL speed up data processing. It will benefit conservationists in identifying animal species more efficiently and making conservation plan by monitoring and tracking their movements. It will be particularly helpful in protecting endangered species.

- Additionally, the tool can also help detect poachers, sending real-time alert to conservationists and helping respond to the threats as soon as possible. I am looking forward to seeing the tool helps reduce the trade in elephant ivory, rhino horn, and other wildlife products.

Ongoing challenges:

- The early pilot proved its usefulness, but the accuracy of image recognition is still challenged. According to a report published on CBR “last year, skiers were miss-identified for a dog (by the model)”. It reminds me the example of “bagel or puppy” we talked in class. However, with a larger dataset and continuously improved technology, the algorithms will be able to identify more subtle variations and be more accurate in the future.

- I think implementing the technology may face some challenges, as it requires a large number of cameras that can endure extreme conditions and can be connected from extremely remote areas. As a charity organization that relies on government funding and donations, ZSL may need to convince its stakeholders the value created by machine learning algorithm and collect enough funding to deploy the technology.

This is just a start, “Our longer-term goal is to provide a health check for the planet, and that’s a very big ambition obviously, but as it starts to become possible to perform more of these studies and get the results back more quickly, our view of biodiversity will grow and grow.” Says Sophie Maxwell, head of conservation technology at ZSL.

Sources:

- https://www.cbronline.com/news/google-ai-algorithm-zoos

- https://diginomica.com/2018/02/02/zsl-puts-machine-learning-work-studying-camera-trap-data/

- https://www.zsl.org/

- https://www.computerworlduk.com/data/how-zsl-is-using-cloud-automl-prevent-poaching-with-automated-image-tagging-3671144/

- https://www.networksasia.net/article/how-cloud-automl-helps-zsl-london-zoo-prevent-poaching-automated-image-tagging.1517447038

- http://www.thehindu.com/business/Industry/google-to-make-artificial-intelligence-accessible-to-every-business/article22457587.ece

Interesting post Ting. I can see how there is a lot of value in using machine learning data analysis to be able to monitor such remote areas. Currently they are using a huge amount of human labor, which i can imagine is expensive for cash strapped conservation groups. This technology seems like it would be more practical in some geographies than others. For example, it would be easier to capture images in the Serengeti plains than in the densely vegetated areas of South Africa (where it is difficult to see animals and poachers alike), thus limiting the scale of the value creation. I also think that it could be a huge challenge to use artificial intelligence to accurately identify poachers (as opposed to conservation workers, biologists, etc in the bush) and even more challenging to identify them in time for intervention.

Great read Ting. I agree with you in that one of the main challenges will be getting the right level of accuracy in the system. If ZSL requires the system to simply identify species then I can see how this approach would work. However, if ZSL needs to be able to identify individual animals and not just species (i.e. endangered species with very low population numbers) I don’t see how this approach would work. For the latter case I think that tagging will still be a better solution.

I love this Ting! Thanks for sharing. I love the broader vision of being able to track the biodiversity/health of all populations, though it does seem a bit ambitious. To push this further, how would you actually track a population’s health beyond just living? Just because you are able to track that a certain population is decreasing, that doesn’t necessarily help you determine the why or fix it, and it seems that in some cases waiting for there to be a problem is waiting too long. However, there should be more hands on deck to determine the why a population is decreasing and how to fix it since there will be so much labor saved in data collection!

Interesting post! It’s great to see that work that was once performed manually is now being tagged automatically through these techniques. To your point, I’m also pretty concerned about the fact that some things may be mis-tagged, which requires humans to detect and fix. I suppose that if there is enough data, the algorithm will eventually learn to correctly identify, but until then, humans may still need to perform manual check-ups.